Join our community to see how developers are using Workik AI every day.

Supported AI models on Workik

GPT 5.2 Codex, GPT 5.2, GPT 5.1 Codex, GPT 5.1, GPT 5 Mini, GPT 5

Gemini 3.1 Pro, Gemini 3 Flash, Gemini 3 Pro, Gemini 2.5 Pro

Claude 4.6 Sonnet, Claude 4.5 Sonnet, Claude 4.5 Haiku, Claude 4 Sonnet

DeepSeek Reasoner, DeepSeek Chat, DeepSeek R1 (High)

Grok 4.1 Fast, Grok 4, Grok Code Fast 1

Model availability may vary depending on your Workik plan.

Features

Dynamic Route Generation

Scaffold file-based routes and nested layouts with context-aware data loaders and actions.

Optimized Data Loading

Generate loader functions with async data fetching, caching, and streaming aligned with Remix conventions.

Form and Action Handlers

AI creates validated form submissions and Remix actions with automatic error handling and redirection logic.

Edge Deployment Support

Generate AI-enhanced, edge-optimized code ready for deployment on Cloudflare Workers, Vercel, or Netlify Edge.

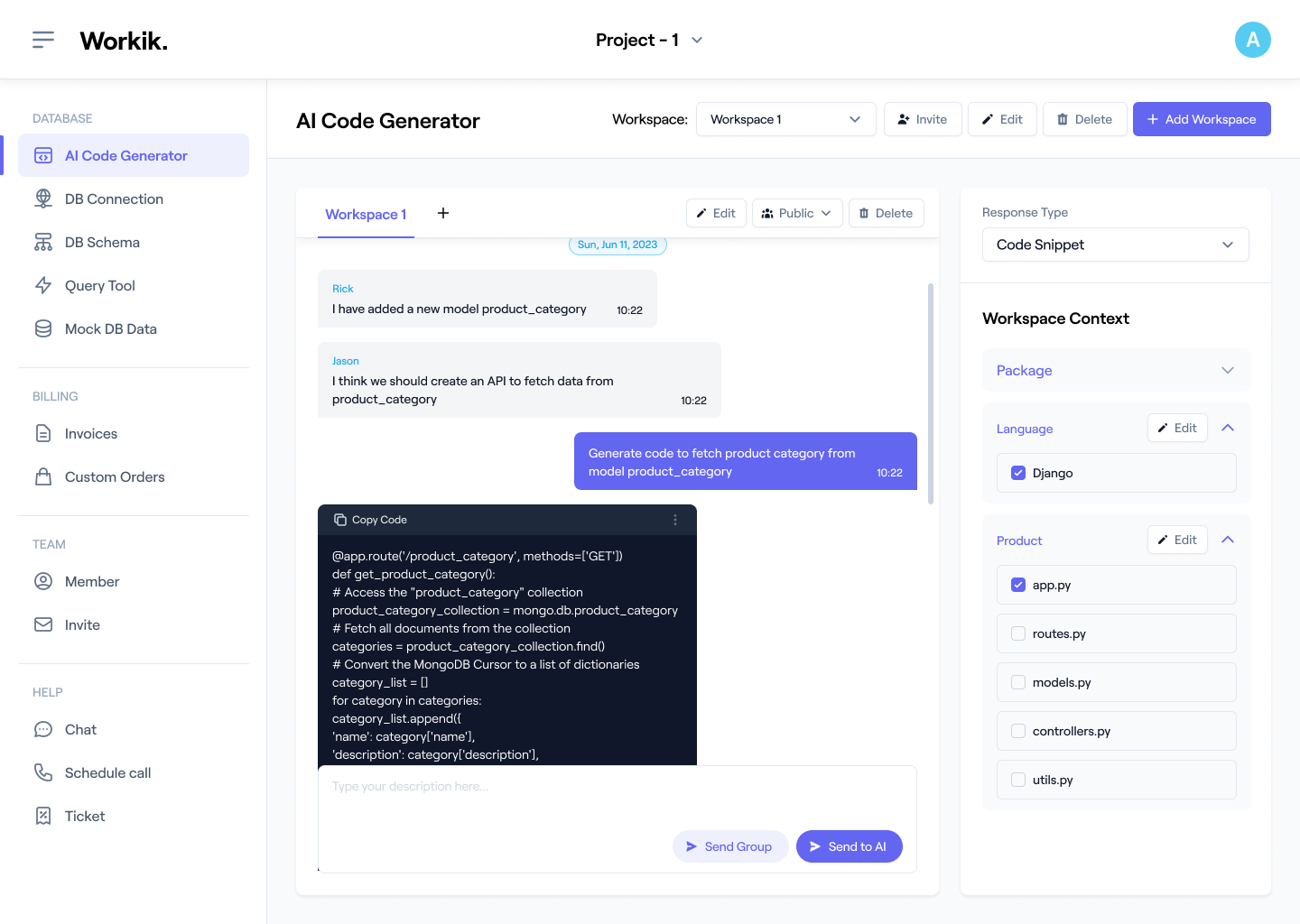

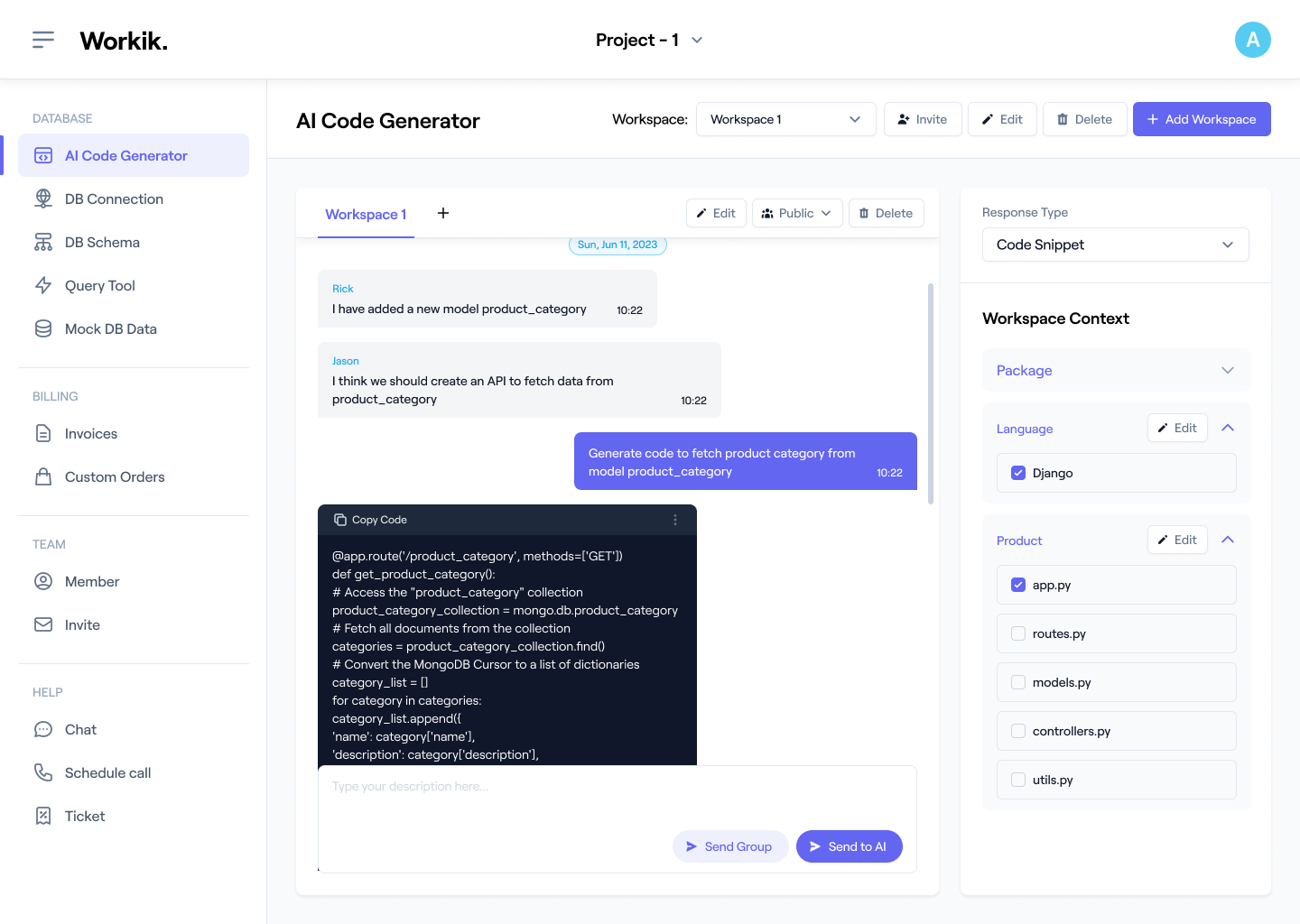

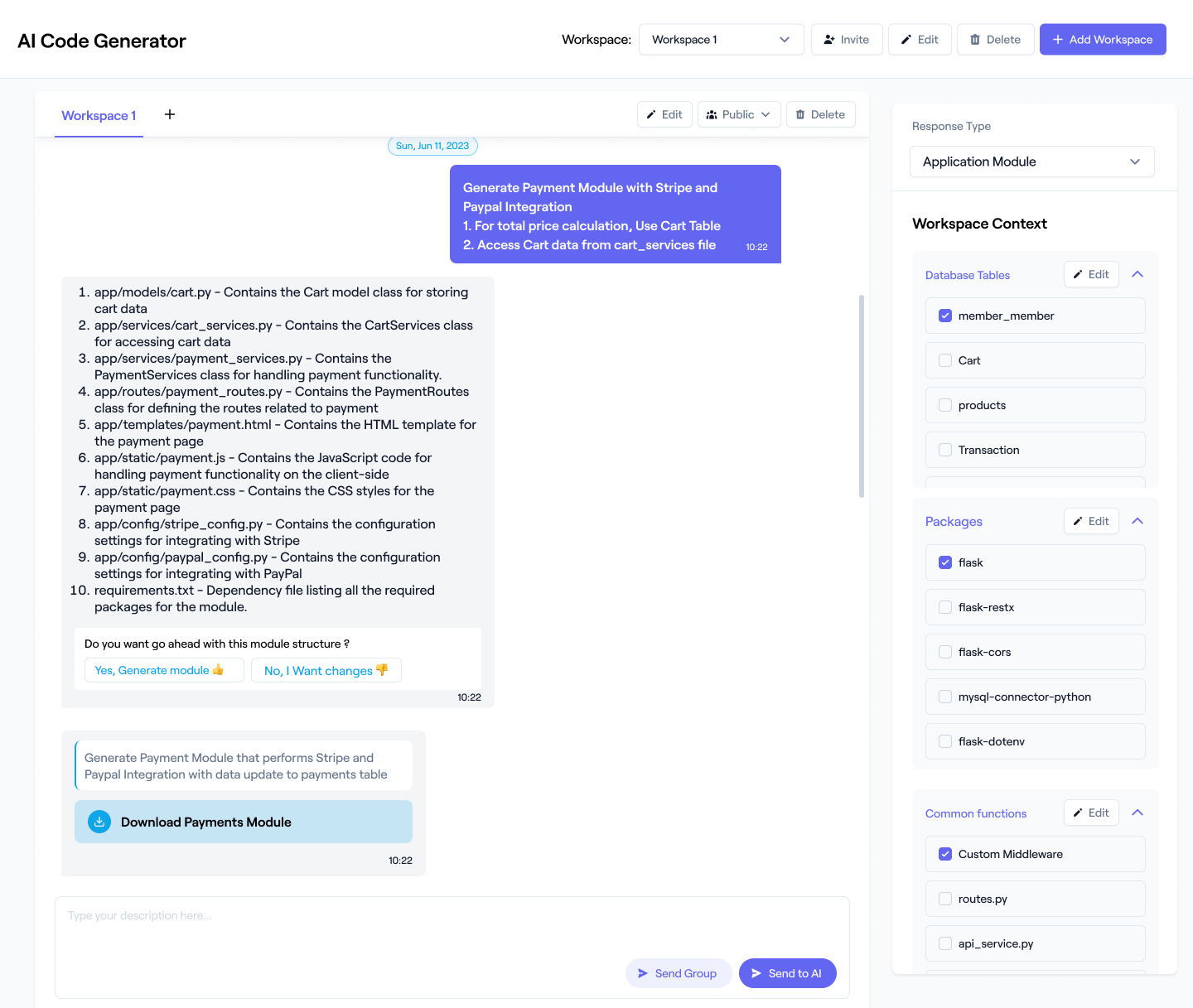

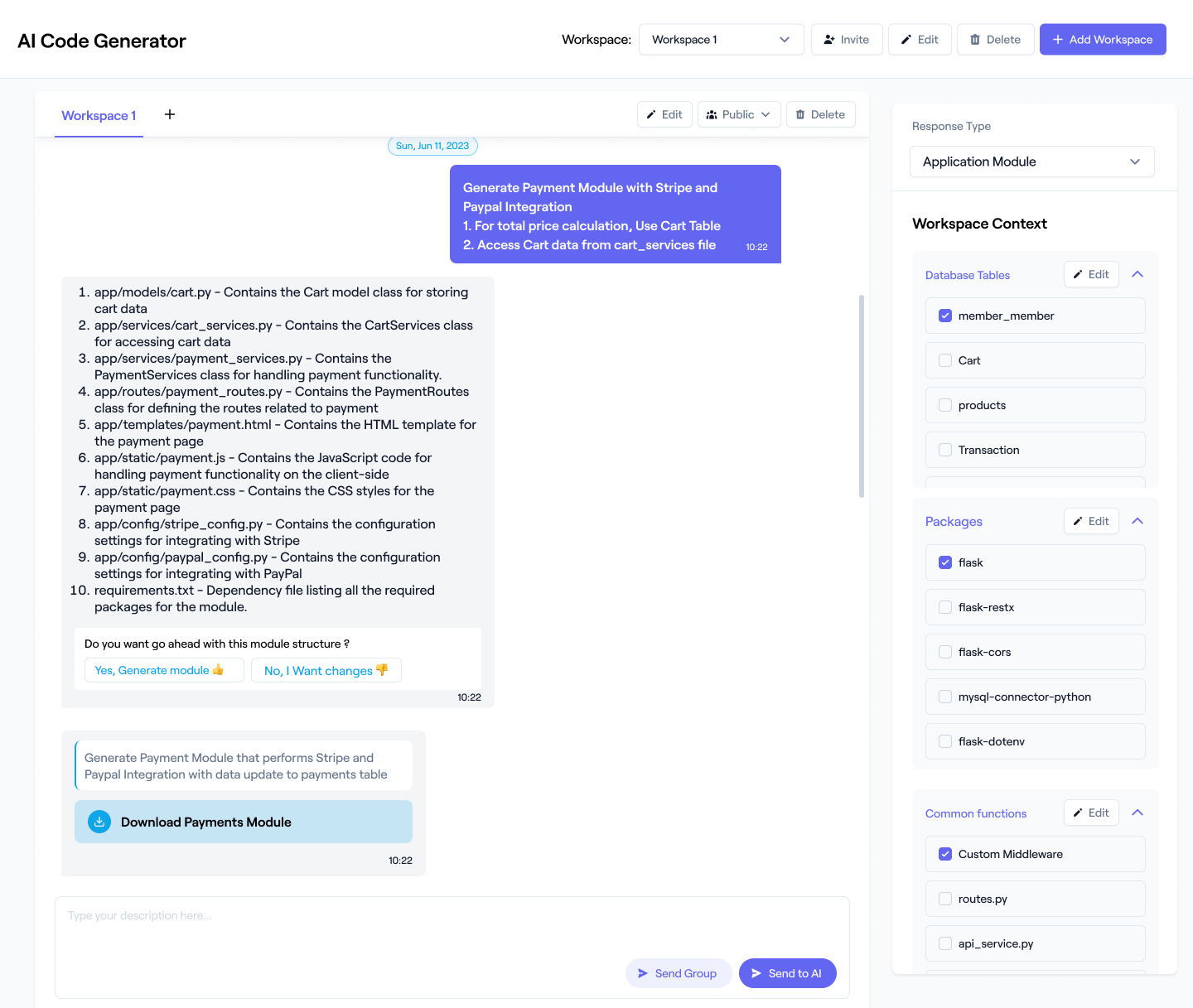

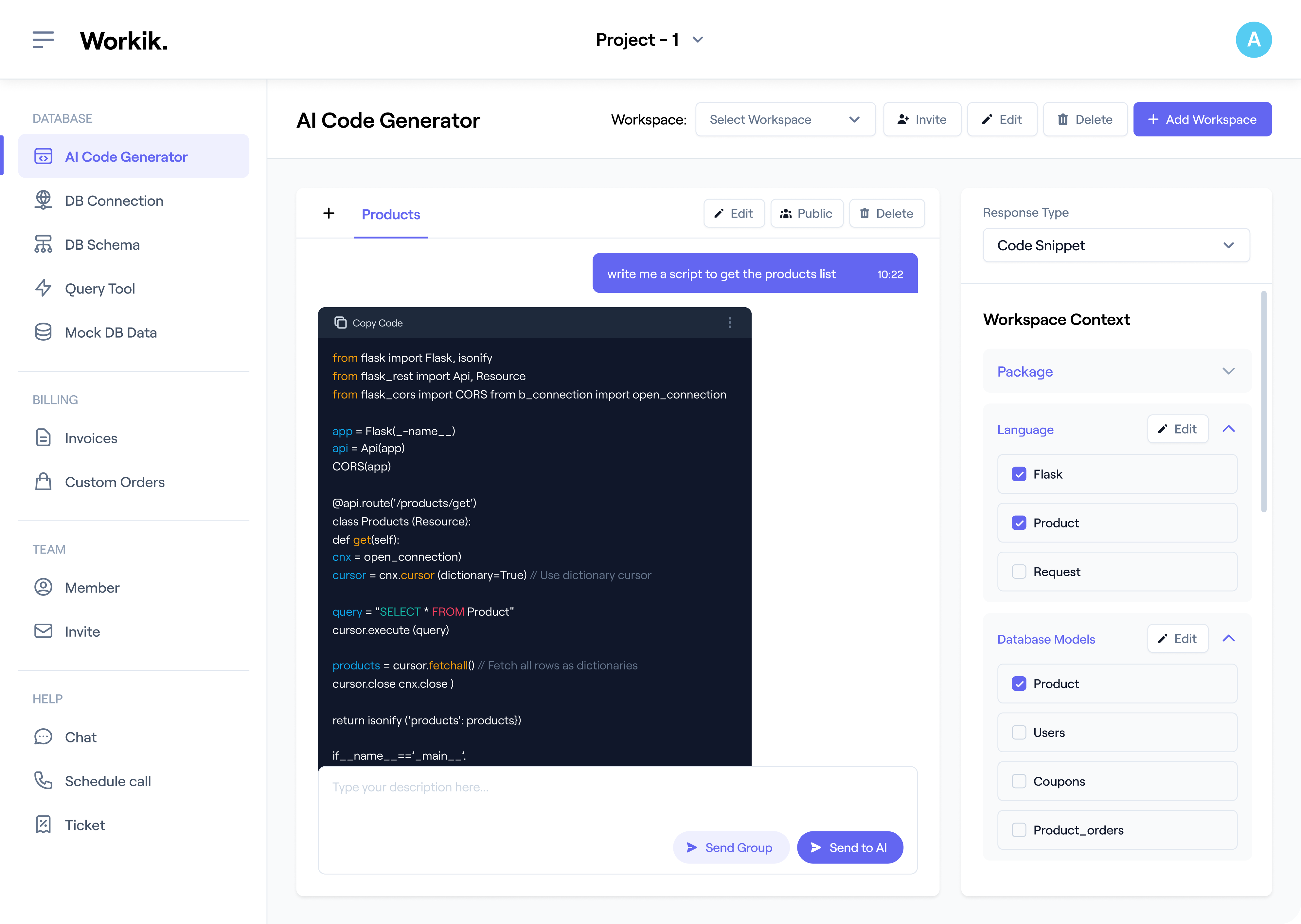

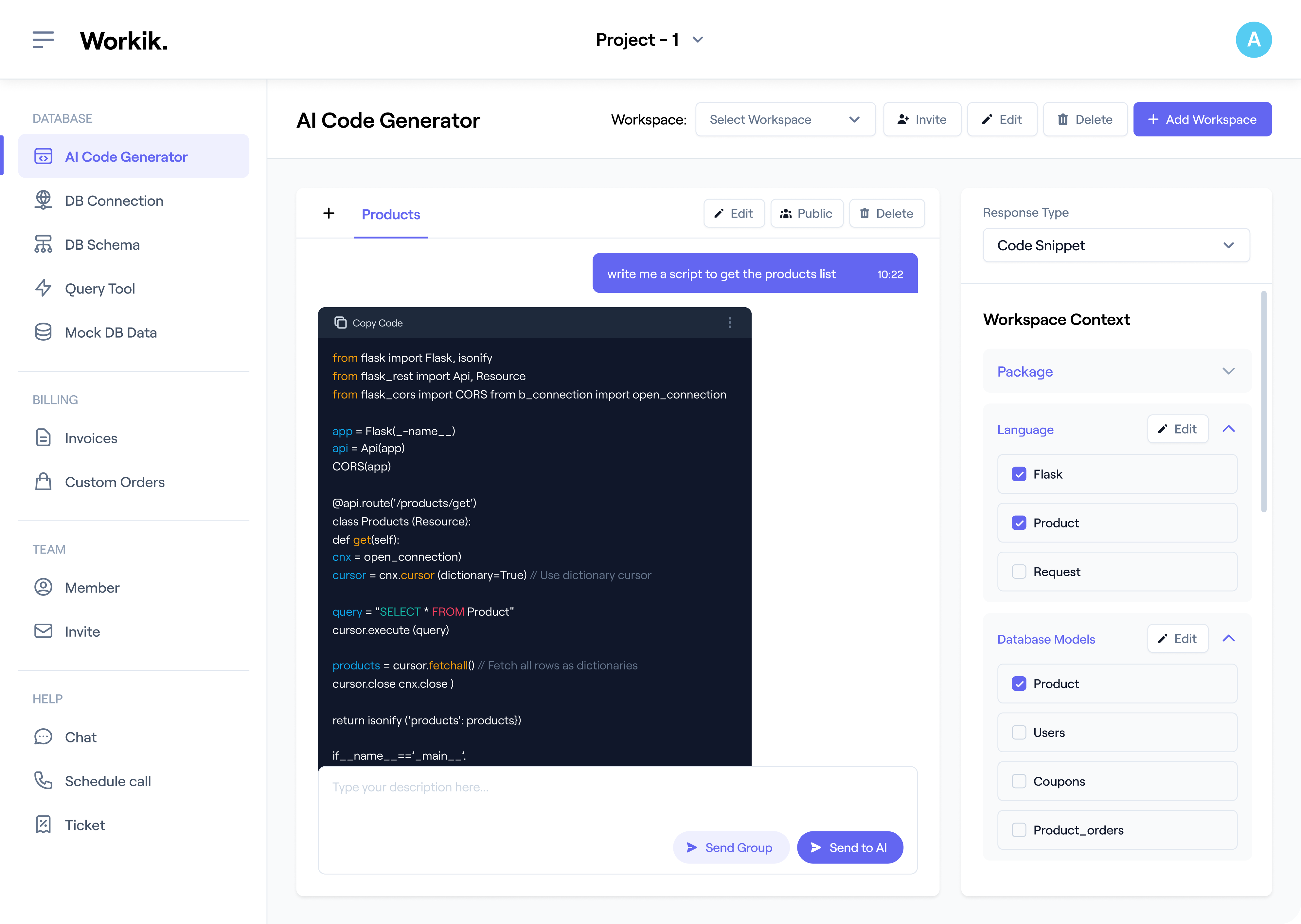

How it works

Sign up in seconds using Google or email. Create your workspace instantly and start generating PySpark code right away.

Add project context for accurate AI understanding. Connect a GitHub, GitLab, or Bitbucket repo, and include Spark SQL schemas, ETL logic, and more for tailored PySpark output.

Leverage Workik AI to generate, debug, and optimize PySpark code. Workik AI supports ETL creation, DataFrame transformations, and more within your workspace.

Invite teammates to share workspaces for joint debugging and code reviews. You can also create pipelines to automate PySpark testing and execution using AI.

TESTIMONIALS

Real Stories, Real Results with Workik

"Our team used Workik AI to optimize MLlib pipelines and manage feature engineering with almost zero manual coding."

Aisha Khan

Machine Learning Engineer

"Workik AI handled everything from Spark SQL tuning to DataFrame transformations flawlessly. It’s really impressive."

Carlos Rivera

Big Data Developer

"I generated clean ETL scripts, debugged them with AI, and deployed them in record time. Game-changer for data engineers."

Priya Mehta

Senior Data Engineer

What are the most popular use cases for the Workik PySpark Code Generator?

Developers use the PySpark Code Generator for a wide variety of big data and machine learning tasks, including but not limited to:

* Build and automate ETL and ELT pipelines for batch and streaming data.

* Create optimized DataFrame transformations and Spark SQL queries.

* Generate MLlib pipelines for model training, evaluation, and feature engineering.

* Refactor existing PySpark scripts for performance tuning or migration to Databricks or EMR.

* Create Delta Lake jobs for merges, upserts, and schema evolution.

* Automate streaming pipelines for real-time data sources like Kafka.

* Generate validation and testing scripts for PySpark transformations.

* Produce inline documentation and workflow summaries for collaboration and maintenance.

What context-setting options are available when using Workik for PySpark projects?

Adding context is optional; it helps the AI tailor its output to your development setup. You can:

* Connect repos from GitHub, GitLab, or Bitbucket for instant access to your PySpark codebase.

* Define languages, frameworks, and libraries (e.g., PySpark, MLlib, Delta Lake).

* Upload schemas or data samples to guide ETL logic.

* Add API blueprints or endpoints if Spark interacts with REST services.

* Include existing PySpark scripts for debugging or refactoring.

* Add Spark cluster configurations (executor memory, partition strategy) for performance-aware code.

* Provide dataset metadata (S3 paths, Hive tables, or file formats) for precise read/write operations.

How can AI help improve performance tuning in PySpark jobs?

AI can detect bottlenecks and inefficiencies in your Spark DAGs and suggest optimizations like partition pruning, broadcast joins, and caching strategies. It can also recommend values for configurations such as spark.sql.shuffle.partitions or executor memory, based on workload patterns and data volume, to improve cluster efficiency.
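As a rough, hand-written sketch of two of those optimizations (not actual Workik output), the helpers below size spark.sql.shuffle.partitions from an estimated shuffle volume and join a small dimension table with a broadcast hint. The 128 MB-per-partition target, the 2000-partition cap, and the function names are illustrative assumptions, not Spark defaults.

```python
import math

# Illustrative target of ~128 MB of shuffle data per partition (an assumption,
# not a Spark default); tune it for your cluster and workload.
TARGET_PARTITION_BYTES = 128 * 1024 * 1024


def estimate_shuffle_partitions(shuffle_bytes, target_bytes=TARGET_PARTITION_BYTES,
                                min_partitions=1, max_partitions=2000):
    """Hypothetical helper: choose a partition count so each holds ~target_bytes."""
    if shuffle_bytes <= 0:
        return min_partitions
    wanted = math.ceil(shuffle_bytes / target_bytes)
    return max(min_partitions, min(wanted, max_partitions))


def tune_and_join(spark, facts, dims, key, shuffle_bytes):
    """Hypothetical helper: size shuffles from an estimated volume, then broadcast-join."""
    # Imported here so estimate_shuffle_partitions() can be used without Spark installed.
    from pyspark.sql.functions import broadcast

    spark.conf.set("spark.sql.shuffle.partitions",
                   str(estimate_shuffle_partitions(shuffle_bytes)))
    # The broadcast hint ships the small `dims` table to every executor,
    # so the large `facts` table does not need to be shuffled for this join.
    return facts.join(broadcast(dims), on=key, how="inner")
```

On Spark 3.2 and later, Adaptive Query Execution is enabled by default and can coalesce small shuffle partitions at runtime, so a value set this way mainly acts as the starting partition count.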

How does AI assist with Spark SQL query optimization?

AI analyzes query plans (explain() output) to identify costly operations and rewrites queries to improve performance. For instance, it can recommend pushing filters before joins, replacing UDFs with native Spark functions, or restructuring nested subqueries for faster execution across large datasets.

Can I use AI to generate PySpark code for both batch and streaming workflows?

Yes. Developers can choose between batch processing for offline data or Structured Streaming for real-time analytics. For example, AI can create streaming jobs that read events from Kafka and aggregate them every few seconds, or batch jobs that transform Parquet files and load them into Delta Lake tables.

What are some practical machine learning workflows I can build in PySpark with AI?

You can use AI to scaffold full ML pipelines, from feature extraction to model evaluation. For example, generate code to:

* Assemble and scale features using VectorAssembler and StandardScaler

* Train classification models with RandomForestClassifier or GBTClassifier

* Evaluate accuracy using MulticlassClassificationEvaluator

* Save and reload models using MLlib’s persistence API

Can AI help with ETL, data processing, and handling complex file formats like Parquet or ORC?

Yes. AI can generate ETL pipelines that efficiently handle Parquet, ORC, or Avro formats with correct schema inference, compression, and partitioning. It can also suggest performance optimizations such as predicate pushdown and vectorized reads for faster I/O during data transformations.

Is it possible to use AI for automating PySpark testing, validation, and data quality checks?

Yes. AI can automatically generate unit tests for PySpark transformations using pytest or chispa. It can also create data validation layers that check schema consistency, null handling, and outlier detection before loading data into production pipelines.

Can AI assist with Spark job monitoring and troubleshooting?

AI can analyze Spark job logs, task metrics, and executor-level statistics to pinpoint issues like data skew, repeated recomputation of uncached data, or failed stages. It then recommends fixes, for instance repartitioning large joins, adjusting memory allocation, or persisting intermediate DataFrames.
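Skew of the kind described above can be spotted from row counts alone. This sketch compares the largest partition to the median and lists the most frequent key values; the function names and any threshold you apply to the ratio are assumptions, and the Spark UI's per-task durations remain the primary signal.

```python
import statistics


def skew_ratio(partition_sizes):
    """Largest partition divided by the median partition; ~1.0 means well balanced."""
    if not partition_sizes:
        return 0.0
    median = statistics.median(partition_sizes)
    if median == 0:
        return float("inf") if max(partition_sizes) > 0 else 0.0
    return max(partition_sizes) / median


def rows_per_partition(df):
    """Count rows in every partition, including empty ones, without collecting rows."""
    return df.rdd.mapPartitions(lambda rows: [sum(1 for _ in rows)]).collect()


def hot_keys(df, key, n=5):
    """Return the n most frequent values of a join or group key with their counts."""
    # Imported here so skew_ratio() can be used without Spark installed.
    from pyspark.sql import functions as F

    return df.groupBy(key).count().orderBy(F.desc("count")).limit(n).collect()
```

If a single key dominates, repartitioning on that key will not help, because every row for a given key lands in the same partition; salting the key or relying on Adaptive Query Execution's skew-join handling (spark.sql.adaptive.skewJoin.enabled) are the usual alternatives.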

PySpark Question & Answer

PySpark is the Python API for Apache Spark, a powerful open-source framework for distributed data processing and analytics. It allows developers to work with massive datasets across clusters of machines using a high-level Python interface.

Popular frameworks and libraries in PySpark development include:

Data Processing and Storage:

Apache Spark Core, Delta Lake, Hadoop HDFS

Data Querying and Analysis:

Spark SQL, Hive, Pandas API on Spark

Machine Learning and AI:

MLlib, TensorFlow, PyTorch, Scikit-learn integration

Streaming and Real-Time Processing:

Spark Structured Streaming, Apache Kafka

Data Orchestration and Automation:

Apache Airflow, Workik Pipelines

Data Formats and Connectors:

Parquet, Avro, ORC, JDBC, Cassandra, Snowflake connectors

Development and Debugging:

Jupyter Notebooks, Databricks, PySpark Shell

Popular use cases of PySpark include:

ETL and Data Pipelines:

Build and automate large-scale ETL and ELT workflows for structured and unstructured data.

Data Warehousing and Analytics:

Run distributed Spark SQL queries and aggregations across petabyte-scale datasets.

Machine Learning and AI:

Train and evaluate MLlib models or integrate PyTorch/TensorFlow for advanced analytics.

Streaming and Real-Time Processing:

Process real-time data streams from Kafka or IoT sources using Structured Streaming.

Data Engineering Automation:

Schedule, test, and optimize data pipelines with orchestration tools like Airflow or Workik.

Data Migration and Refactoring:

Convert legacy scripts or optimize existing PySpark jobs for modern data lakes.

Data Quality and Governance:

Validate schema consistency, monitor pipeline integrity, and maintain data lineage across jobs.

Career opportunities and technical roles for PySpark professionals include Data Engineer, Big Data Developer, Machine Learning Engineer, ETL Developer, Data Architect, Analytics Engineer, DevOps/DataOps Specialist, and Cloud Data Engineer.

Workik AI supports a wide range of PySpark development and data engineering tasks, including:

Code Generation:

Create ETL pipelines, DataFrame transformations, and MLlib workflows automatically from context.

Debugging Assistance:

Identify logic or performance bottlenecks, suggest partitioning, and optimize Spark configurations.

Data Management:

Generate code for schema validation, data cleaning, and loading datasets into Delta Lake or Hive tables.

SQL and Query Automation:

Build Spark SQL queries, joins, and aggregations based on schema or dataset inputs.

Machine Learning:

Generate MLlib pipelines for feature engineering, model training, and evaluation.

Performance Optimization:

Refactor jobs for memory efficiency, caching, and shuffle minimization.

Testing and Validation:

Auto-generate unit tests for PySpark transformations using pytest or chispa.

Workflow Automation:

Integrate PySpark jobs with Airflow DAGs or Workik pipelines for scheduling and monitoring.

Documentation:

Produce code summaries, schema documentation, and job-level metadata for team collaboration.

© Workik Inc. 2026 All rights reserved.