Join our community to see how developers are using Workik AI every day.

Supported AI models on Workik

GPT 5.2 Codex, GPT 5.2, GPT 5.1 Codex, GPT 5.1, GPT 5 Mini, GPT 5

Gemini 3.1 Pro, Gemini 3 Flash, Gemini 3 Pro, Gemini 2.5 Pro

Claude 4.6 Sonnet, Claude 4.5 Sonnet, Claude 4.5 Haiku, Claude 4 Sonnet

DeepSeek Reasoner, DeepSeek Chat, DeepSeek R1 (High)

Grok 4.1 Fast, Grok 4, Grok Code Fast 1

Model availability may vary based on your Workik plan.

Features

Generate Chain Instantly

Use AI to automatically generate and configure LangChain chains, prompts, and agents from minimal context or instructions.

Integrate API Seamlessly

Produce LangChain-ready API calls for OpenAI, Anthropic, or Hugging Face with authentication and request schemas autogenerated.

Automate RAG Pipelines

Build complete retrieval-augmented generation flows with embedding, retrieval, and context injection handled automatically.

Evaluate and Debug with AI

AI adds tracing, logging, and evaluation hooks compatible with LangSmith for better debugging and monitoring.

How it works

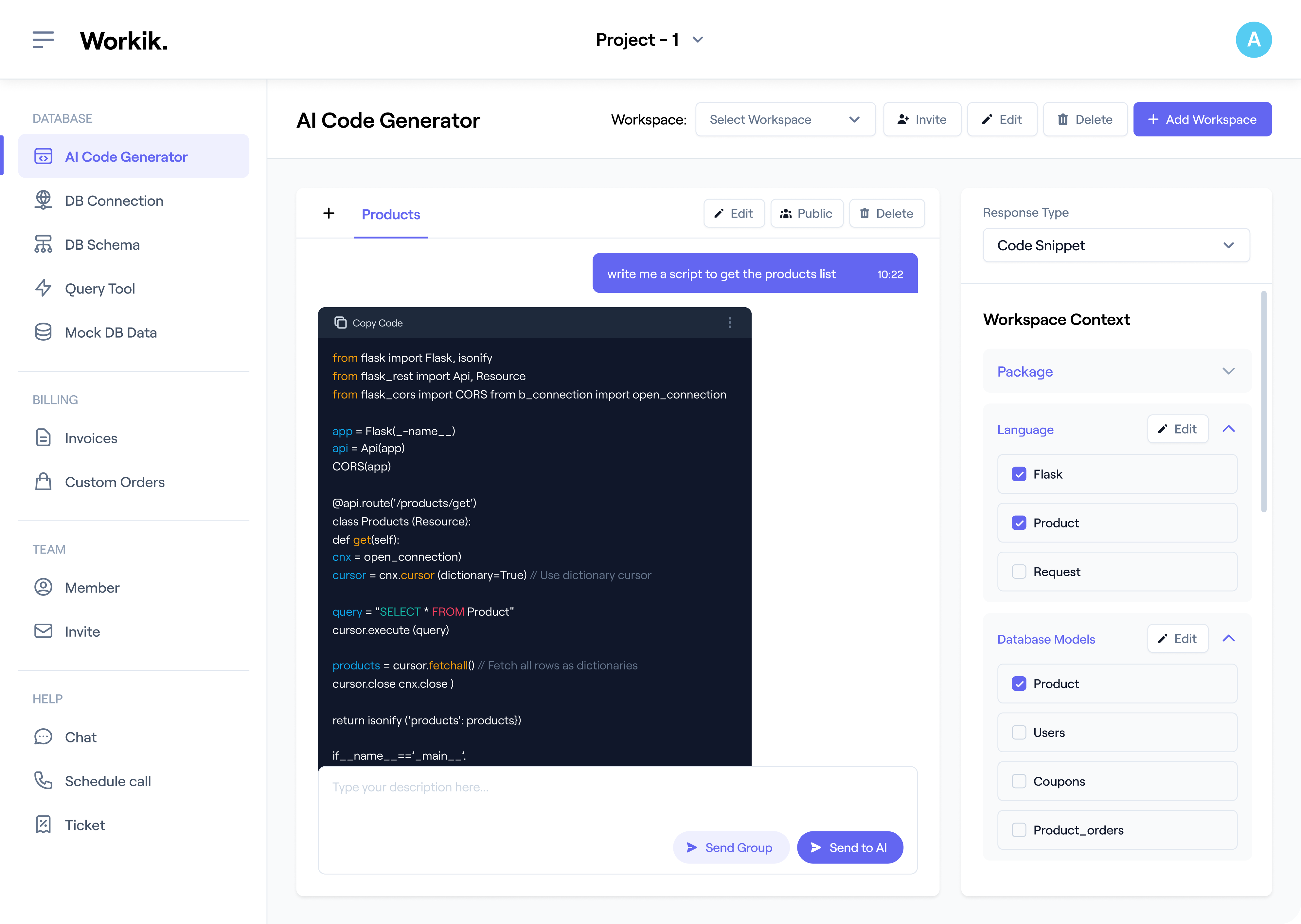

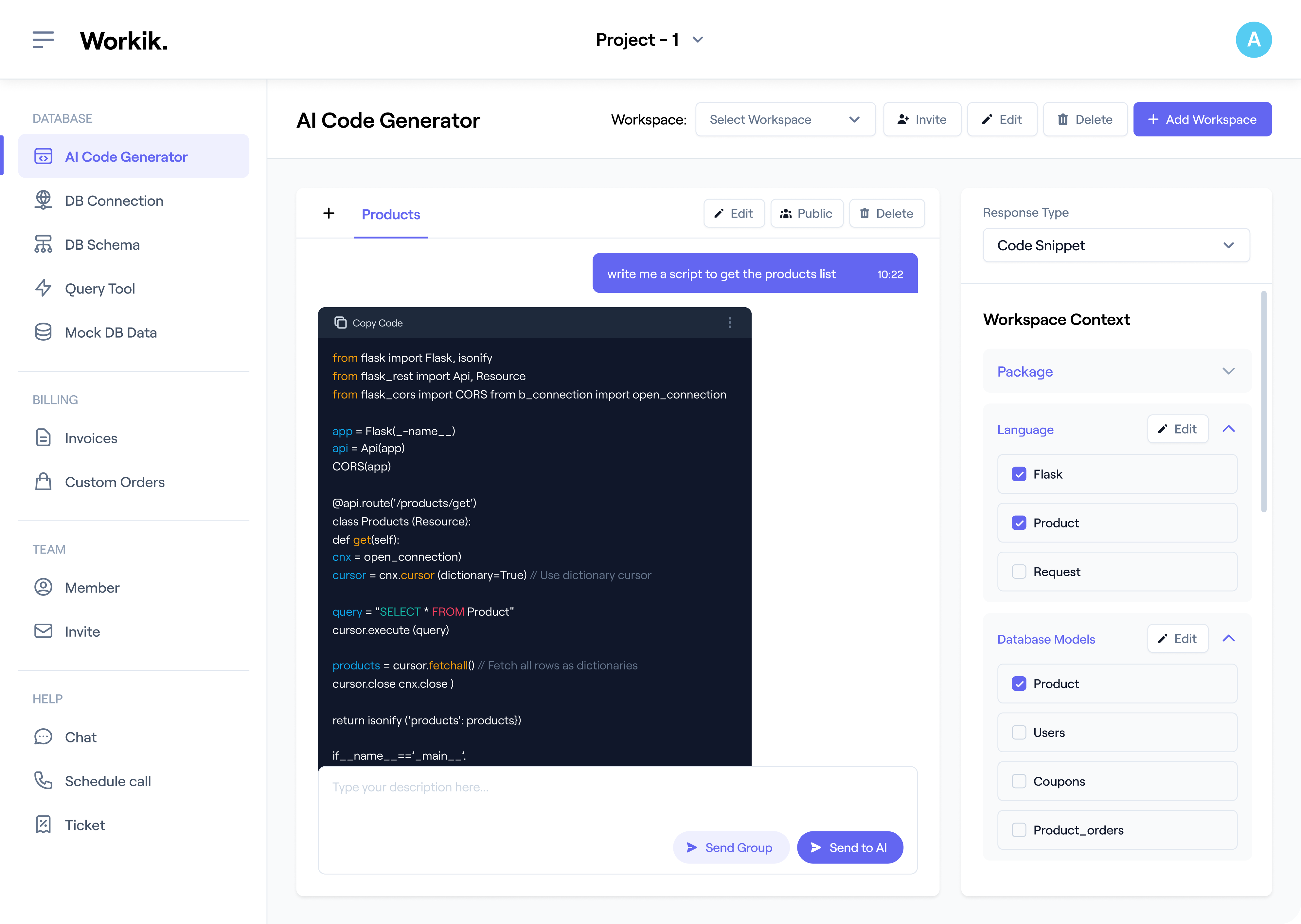

Create your free workspace in seconds using Google or manual sign-up. Begin generating LangChain code instantly.

Connect GitHub, GitLab, or Bitbucket to import LangChain files or add API blueprints and dynamic context for tailored AI output.

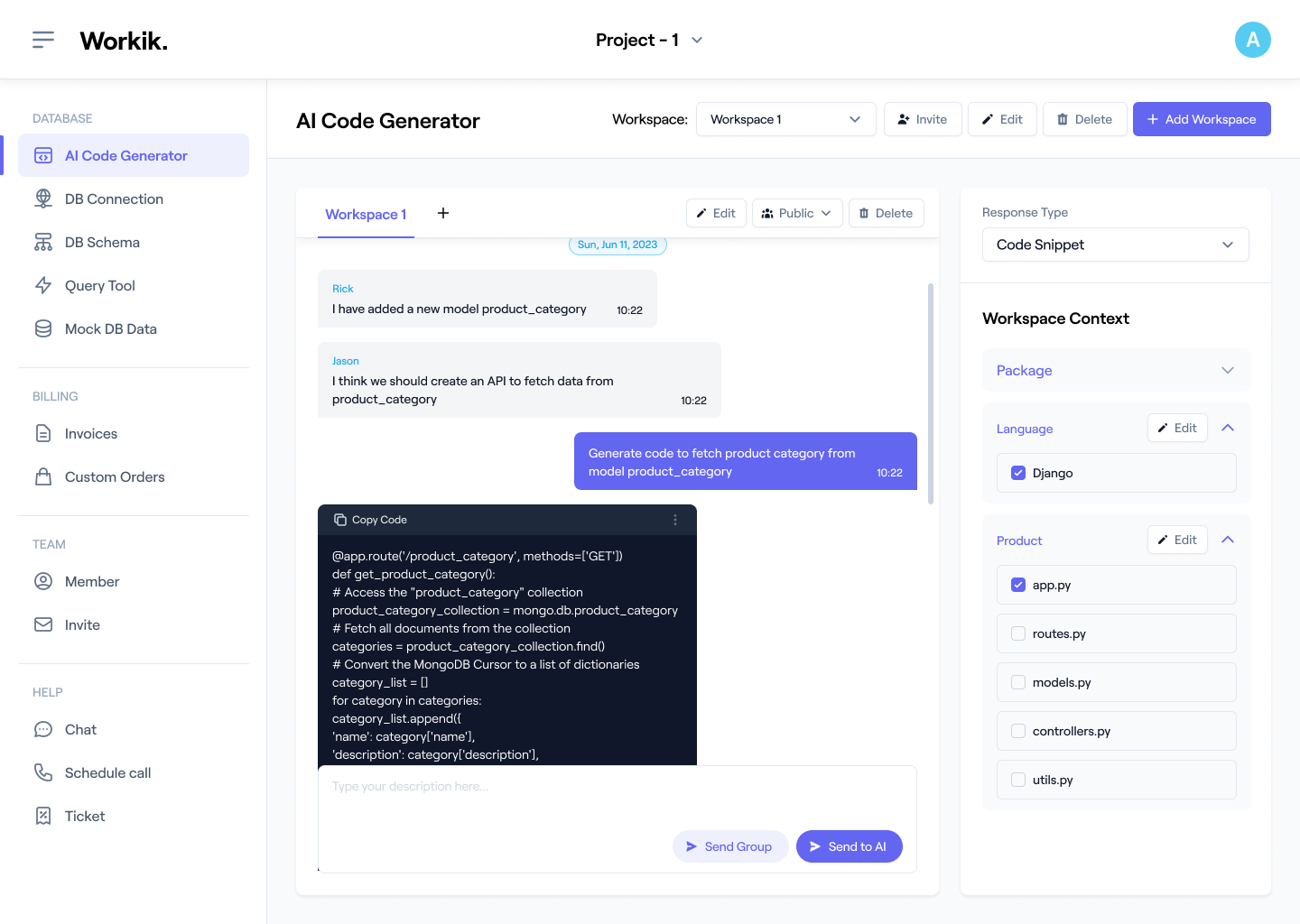

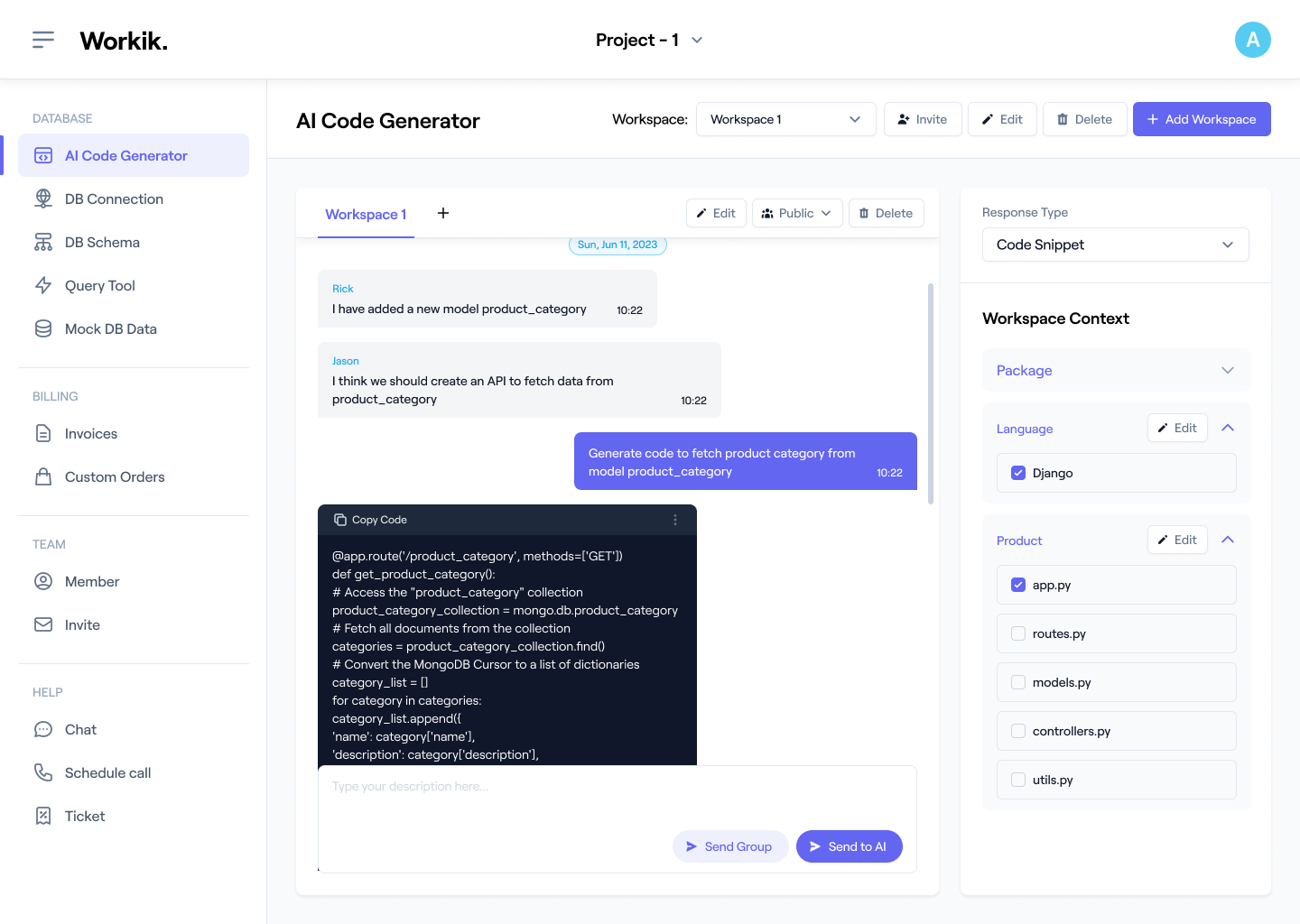

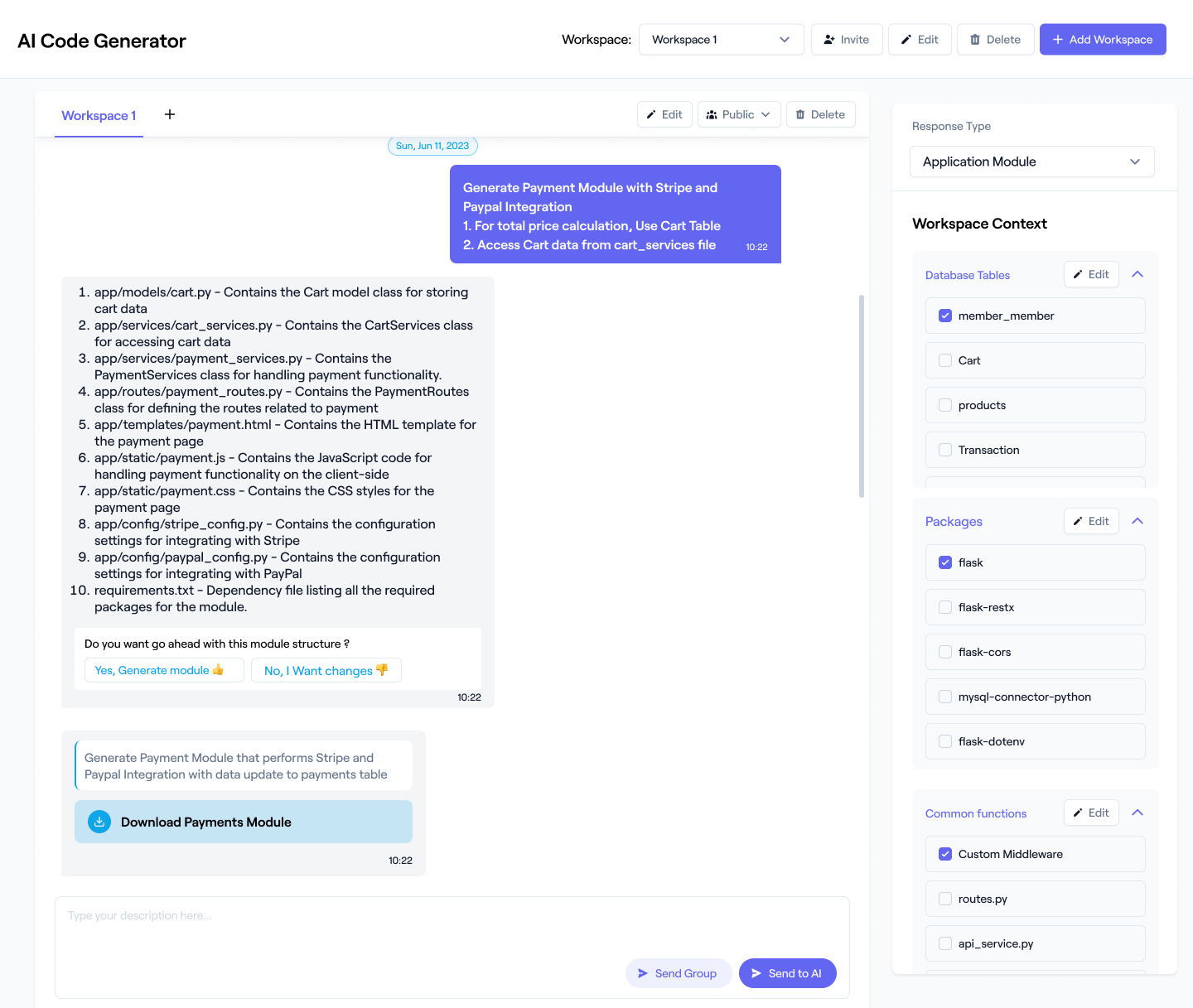

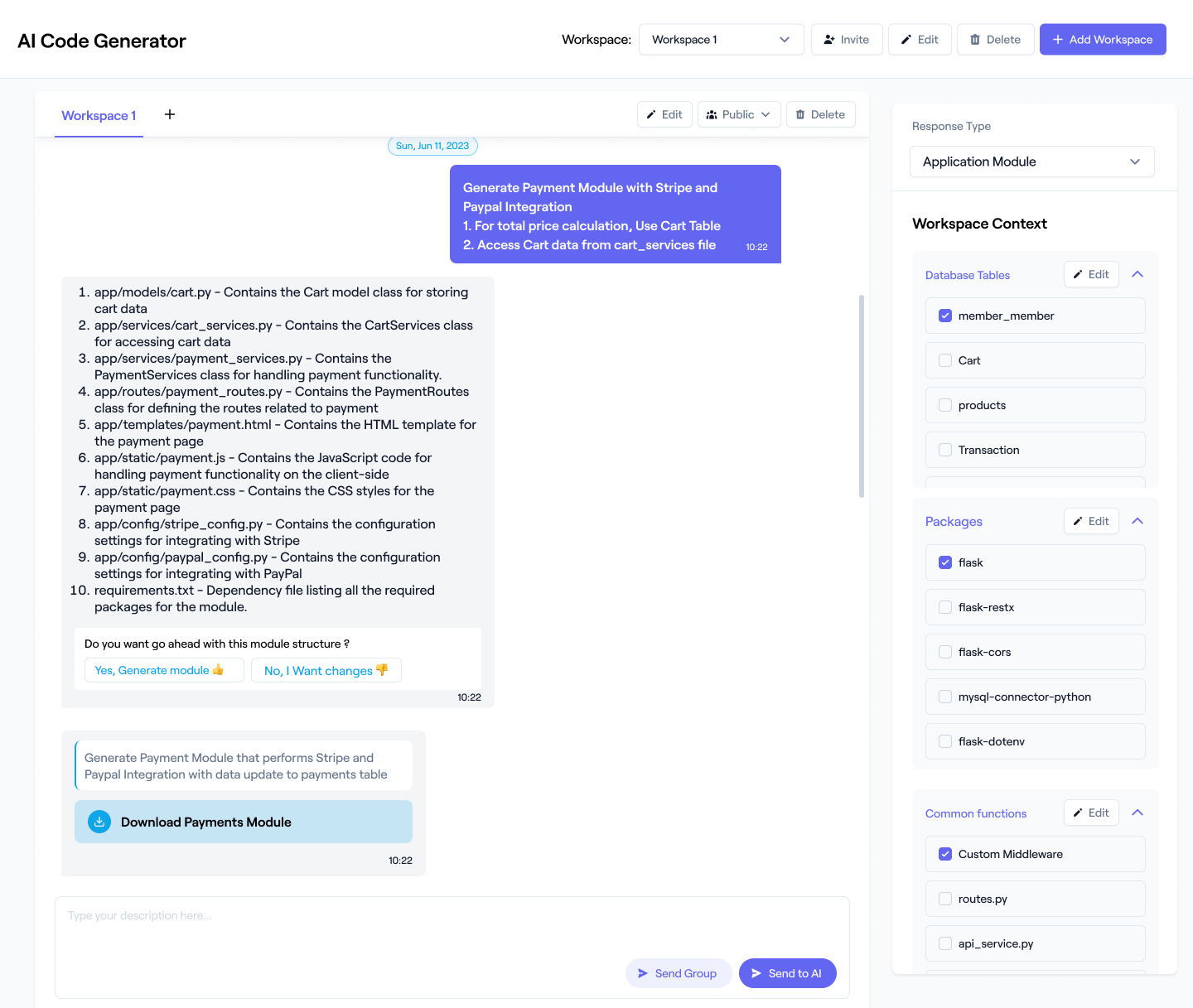

Use AI to build, debug, and optimize LangChain chains, agents, and more. Generate production-ready code tailored to your workflow.

Invite teammates to the same workspace and co-edit LangChain workflows. Set up pipelines to automate testing and code updates.

TESTIMONIALS

Real Stories, Real Results with Workik

"The Workik AI assistant helped me debug and refine multi-agent workflows effortlessly. It just gets how LangChain logic fits together."

Leah Brooks

AI Automation Engineer

"Workik AI helped me save hours on setting up RAG pipelines. The AI-generated chain templates are production-ready."

Tanisha Sharma

Machine Learning Engineer

"I built an entire LangChain demo in under an hour. Workik AI code suggestions are practical and align with real-world API structures."

Ivy Jones

Developer Advocate

What are the most popular use cases of the Workik LangChain Code Generator for developers?

Developers use the LangChain Code Generator for a wide range of AI-driven workflows, including but not limited to:

* Building RAG-based document assistants that query private knowledge bases and return context-aware responses.

* Creating LangChain-powered chatbots or customer support agents that connect to APIs and handle dynamic user queries.

* Generating multi-agent automation flows where AI agents collaborate to perform tasks like report generation or API calls.

* Scaffolding custom retrievers and vector store logic with integrations for Pinecone, Chroma, or Weaviate.

* Developing AI-driven API orchestration layers for apps built with FastAPI, Remix, or Next.js.

* Using AI to debug, optimize, or refactor LangChain code, ensuring efficient chain logic and memory handling.

* Automating evaluation and observability hooks through LangSmith for testing model accuracy and chain stability.

What context-setting options are available, and how do they help with LangChain projects?

Developers can optionally add context to help the AI generate more accurate, project-specific LangChain code. Workik supports:

* GitHub, GitLab, Bitbucket integrations – e.g., connect a repo containing your LangChain RAG pipeline or agent scripts.

* Codebase files – upload .py, .js, or .ts files containing LangChain chains, retrievers, or prompt templates for direct reference.

* API blueprints – import Postman or Swagger files for generating API-connected LangChain agents.

* Database schemas – add Pinecone, Weaviate, or Chroma DB structures for vector retrieval logic.

* Common functions – share embedding or text preprocessing utilities used in your LangChain setup.

* Dynamic context – describe workflows such as a chatbot with memory or multi-agent coordination.

* Framework details – specify dependencies like LangGraph, LangSmith, or FastAPI for aligned code generation.

Can I use the LangChain Code Generator to create agents for specific tasks?

Yes. You can prompt AI to generate specialized LangChain agents such as summarizers, data retrieval assistants, or automation bots. Workik’s AI automatically scaffolds tools, memory, and logic flow, making them deployable with minimal modification.

Can I collaborate with other developers inside the same LangChain workspace?

Yes. You can invite teammates to co-develop and review LangChain workflows in real time. Shared workspaces allow tracking AI-generated changes, testing modules collaboratively, and managing documentation together, which suits agile LangChain development teams.

How does automation work for LangChain projects in Workik?

Automation pipelines let developers schedule recurring actions like refreshing embeddings, reindexing data, or validating chain responses. For instance, you can schedule a nightly pipeline that refreshes your RAG pipeline's embeddings and index when new documents are added, keeping LangChain results current and consistent without manual work.
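As a rough illustration of the nightly refresh idea, the sketch below uses content hashes to decide which documents need re-embedding. It is not Workik's pipeline implementation: `plan_refresh`, `run_refresh`, and the `embed_and_store` callback are hypothetical names, and a real setup would call an embedding model and a vector store where the callback is invoked.

```python
import hashlib


def content_hash(text: str) -> str:
    """Return a stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_refresh(documents: dict[str, str], known_hashes: dict[str, str]):
    """Work out which document ids changed and which disappeared.

    documents: id -> current text
    known_hashes: id -> hash recorded by the previous run
    """
    to_embed = sorted(
        doc_id
        for doc_id, text in documents.items()
        if known_hashes.get(doc_id) != content_hash(text)
    )
    to_delete = sorted(set(known_hashes) - set(documents))
    return to_embed, to_delete


def run_refresh(documents, known_hashes, embed_and_store):
    """Re-embed new or changed documents and return the updated hash record.

    embed_and_store is a hypothetical callback (doc_id, text) -> None.
    Ids in to_delete are returned so the caller can remove them from its store.
    """
    to_embed, to_delete = plan_refresh(documents, known_hashes)
    for doc_id in to_embed:
        embed_and_store(doc_id, documents[doc_id])
    updated = {k: v for k, v in known_hashes.items() if k not in to_delete}
    updated.update({d: content_hash(documents[d]) for d in to_embed})
    return updated, to_embed, to_delete
```

A scheduler (cron, a CI job, or a hosted pipeline) would call `run_refresh` once per night and persist the returned hash record, so unchanged documents are skipped on the next run.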

Which frameworks and environments does the LangChain Code Generator support?

The AI supports both Python and JavaScript/TypeScript with frameworks like FastAPI, Remix, and Next.js. You can generate LangChain code compatible with local setups (FAISS, Chroma) or hosted services (Pinecone, Weaviate, LangSmith). All outputs are modular for easy integration into existing applications.

How does AI help with LangChain project maintenance and optimization?

AI assistance helps developers review logs, detect bottlenecks, and optimize retrieval or prompt structures. It can flag redundant embeddings, suggest caching improvements, or restructure chains for better token efficiency. These insights make LangChain pipelines faster, cleaner, and more maintainable over time.
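One way to picture "flagging redundant embeddings" is a near-duplicate check over stored vectors. The sketch below is an assumption about how such a check could work, not a description of Workik's analysis: `find_redundant` and the 0.98 threshold are illustrative choices, and the pairwise comparison is quadratic, so it only suits small collections.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_redundant(vectors: dict[str, list[float]], threshold: float = 0.98):
    """Return (kept_id, redundant_id, similarity) for near-duplicate vectors.

    Ids are visited in sorted order; the first vector of each near-duplicate
    group is kept and later ones are flagged against it.
    """
    kept, flagged = [], []
    for doc_id in sorted(vectors):
        for kept_id in kept:
            sim = cosine(vectors[doc_id], vectors[kept_id])
            if sim >= threshold:
                flagged.append((kept_id, doc_id, sim))
                break
        else:
            # No sufficiently similar vector seen so far: keep this one.
            kept.append(doc_id)
    return flagged
```

Flagged ids are candidates for removal or merging; whether a 0.98 cutoff is appropriate depends on the embedding model and the data.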


LangChain Question & Answer

LangChain is an open-source framework designed for building powerful applications that use large language models (LLMs). It enables developers to connect models to external data, tools, and APIs, creating intelligent workflows known as chains and agents. LangChain offers components for prompt engineering, memory management, tool integration, and multi-step reasoning.

Popular frameworks and libraries used in LangChain development include:

* Model Providers: OpenAI, Anthropic (Claude), Hugging Face Transformers, Cohere

* Vector Databases: Pinecone, Weaviate, Chroma, FAISS

* Workflow and Orchestration: LangGraph, LangSmith, LangServe

* Backend Frameworks: FastAPI, Flask, Next.js, Remix

* Data and Retrieval: LlamaIndex, Elasticsearch, PostgreSQL

* Visualization and Prototyping: Streamlit, Gradio

* Testing and Evaluation: LangSmith, pytest, unit test frameworks

* Authentication and API Tools: OAuth, Postman, Swagger

Popular use cases of LangChain include:

* Retrieval-Augmented Generation (RAG): Build intelligent knowledge assistants that query private datasets or documentation.

* Chatbots and Conversational Agents: Create contextual AI assistants that integrate with APIs or business logic.

* Multi-Agent Systems: Develop autonomous AI agents that collaborate to perform tasks like research, code writing, or workflow automation.

* Data Analysis and Summarization: Generate structured reports or summaries from unstructured data sources.

* API Orchestration: Use LLMs to plan and execute API calls dynamically based on user intent.

* Custom Tooling: Build AI-powered developer utilities for refactoring, testing, and documentation.

* Enterprise AI Integration: Connect LLMs with existing data systems, CRMs, or dashboards securely and efficiently.

Career opportunities and technical roles for LangChain professionals include LLM Application Engineer, AI Software Developer, LangChain Backend Engineer, AI Automation Specialist, Agentic Workflow Architect, RAG System Developer, Vector Database Engineer, Full-Stack Developer (AI-integrated), and Prompt Engineering Specialist.

Workik AI assists developers across every phase of LangChain development:

* Code Generation: Generate LangChain chains, agents, retrievers, and tool integrations automatically.

* Debugging Assistance: Identify broken chains, resolve API errors, and optimize prompt logic.

* RAG Pipeline Setup: Create complete retrieval-augmented generation flows including embedding, retrieval, and context injection.

* API Integration: Connect with OpenAI, Hugging Face, or Anthropic APIs using secure authentication.

* Performance Optimization: Refactor agent logic, memory handling, and retriever configurations for efficiency.

* Testing and Evaluation: Generate LangSmith evaluation hooks and automated test cases for consistent performance.

* Deployment and Automation: Suggest FastAPI endpoints, Docker configurations, and pipeline automation for production-ready LangChain apps.
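For readers new to RAG, the three stages named above (embedding, retrieval, context injection) can be sketched in plain Python. This is a toy under stated assumptions, not LangChain code or Workik output: `embed` is a word-count stand-in for a real embedding model, the sample documents are made up, and the final prompt would normally be sent to an LLM.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, index: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Return the k indexed passages most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Context injection: place retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Illustrative documents; a real index would live in a vector store.
docs = [
    "Pinecone is a managed vector database.",
    "FastAPI is a Python web framework.",
    "FAISS runs similarity search locally.",
]
index = [(d, embed(d)) for d in docs]

question = "Which vector database is managed?"
prompt = build_prompt(question, retrieve(question, index, k=1))
print(prompt)
```

In a LangChain project the same roles are typically filled by an embedding model, a vector store retriever, and a prompt template, with the assembled prompt passed to a chat model.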

© Workik Inc. 2026 All rights reserved.