Join our community to see how developers are using Workik AI every day.

Features

Generate Optimized Kernels

AI can generate high-performance kernels tailored to your task and integrate library routines from cuBLAS or cuFFT for efficient computation.

Manage GPU Memory

AI handles memory allocation and data transfer patterns, using unified or pinned memory to reduce transfer latency.

Debug and Optimize Code

AI detects kernel bottlenecks and refines warp execution using insights from Nsight Compute for peak efficiency.

Simplify Parallel Programming

Use AI to generate boilerplate for reduction, prefix sum, and stencil patterns, optimizing thread and block configurations.

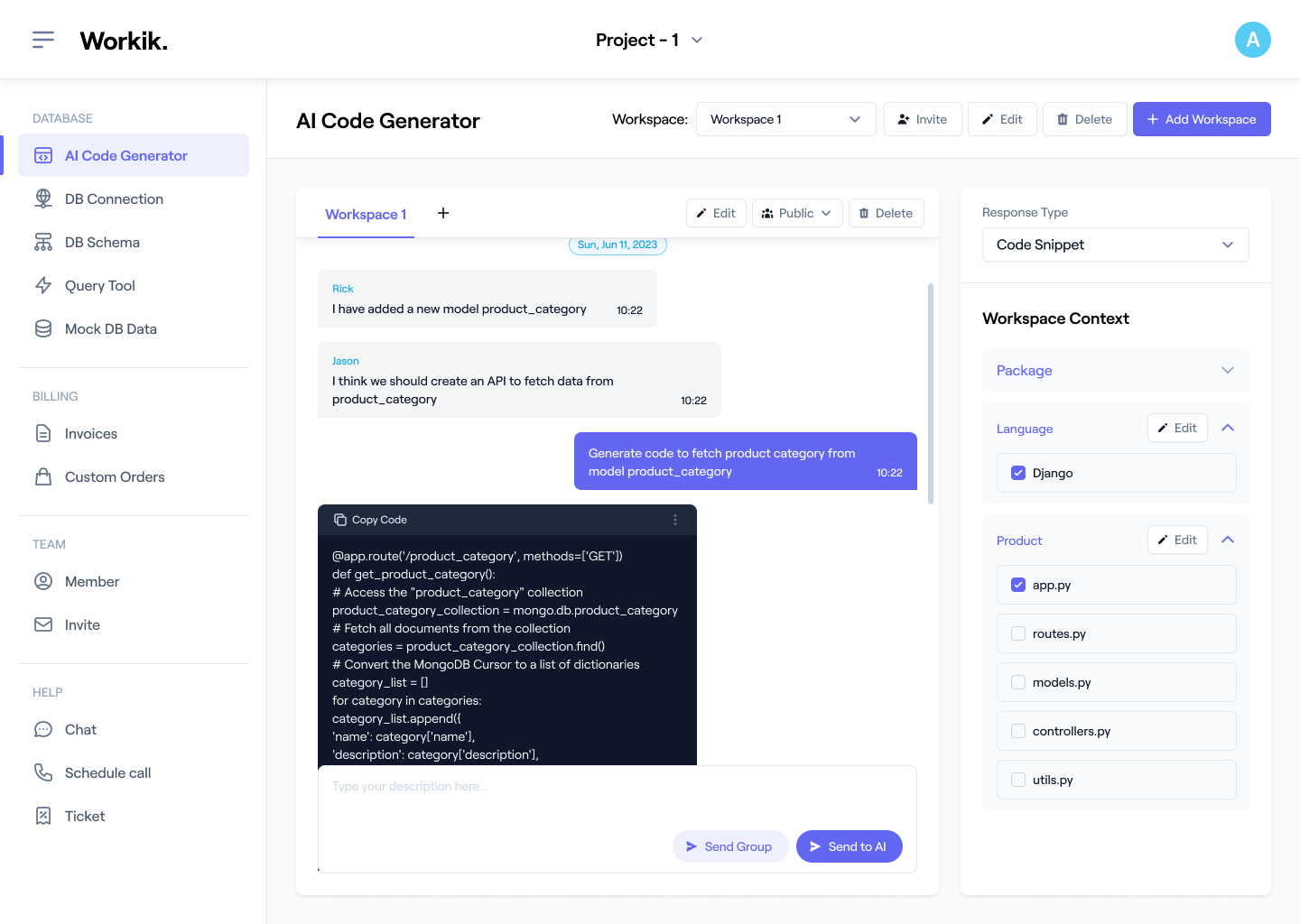

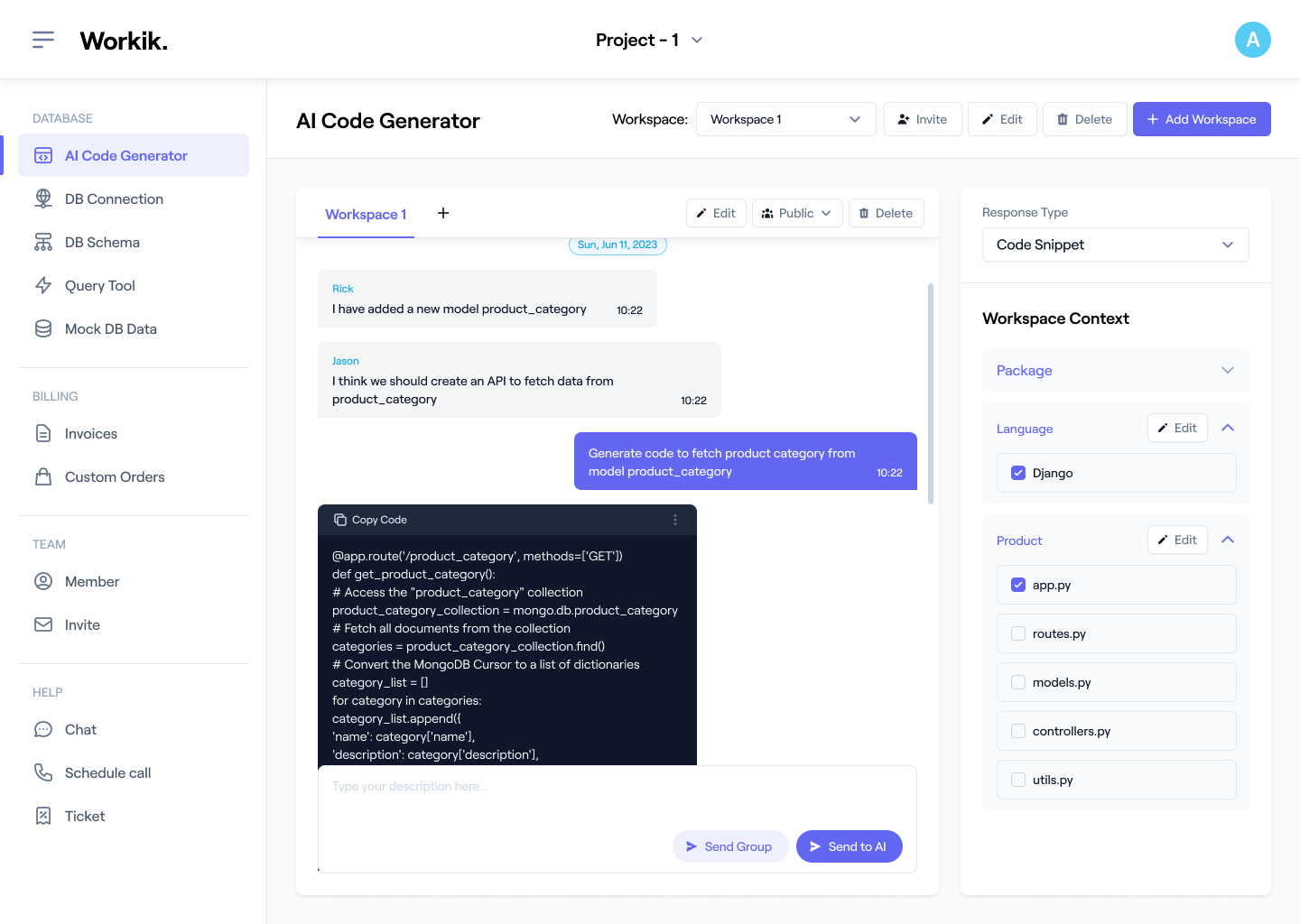

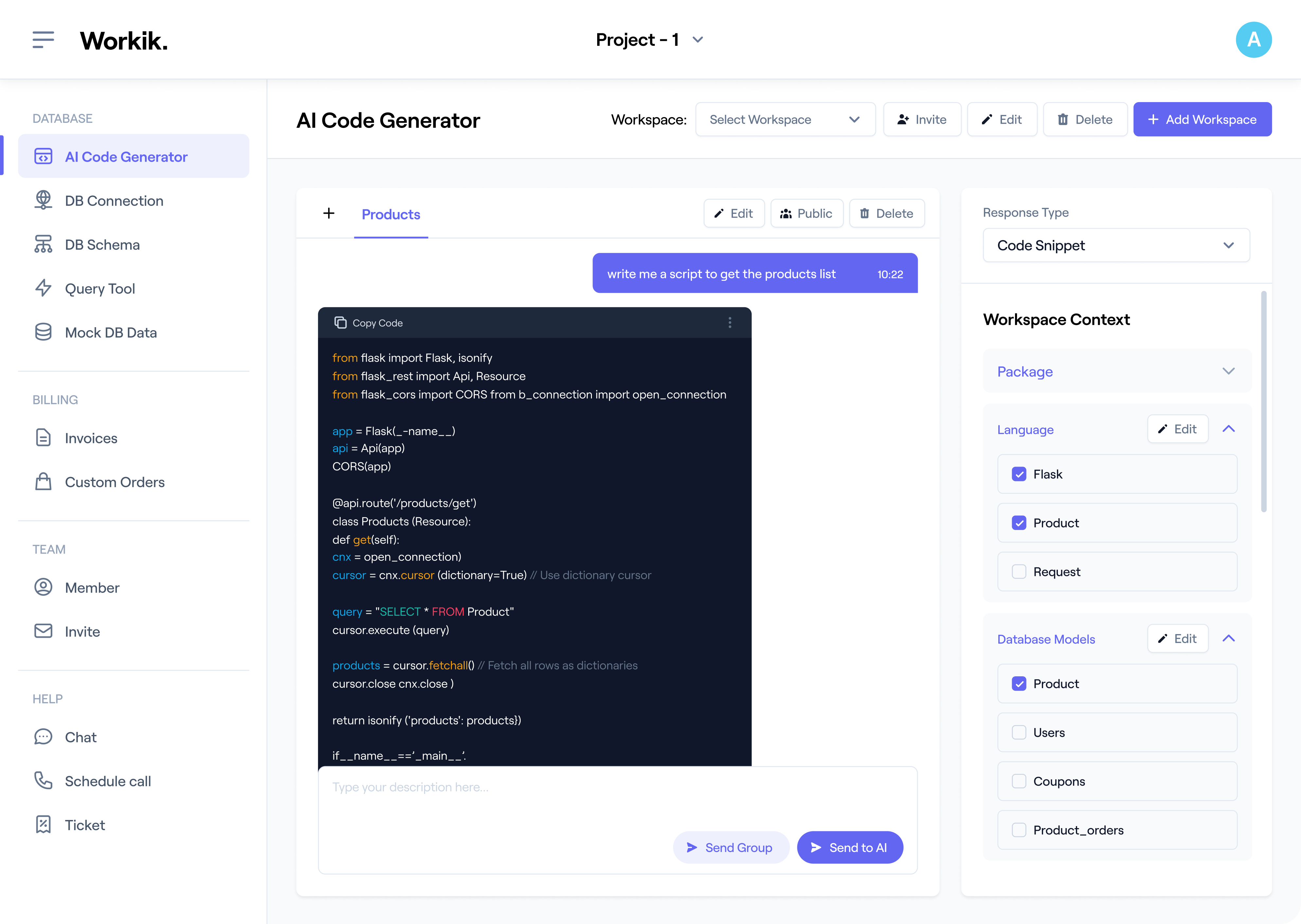

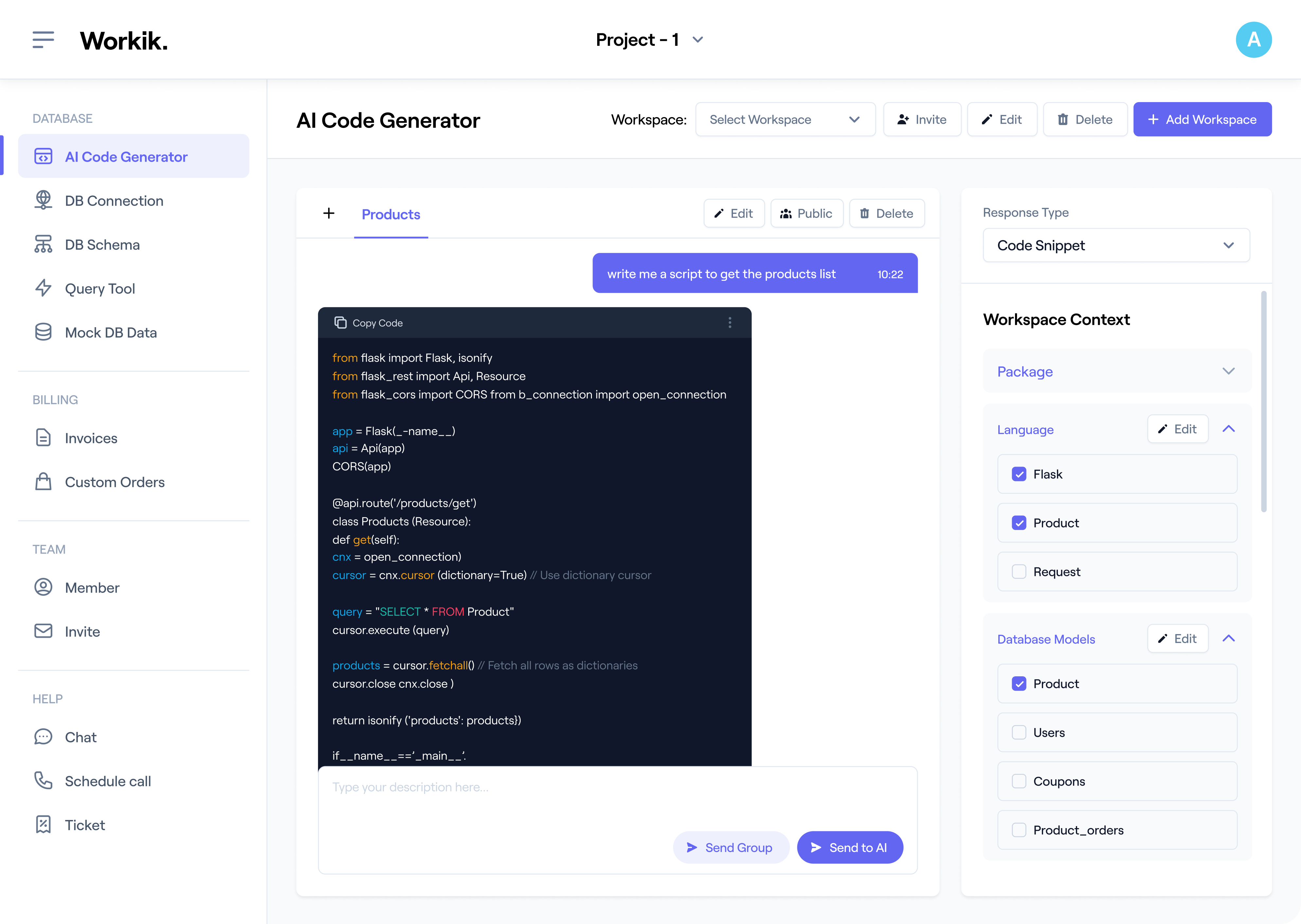

How it works

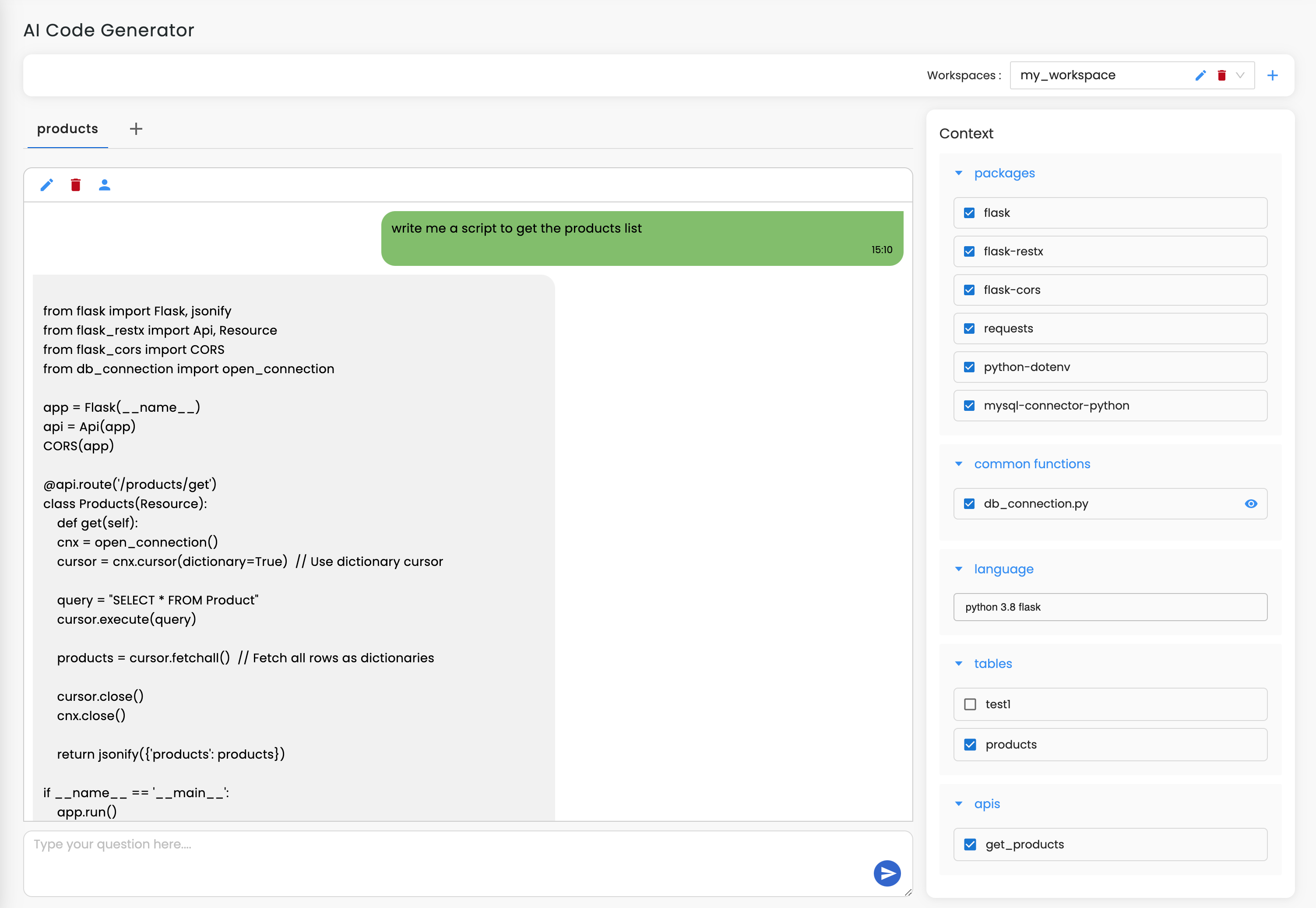

Create your Workik account using Google or enter your details manually to access AI-powered CUDA code generation tools.

Connect your GitHub, GitLab, or Bitbucket repositories to import your CUDA codebase. Add relevant libraries and specify frameworks for AI-driven kernel generation and optimization.

Use AI to generate CUDA kernels, optimize thread configurations, and identify bottlenecks like warp divergence or memory access conflicts. Get custom performance enhancements tailored to your GPU tasks.

Invite your team to review, edit, and expand your CUDA projects collaboratively in the shared Workik environment with AI-enhanced debugging and performance tuning.


TESTIMONIALS

Real Stories, Real Results with Workik

Workik AI made kernel optimization and debugging seamless. It’s a must-have!

Michael Chang

GPU Developer

Workik AI simplified CUDA coding, letting me focus on model building without GPU complexity.

Logan Chalamet

Junior ML Engineer

Workik AI automated CUDA kernel generation with precision, saving me hours on large-scale data tasks.

John Anderson

Data Scientist

What are the popular use cases of Workik AI-Powered CUDA Code Generator?

Popular CUDA code generation use cases of Workik AI for developers include, but are not limited to:

* Generate optimized CUDA kernels tailored for parallel computation tasks.

* Identify and resolve warp divergence, memory access conflicts, and unaligned memory accesses.

* Automate performance tuning for GPU-intensive operations like matrix multiplications and deep learning workloads.

* Debug CUDA code for kernel execution errors, race conditions, and out-of-bounds accesses.

* Streamline collaboration by producing clean, efficient CUDA code for team reviews and integration.

* Suggest context-aware improvements for thread and block configurations.

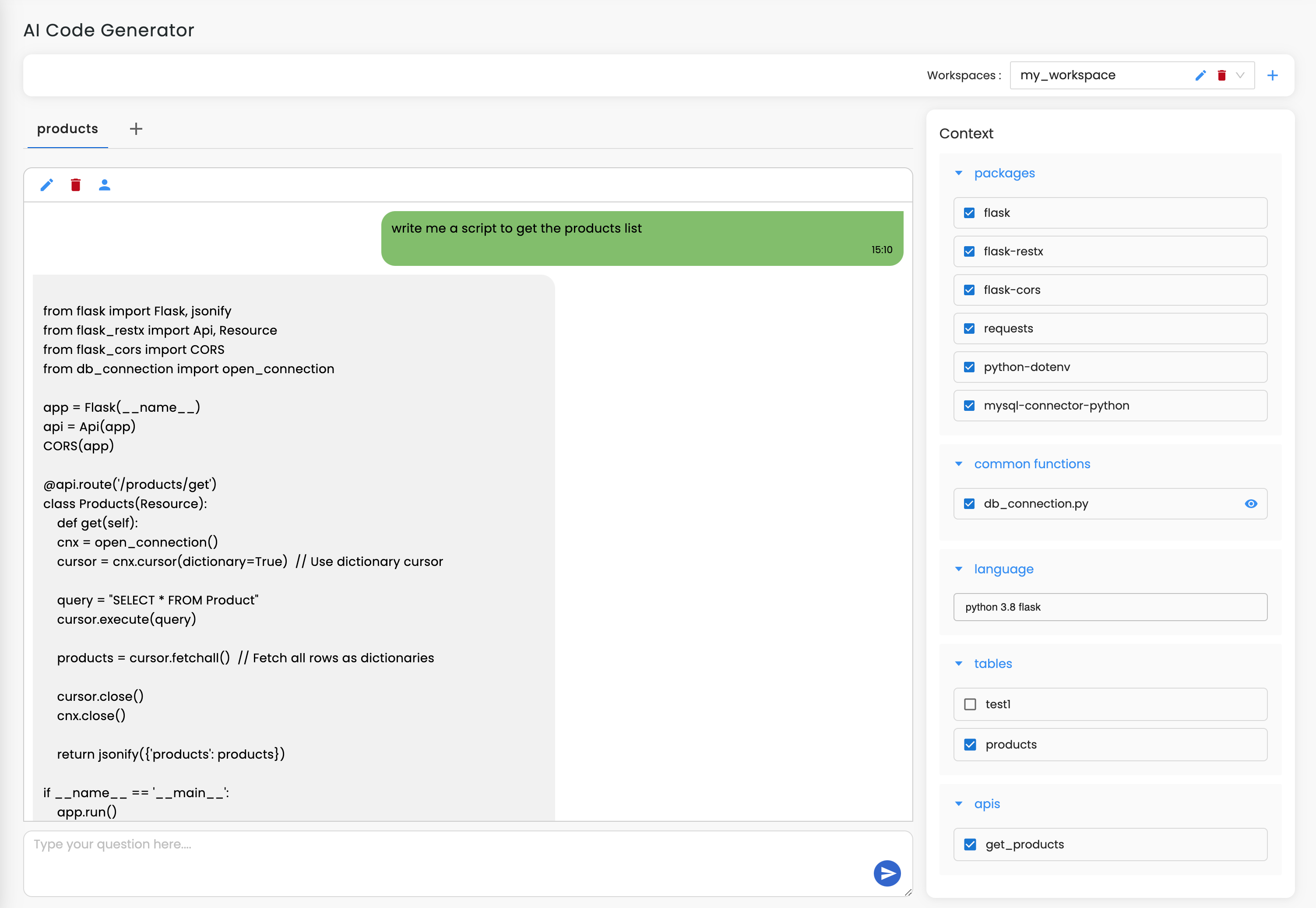

What kind of contexts can I add in Workik AI-Powered CUDA Code Generator?

Workik offers several context-setting options to tailor your CUDA code generation, allowing users to:

* Connect to GitHub, GitLab, or Bitbucket to integrate and analyze your CUDA codebase.

* Specify frameworks and libraries like cuBLAS, cuFFT, or Thrust for enhanced code generation.

* Add project-specific dependencies and APIs for custom CUDA kernel development.

* Provide GPU specifications such as architecture (e.g., Ampere, Volta) for targeted optimizations.

* Upload configuration files or dataset schemas to align CUDA operations.

How does Workik AI optimize CUDA kernel execution time?

Workik AI identifies inefficiencies in thread and block configurations, offering tailored solutions. For example, it adjusts shared memory usage in tasks like 3D image rendering or particle simulations, ensuring optimal GPU occupancy without manual profiling.
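To make the link between shared memory usage and occupancy concrete, here is a minimal host-side C++ sketch of a simplified occupancy estimate. It is not Workik's method and not an NVIDIA API: `SmLimits`, `resident_blocks`, and `occupancy` are hypothetical names, the per-SM limits are caller-supplied assumptions, and the model deliberately ignores register pressure and allocation granularity.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical per-SM resource limits. Real values depend on the GPU
// architecture and should be queried at runtime or taken from vendor docs.
struct SmLimits {
    std::uint32_t max_threads;       // resident threads per SM
    std::uint32_t max_blocks;        // resident blocks per SM
    std::uint32_t shared_mem_bytes;  // shared memory available per SM
};

// Simplified estimate of how many blocks can be resident on one SM.
// Only thread count and shared memory are considered (assumption).
inline std::uint32_t resident_blocks(const SmLimits& sm,
                                     std::uint32_t threads_per_block,
                                     std::uint32_t shared_bytes_per_block) {
    assert(threads_per_block > 0);
    const std::uint32_t by_threads = sm.max_threads / threads_per_block;
    // A block that uses no shared memory is not limited by it.
    const std::uint32_t by_shared =
        shared_bytes_per_block == 0
            ? sm.max_blocks
            : sm.shared_mem_bytes / shared_bytes_per_block;
    return std::min(sm.max_blocks, std::min(by_threads, by_shared));
}

// Occupancy: resident threads divided by the SM's thread capacity.
inline double occupancy(const SmLimits& sm,
                        std::uint32_t threads_per_block,
                        std::uint32_t shared_bytes_per_block) {
    const std::uint32_t blocks =
        resident_blocks(sm, threads_per_block, shared_bytes_per_block);
    return static_cast<double>(blocks) * threads_per_block / sm.max_threads;
}
```

With the illustrative limits `SmLimits{2048, 32, 65536}`, a 256-thread block that uses 16 KiB of shared memory is limited to 4 resident blocks (50% occupancy), while halving its shared memory to 8 KiB allows 8 blocks (100%). For authoritative numbers on real hardware, the CUDA runtime's occupancy functions or Nsight Compute are the better source.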

Can Workik AI handle multi-GPU setups for CUDA development?

Yes, Workik AI can generate code that efficiently distributes workloads across devices. For instance, it can create kernels for parallel training of deep learning models on multiple GPUs, leveraging libraries like NCCL for inter-device communication.
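As a rough illustration of what distributing a workload across devices involves, the following host-side C++ sketch splits a range of elements into near-equal contiguous chunks, one per GPU. The names `Chunk` and `partition_work` are hypothetical, and the sketch covers only the partitioning step: selecting each device, launching kernels, and combining results (for example with NCCL) are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical description of the slice of work assigned to one device.
struct Chunk {
    std::size_t offset;  // index of the first element in the slice
    std::size_t count;   // number of elements in the slice
};

// Split n elements into contiguous chunks, one per device.
// The first (n % num_devices) devices receive one extra element,
// so chunk sizes differ by at most one.
inline std::vector<Chunk> partition_work(std::size_t n,
                                         std::size_t num_devices) {
    assert(num_devices > 0);
    std::vector<Chunk> chunks;
    chunks.reserve(num_devices);
    const std::size_t base = n / num_devices;
    const std::size_t extra = n % num_devices;
    std::size_t offset = 0;
    for (std::size_t d = 0; d < num_devices; ++d) {
        const std::size_t count = base + (d < extra ? 1 : 0);
        chunks.push_back({offset, count});
        offset += count;
    }
    return chunks;
}
```

For 10 elements on 3 devices this yields chunks of 4, 3, and 3 elements starting at offsets 0, 4, and 7. An even static split like this assumes the devices are roughly equal in speed; mixed hardware would likely need weighted or dynamic partitioning.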

How does Workik AI help in scaling CUDA code for larger datasets?

Workik AI can generate scalable kernels by adapting grid and block sizes based on input dataset dimensions. For example, when processing terabytes of data for climate simulations, the generated host code computes grid and block dimensions at launch time, so the same compiled kernel handles varying workloads without recompiling.
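Sizing a grid from the input dimensions usually comes down to a ceiling division on the host. The sketch below shows that arithmetic in plain C++, with no CUDA calls. `LaunchConfig` and `make_launch_config` are hypothetical names, and the default block size and grid cap are illustrative choices, not hardware limits.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical helper type: a 1D launch configuration.
struct LaunchConfig {
    std::uint32_t blocks;   // grid size (number of thread blocks)
    std::uint32_t threads;  // block size (threads per block)
};

// Compute a 1D launch configuration for n elements.
// max_blocks is an illustrative cap chosen by the caller. When the cap
// is hit, the kernel is assumed to use a grid-stride loop so that every
// element is still processed.
inline LaunchConfig make_launch_config(std::uint64_t n,
                                       std::uint32_t threads_per_block = 256,
                                       std::uint32_t max_blocks = 65535) {
    assert(threads_per_block > 0 && max_blocks > 0);
    // Ceiling division: enough blocks so that blocks * threads >= n.
    const std::uint64_t needed =
        (n + threads_per_block - 1) / threads_per_block;
    // Clamp to [1, max_blocks]; callers may prefer to skip the launch
    // entirely when n == 0.
    const std::uint64_t blocks = std::max<std::uint64_t>(
        1, std::min<std::uint64_t>(needed, max_blocks));
    return {static_cast<std::uint32_t>(blocks), threads_per_block};
}
```

For 1,000 elements the defaults give 4 blocks of 256 threads. For 2^40 elements the grid is capped at 65,535 blocks and the grid-stride loop covers the rest. Because these values are computed at runtime and passed at launch, the kernel binary itself does not change with the dataset size.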

Can Workik AI generate custom CUDA templates for niche applications?

Absolutely. Workik AI can generate reusable templates for domain-specific CUDA tasks like Monte Carlo simulations or fluid dynamics modeling. These templates are built to include best practices, such as memory prefetching and load balancing.

Can Workik AI integrate with existing CI/CD pipelines for CUDA projects?

Yes, Workik AI can automate CUDA code linting, testing, and optimization. For instance, it can analyze and optimize kernels during code pushes on platforms like GitLab or Jenkins, ensuring that each deployment is GPU-optimized and production-ready.


CUDA: Question and Answer

CUDA is a parallel computing platform and API developed by NVIDIA that enables developers to harness the power of GPUs for general-purpose computing tasks. It allows for the creation of high-performance applications in fields like scientific simulations, deep learning, and real-time rendering by providing a programming model that utilizes GPU cores for parallel processing.

Popular frameworks and libraries used with CUDA include:

* Deep Learning: TensorFlow, PyTorch

* GPU Libraries: cuBLAS, cuDNN, cuFFT

* Scientific Computing: Numba, Thrust

* Computer Vision: OpenCV

* Profiling Tools: Nsight Systems, Nsight Compute

* Development Environments: Visual Studio, JetBrains CLion

Popular use cases of CUDA include:

* Deep Learning Training: Accelerate neural network training with frameworks such as TensorFlow and PyTorch.

* Real-Time Video Processing: Use CUDA for tasks like frame interpolation or object detection in video streams.

* Scientific Simulations: Run large-scale simulations for fluid dynamics or climate modeling.

* Gaming Physics: Power physics engines for realistic gaming experiences.

* Big Data Analytics: Process and analyze terabytes of data using GPU-optimized kernels.

* Medical Imaging: Speed up 3D image reconstruction and processing in diagnostics.

Career opportunities and technical roles include GPU Software Engineer, Deep Learning Engineer, High-Performance Computing Specialist, Computer Vision Engineer, Data Scientist with GPU Expertise, Research Scientist in AI or Scientific Computing, and Game Developer for GPU-Accelerated Graphics.

Workik AI provides unparalleled assistance in CUDA development, including:

* Kernel Generation: Automates the creation of optimized CUDA kernels for specific tasks like matrix multiplication.

* Performance Tuning: Offers recommendations for thread and block configurations to maximize GPU utilization.

* Debugging Support: Detects and helps resolve issues like warp divergence or misaligned memory accesses.

* Scalability: Generates code adaptable to multi-GPU environments for tasks like distributed training.

* Integration: Works seamlessly with GitHub, GitLab, and Bitbucket to streamline code management.

* Code Optimization: Refines memory management strategies, such as shared memory usage, for performance gains.

* Documentation: Provides clear, inline documentation for CUDA code, making codebases easier to onboard onto and maintain.


© Workik Inc. 2026 All rights reserved.