Join our community to see how developers are using Workik AI every day.

Supported AI models on Workik

GPT 5.2, GPT 5.1 Codex, GPT 5.1, GPT 5 Mini, GPT 5, GPT 4.1 Mini

Gemini 3 Flash, Gemini 3 Pro, Gemini 2.5 Pro, Gemini 2.5 Flash

Claude 4.5 Sonnet, Claude 4.5 Haiku, Claude 4 Sonnet, Claude 3.5 Haiku

Deepseek Reasoner, Deepseek Chat, Deepseek R1 (High)

Grok 4.1 Fast, Grok 4, Grok Code Fast 1

Model availability may vary depending on your Workik plan.

Features

Efficient Data Parsing

Rapid parsing of HTML, JSON, XML; transform web data into structured formats.

Language & Framework Versatility

Generate crawling scripts in various languages (Python, JavaScript, etc.) and frameworks.

AI-Assisted Optimization

Use AI suggestions to optimize scripts for maximum efficiency and speed.

Data Extraction from Complex Websites

Easily navigate and extract from multi-layered websites with nested navigation.
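As a rough illustration of the parsing feature described above, the sketch below turns HTML, JSON, and XML input into plain Python dictionaries and lists using only the standard library. The names `TitleAndLinks`, `parse_html`, `parse_json`, and `parse_xml` are hypothetical and chosen for this example; this is not Workik's generated output, only one way such a script might look.

```python
import json
import xml.etree.ElementTree as ET
from html.parser import HTMLParser


class TitleAndLinks(HTMLParser):
    """Collect the page title and every href found in <a> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:  # ignore anchors without an href
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def parse_html(text):
    """Return the title and links of an HTML document as a dictionary."""
    parser = TitleAndLinks()
    parser.feed(text)
    return {"title": parser.title.strip(), "links": parser.links}


def parse_json(text):
    """Decode a JSON document into Python objects."""
    return json.loads(text)


def parse_xml(text):
    """Flatten each child of the root element into a {tag: text} dictionary."""
    root = ET.fromstring(text)
    return [{child.tag: (child.text or "").strip() for child in item} for item in root]
```

For pages with messy or deeply nested markup, a dedicated library such as Beautiful Soup is usually a more forgiving choice than the standard-library parser used here.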

How it works

Get started by signing up through Google or registering manually in seconds.

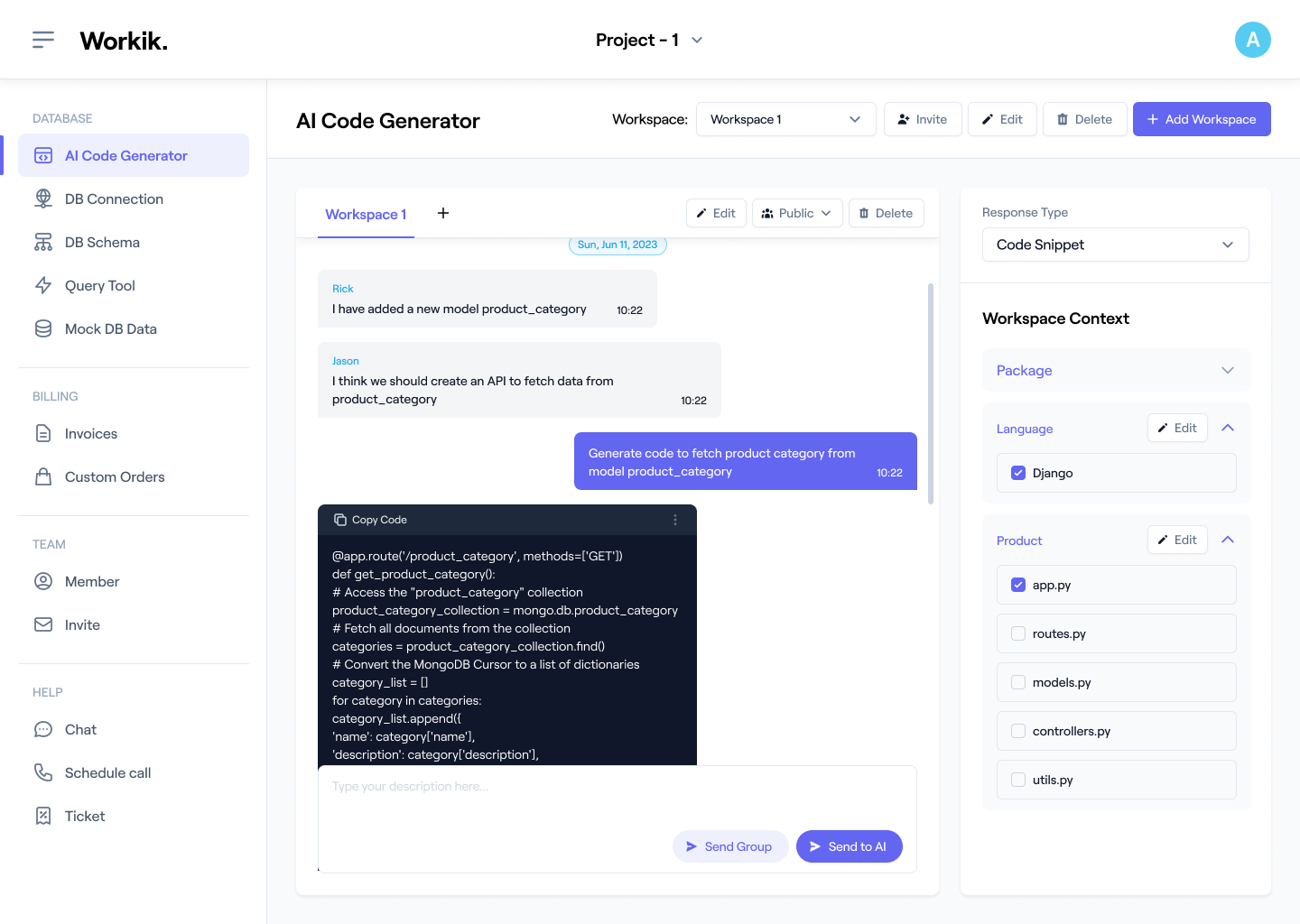

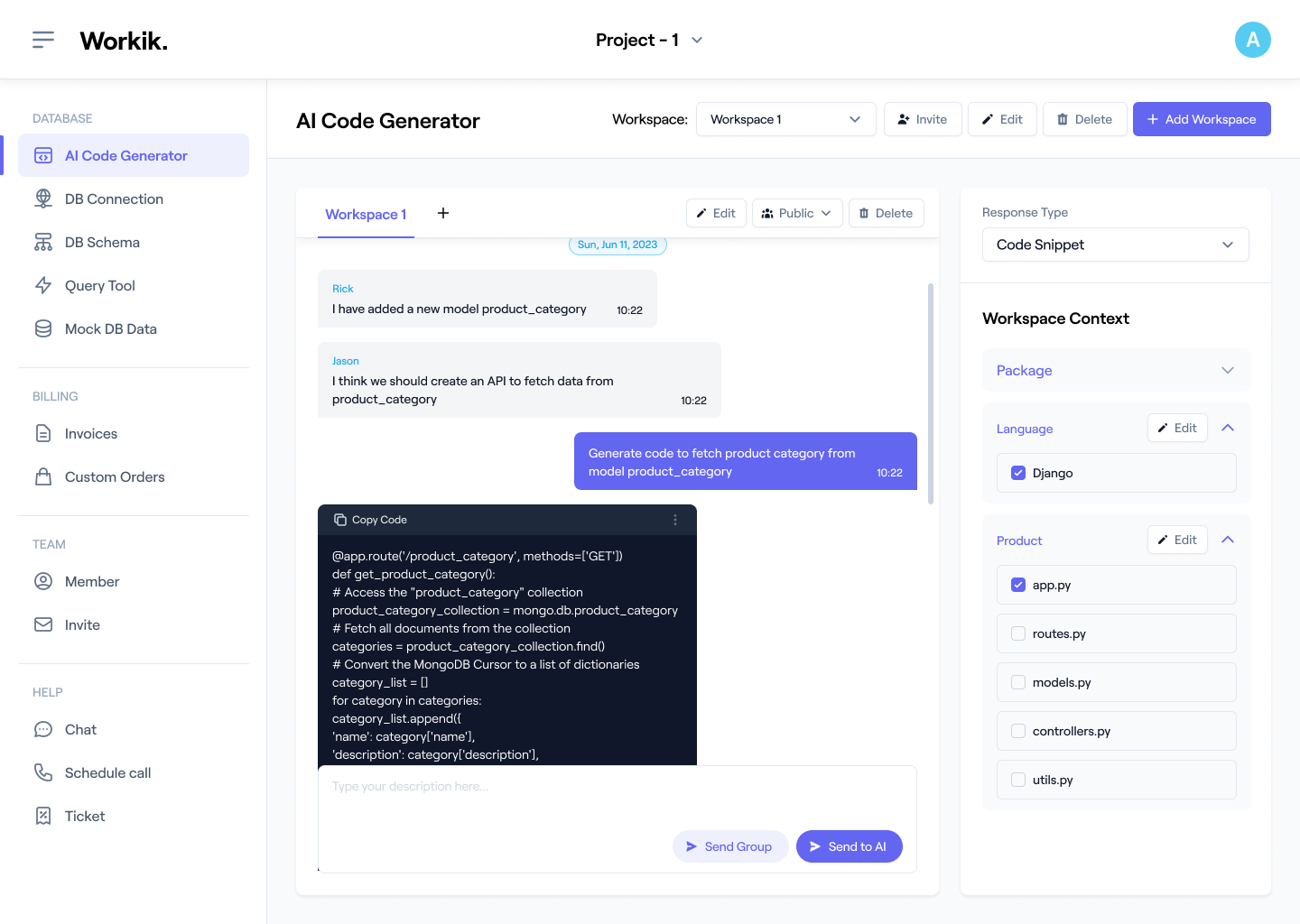

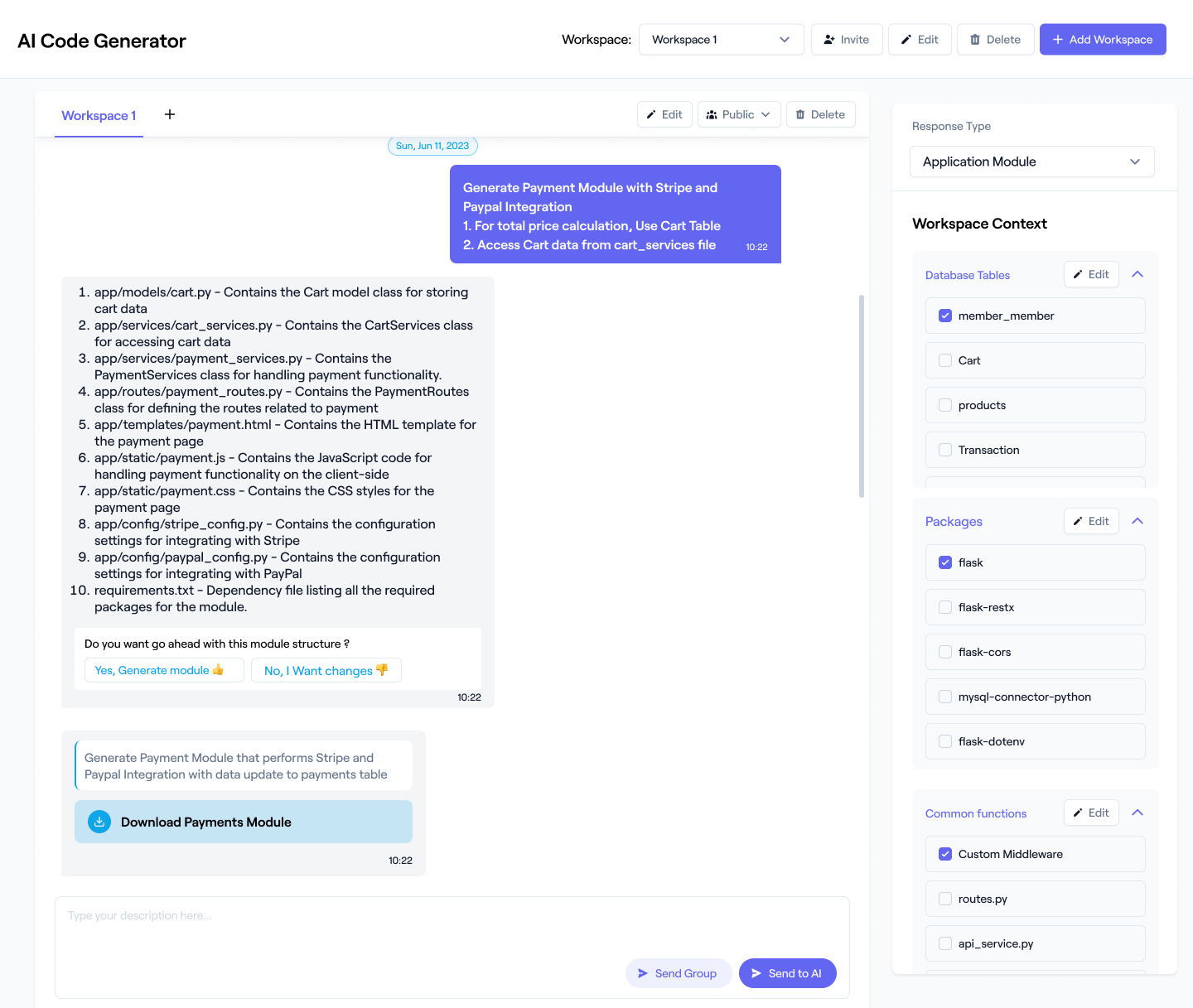

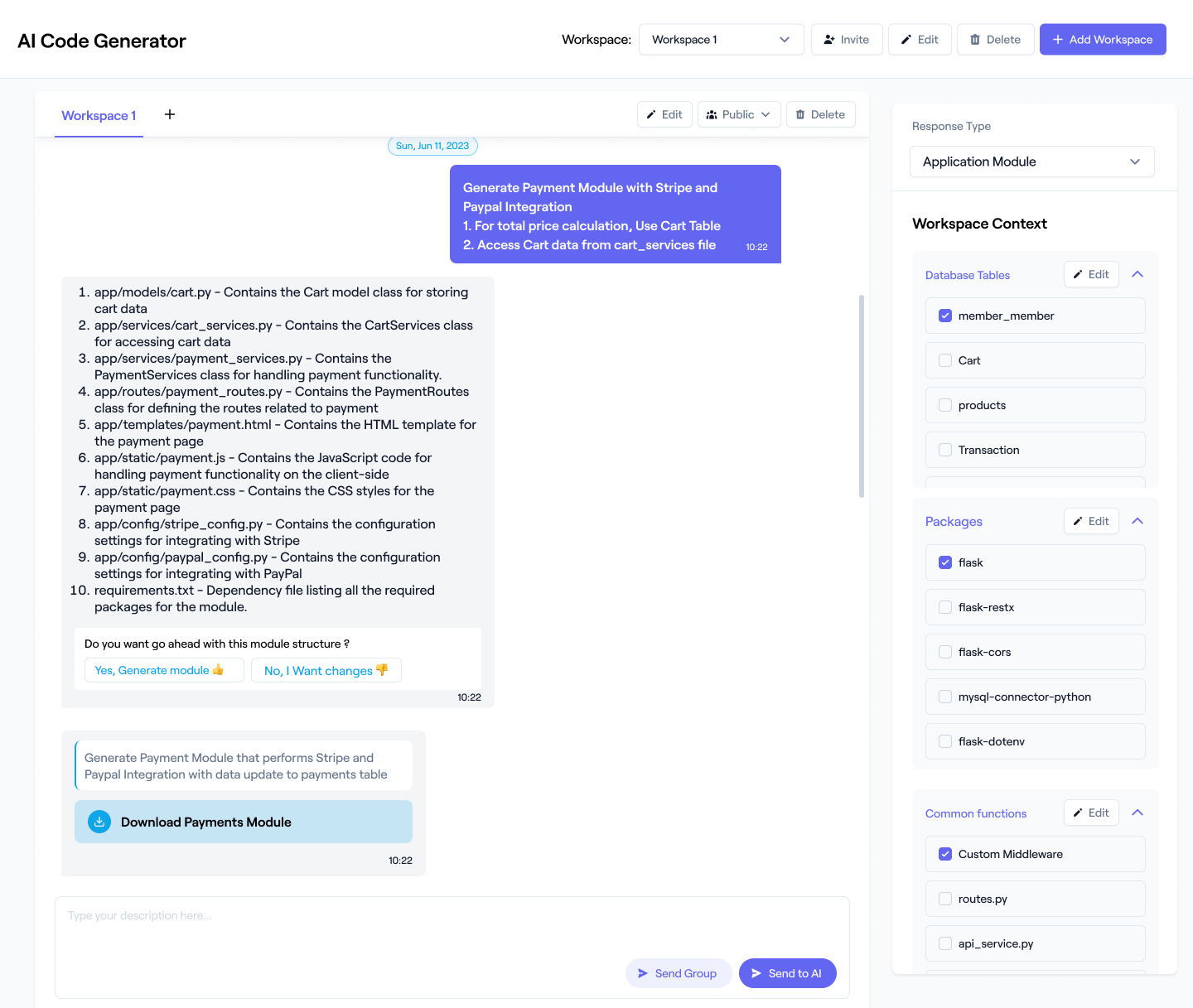

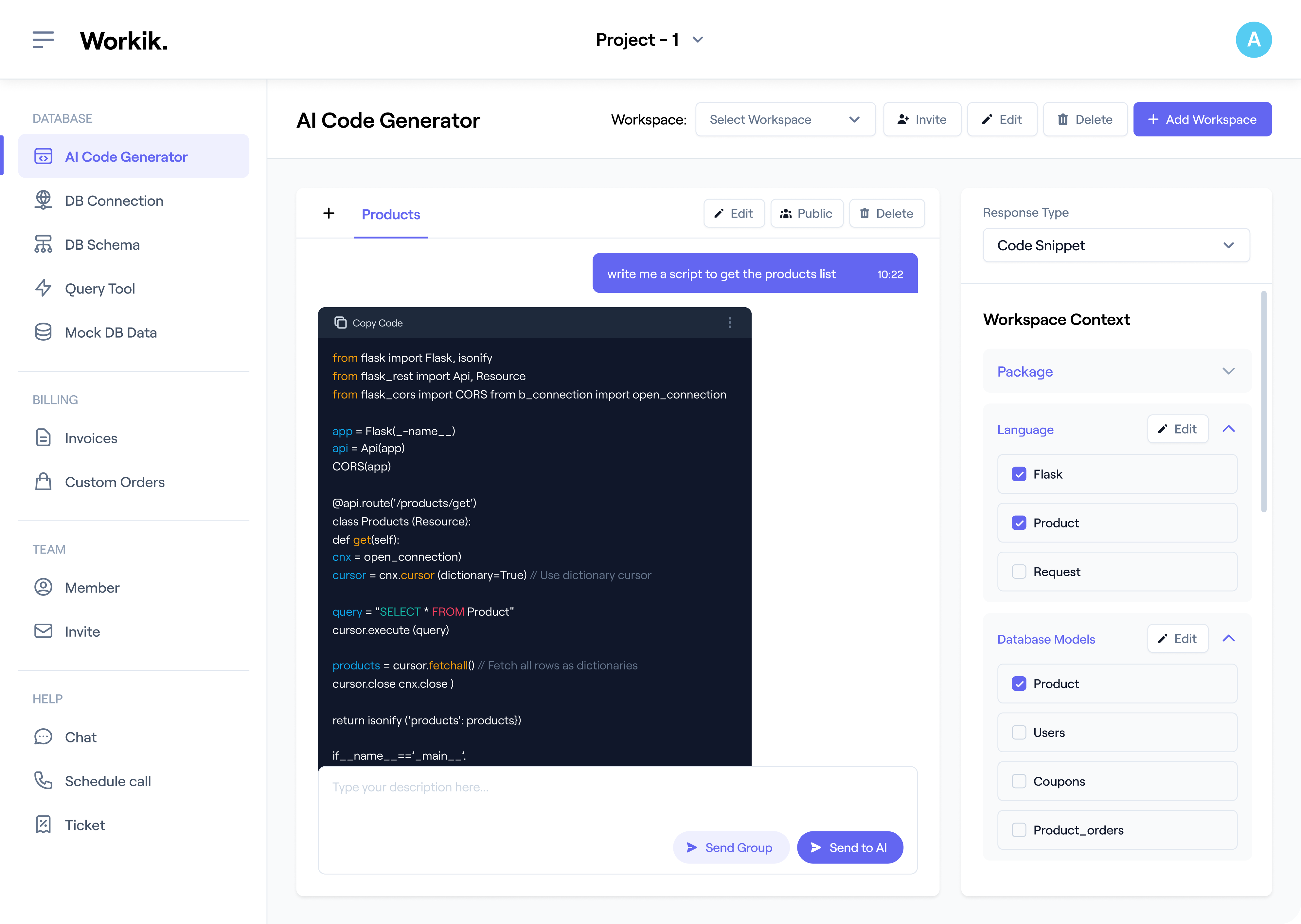

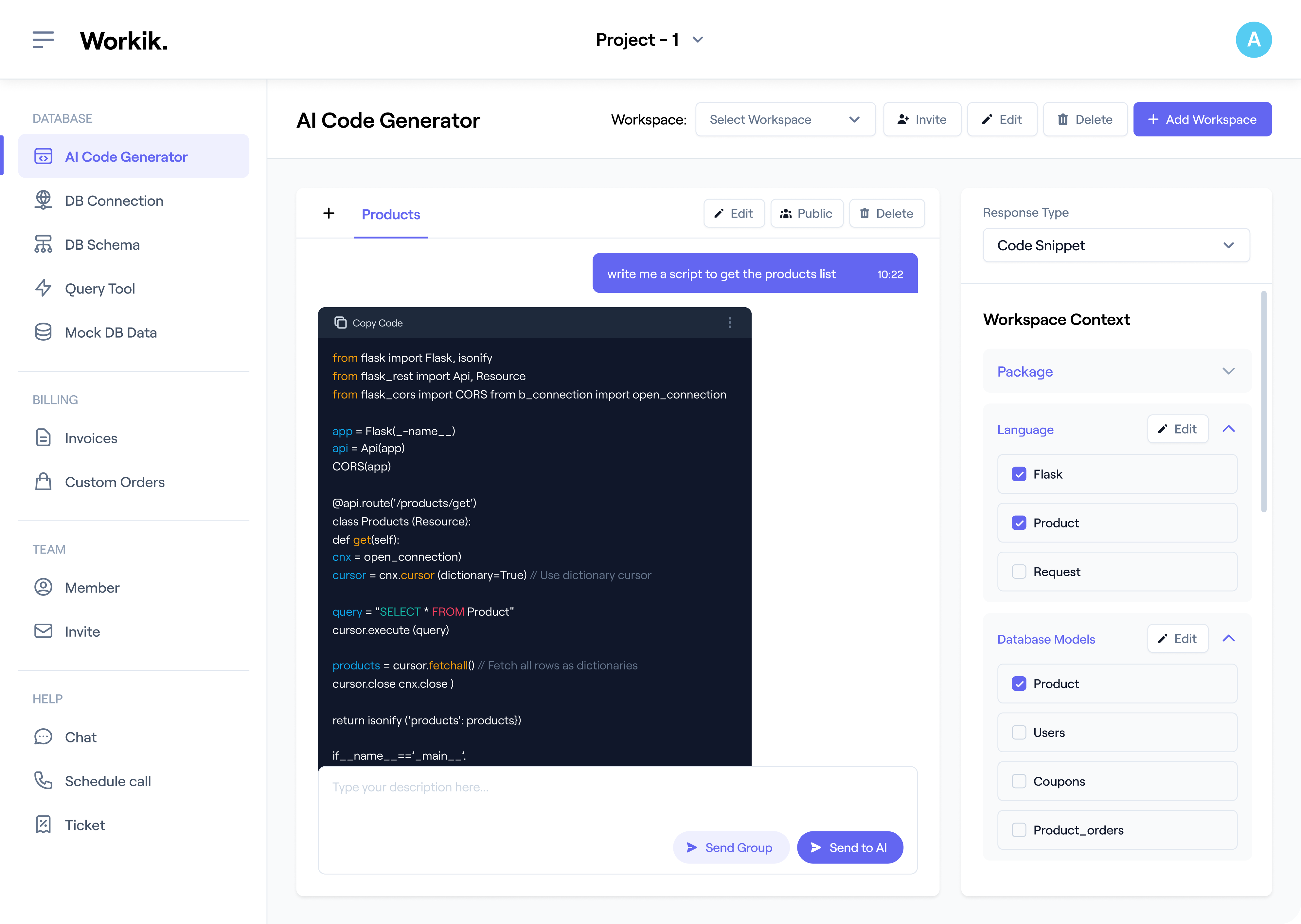

Set context for AI to personalize your output. Specify your stack, like Python with Scrapy, and upload database schemas or API blueprints.

Use AI assistance to create and optimize web crawling scripts for your needs.

Collaborate with your team on script development to boost productivity and solve crawler problems together.

Supercharge development

Try For Free

FEATURES

Bypass advanced web protections with anti-bot solutions.

Efficiently handle and process large-scale data sets.

Tailor scripts to specific site architectures with customizable crawler logic.

Achieve optimized script performance through AI-driven efficiency.

Scale web crawling operations with distributed crawling support.

FEATURES

Create web crawling scripts easily, no advanced coding required.

Access AI support for assistance with challenges.

Simplify API connectivity for easy handling of web data sources.

Learn web crawling effectively through AI insights.

Make complex data extraction tasks straightforward with AI help.

TESTIMONIALS

Real Stories, Real Results with Workik

Workik AI's assistance for scripts boosted efficiency and opened new analytical avenues. A true game changer for data teams!

Miles Bennett

Data Scientist

Workik excels in streamlining data harvesting. Its AI scripts integrate seamlessly with our databases, enhancing both speed and data quality.

Kim Tan

Database Administrator

Workik brings unmatched control and customization in web crawling. Our backend processes are now not just faster, but more efficient.

Sonia Mehra

Senior Backend Engineer

What are the popular use cases for Workik's web crawling scripts generator?

The popular use cases for Workik's web crawling scripts generator include:

1) Generate scripts for price and stock monitoring on e-commerce sites.

2) Create scripts to extract and analyze social media data for trend insights and marketing strategies.

3) Develop scripts for news data aggregation, aiding in content curation.

4) Craft scripts for competitor website analysis to track SEO updates and content changes.

5) Simplify scientific data extraction from research publications with AI-enhanced web crawling scripts.

How does the context-setting feature enhance script generation?

Adding context is optional but helps the AI generate personalized scripts. Users can include:

* Programming languages and frameworks (e.g., Python, Scrapy).

* Desired data formats (JSON, XML).

* Databases.

* Target website characteristics.

* Optional integration with GitHub, GitLab, or Bitbucket for tailored script generation.

What automation pipeline can I run on Workik for web crawling tasks?

Workik's automation pipeline supports web crawling tasks with intelligent script generation, automated data extraction, and real-time analytics. It uses AI to optimize crawling paths, manage data collection more efficiently, and adapt quickly to changes in web page structures.

Is Workik suitable for handling complex, JavaScript-heavy sites?

Absolutely. Workik is well-equipped to manage complex, JavaScript-heavy sites for web crawling. It utilizes advanced techniques like headless browsers and can execute JavaScript, ensuring that dynamically loaded content is effectively captured. This ability is especially valuable for scraping modern web applications and sites where content is loaded asynchronously or relies heavily on client-side scripts. Workik’s AI-driven scripts are designed to interact with these complex elements, making it possible to extract comprehensive data from such sites.

How does Workik support collaborative web crawling projects?

Workik enhances collaborative web crawling projects through its workspace feature. Teams can share and work on scripts, contexts, and data collectively within these workspaces. This facilitates synchronized development, sharing of updates, and efficient teamwork.

Can't find the answer you are looking for?

Request question

Please fill in the form below to submit your question.

Generate Code For Free

WEB CRAWL SCRIPT QUESTION & ANSWER

A web crawl script is a program designed to automatically extract data from websites. It is used for various purposes, including data extraction, SEO monitoring, price comparison, content aggregation, and research. By leveraging libraries such as Scrapy, Beautiful Soup, Puppeteer, and Jsoup, developers can build efficient web crawlers tailored to their specific needs.
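To make the definition above concrete, here is a minimal sketch of a crawl script in Python using only the standard library. It walks pages breadth-first and stays on the starting host. The names `LinkCollector`, `fetch_page`, and `crawl`, the `max_pages` limit, and the injectable `fetch` parameter are assumptions made for this example; a production crawler would typically use a framework such as Scrapy and would also honor `robots.txt` and rate limits.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.request import urlopen


class LinkCollector(HTMLParser):
    """Record the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def fetch_page(url):
    """Default fetcher: download a page body as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url, fetch=fetch_page, max_pages=20):
    """Breadth-first crawl restricted to the start URL's host.

    Returns the list of URLs that were fetched successfully, in visit order.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        try:
            body = fetch(url)
        except OSError:
            continue  # skip pages that fail to download
        visited.append(url)
        collector = LinkCollector()
        collector.feed(body)
        for href in collector.hrefs:
            # Resolve relative links and drop any #fragment.
            absolute = urljoin(url, href).split("#")[0]
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```

Passing the fetcher in as a parameter keeps the traversal logic separate from network access, so the crawl order can be checked against an in-memory set of pages before pointing it at a real site.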

Popular libraries used in Web Crawl Scripts are:

1. Python:

Scrapy, Beautiful Soup, Requests, Selenium

2. JavaScript/Node.js:

Puppeteer, Cheerio, Axios

3. Java:

Jsoup, Apache Nutch

4. Ruby:

Nokogiri, Wombat

5. PHP:

Goutte, cURL

6. Go:

Colly, Goquery

Popular use cases of Web Crawl Scripts include:

1. Data Extraction:

Collecting data from websites for analysis or processing.

2. SEO Monitoring:

Scraping websites to monitor SEO metrics and content changes.

3. Price Comparison:

Aggregating pricing information from multiple online retailers.

4. Content Aggregation:

Collecting articles, blog posts, or news from various sources.

5. Research:

Gathering information from various websites for research purposes.

Career opportunities and technical roles available for professionals in Web Crawl Scripts include Data Engineer, Web Developer, Data Scientist, Research Analyst, and more.

Workik AI provides broad assistance for Web Crawl Scripts, which includes:

1. Script Generation:

Produces web crawling scripts in various languages for rapid development.

2. Debugging:

Identifies and fixes issues in web crawl scripts with intelligent suggestions.

3. Optimization:

Recommends performance improvements and best practices for efficient web crawling.

4. Automation:

Automates the scheduling and execution of web crawling tasks.

5. Data Parsing:

Assists in parsing and processing the extracted data.

6. Error Handling:

Provides robust error handling and retry mechanisms for web crawlers.

7. Anti-Scraping Measures:

Suggests techniques to avoid detection and blocking by websites.
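The error handling and retry item in the list above can be sketched as a small wrapper with exponential backoff. The function name `fetch_with_retry` and its parameters (`attempts`, `base_delay`, `sleep`) are hypothetical and exist only for this illustration; the actual retry logic in a generated script may differ.

```python
import time


def fetch_with_retry(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on OSError with exponential backoff.

    The delay doubles after each failed attempt: base_delay, 2x, 4x, and so on.
    The final failure is re-raised so the caller can log or handle it.
    """
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)
```

Network errors raised by `urllib.request.urlopen` derive from `OSError`, so a fetcher built on it works with this wrapper unchanged. The `sleep` parameter is injectable so the backoff schedule can be verified without actually waiting.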

© Workik Inc. 2026 All rights reserved.