
Features

Generate Complex Queries

Use AI to create Snowflake queries for complex joins, subqueries, CTEs, aggregations, and more.

Optimize Data Loading

AI can craft efficient COPY INTO statements for rapid bulk loading from sources like Amazon S3, along with MERGE statements for upserting the loaded data.
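
MERGE combines update and insert in one statement: rows whose key already exists in the target are updated, and unmatched rows are inserted. The Python sketch below emulates that upsert logic on in-memory dictionaries so the semantics are easy to see. The `target`/`staged` tables and the `id`/`amount` columns are hypothetical, and the SQL in the comment is an illustrative shape of a Snowflake MERGE, not Workik output.

```python
from typing import Dict, List, Tuple

Row = Dict[str, object]

# Illustrative Snowflake statement this sketch mirrors (names are hypothetical):
#   MERGE INTO target t USING staged s ON t.id = s.id
#     WHEN MATCHED THEN UPDATE SET t.amount = s.amount
#     WHEN NOT MATCHED THEN INSERT (id, amount) VALUES (s.id, s.amount);


def merge_upsert(target: Dict[object, Row], staged: List[Row], key: str = "id") -> Tuple[int, int]:
    """Upsert staged rows into target (keyed by `key`); return (updated, inserted).

    Assumes each key appears at most once in `staged`; duplicate source keys
    behave differently in a real MERGE.
    """
    updated = inserted = 0
    for row in staged:
        k = row[key]
        if k in target:
            target[k].update(row)  # WHEN MATCHED THEN UPDATE
            updated += 1
        else:
            target[k] = dict(row)  # WHEN NOT MATCHED THEN INSERT
            inserted += 1
    return updated, inserted


if __name__ == "__main__":
    target = {1: {"id": 1, "amount": 10}, 2: {"id": 2, "amount": 20}}
    staged = [{"id": 2, "amount": 25}, {"id": 3, "amount": 30}]
    print(merge_upsert(target, staged))  # (1, 1)
    print(target[2]["amount"], target[3]["amount"])  # 25 30
```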

Boost Query Performance

AI can generate optimized SQL queries, refining CLUSTER BY and PARTITION BY clauses to lower compute costs.

Craft Analytics-Ready Queries

AI enhances analytical queries to take advantage of Snowflake's parallel execution and partition pruning for faster results.

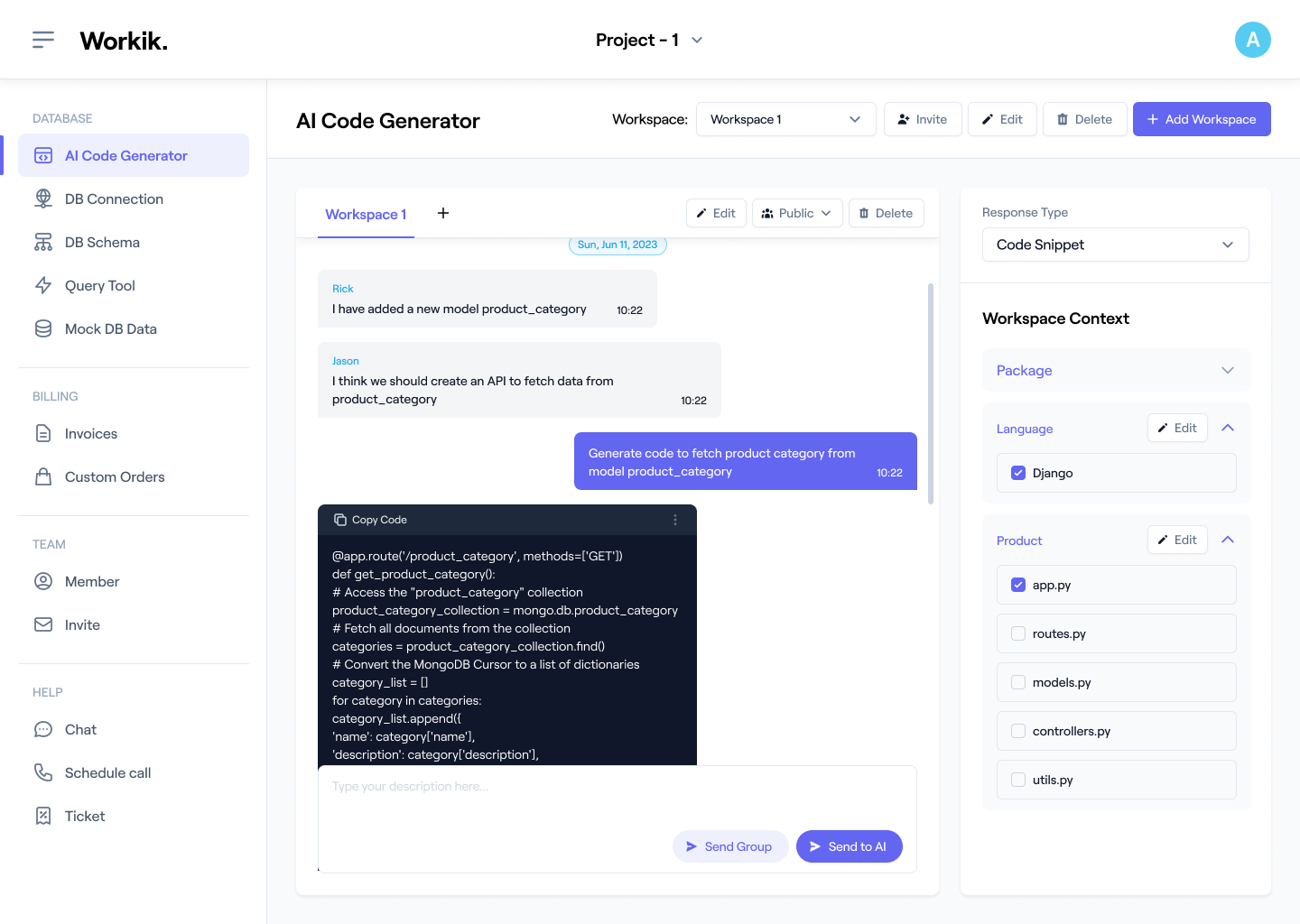

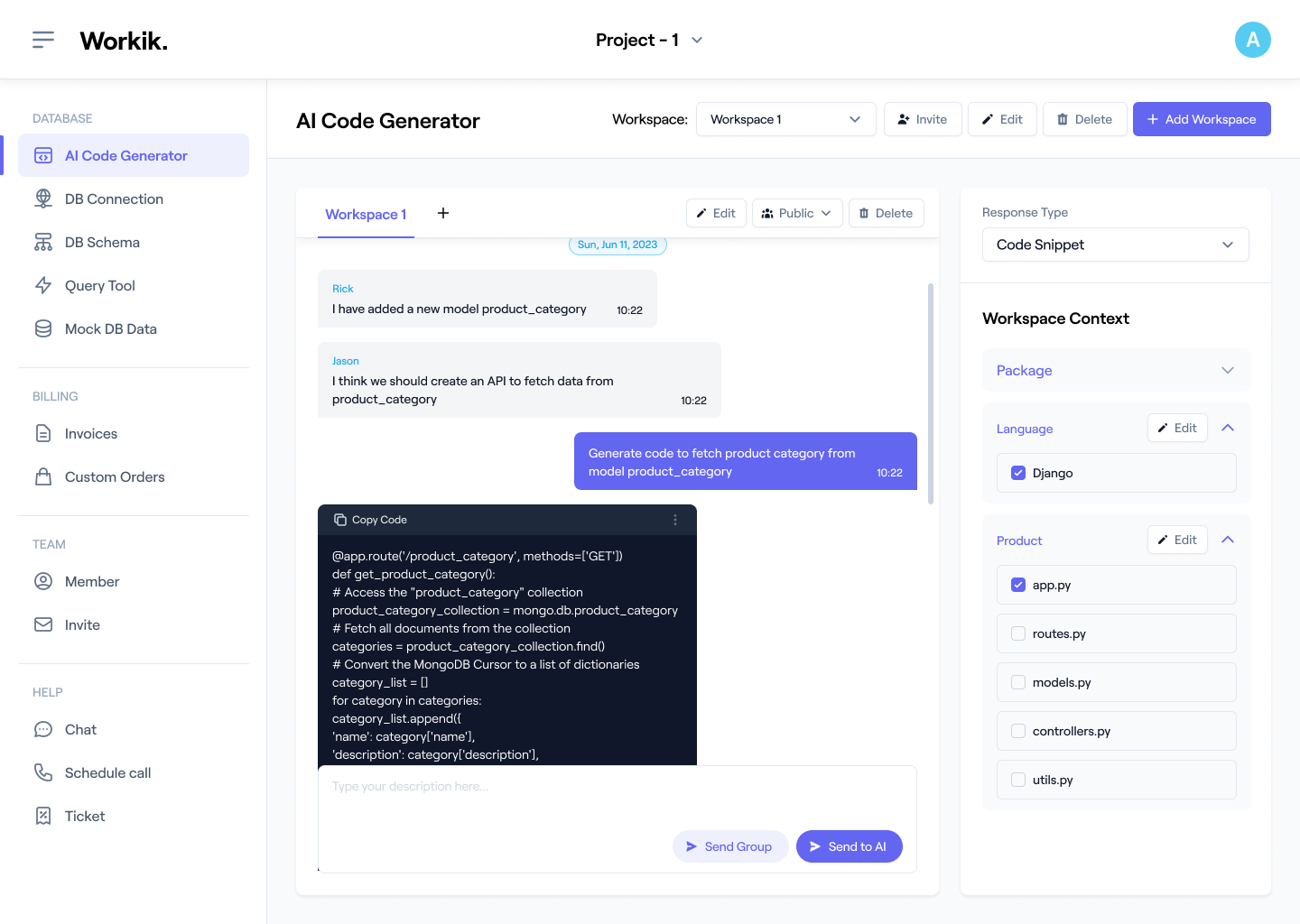

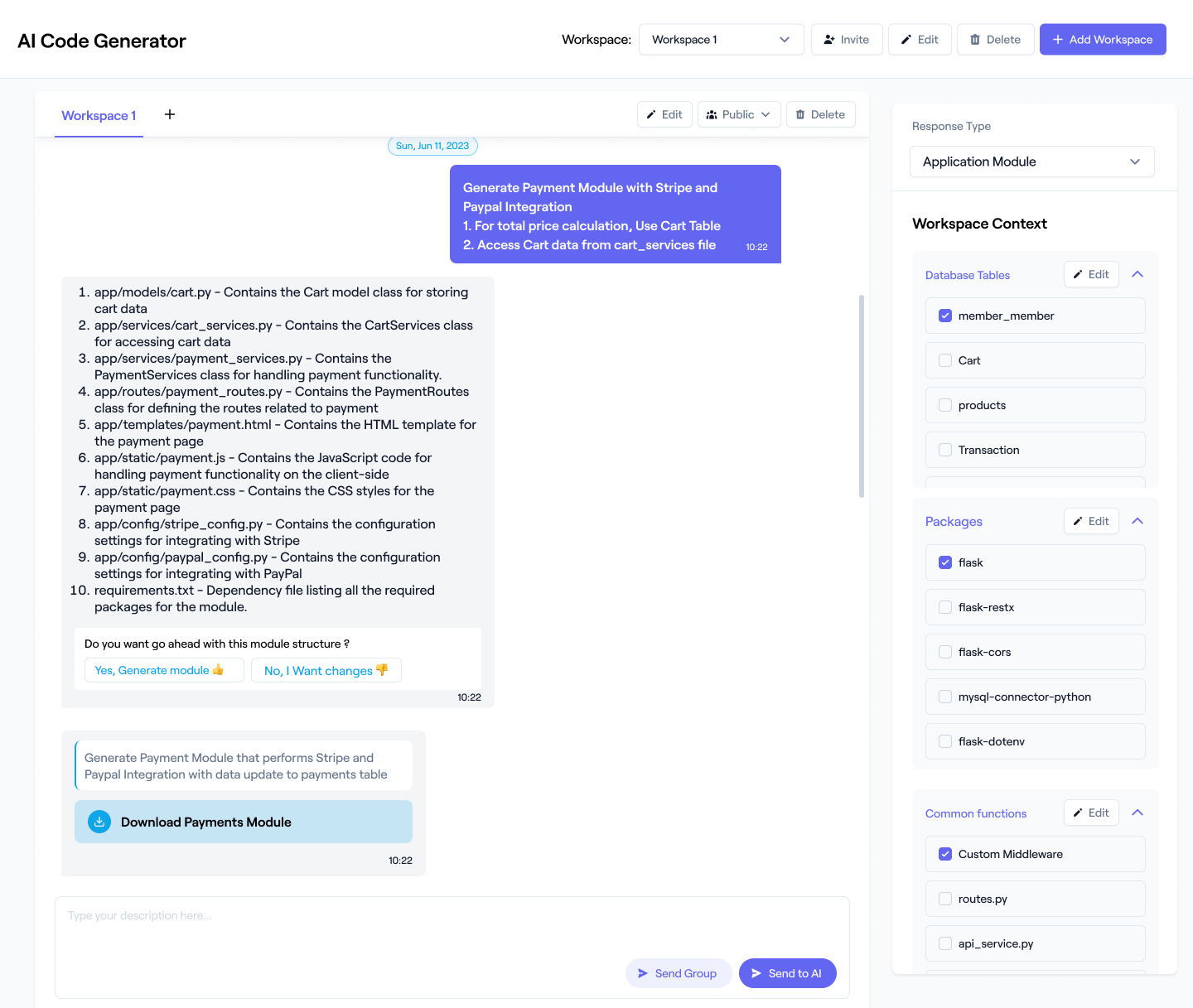

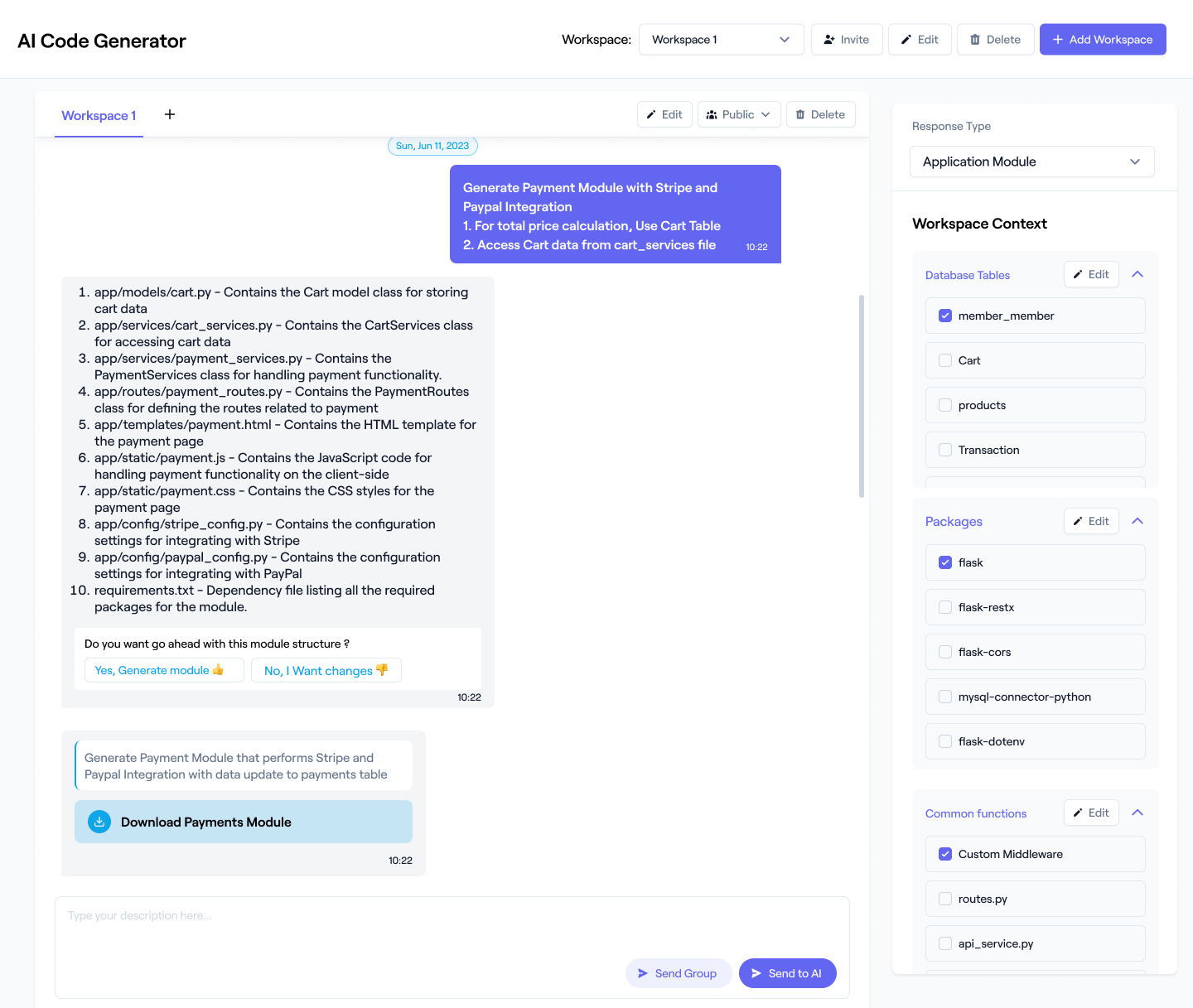

How it works

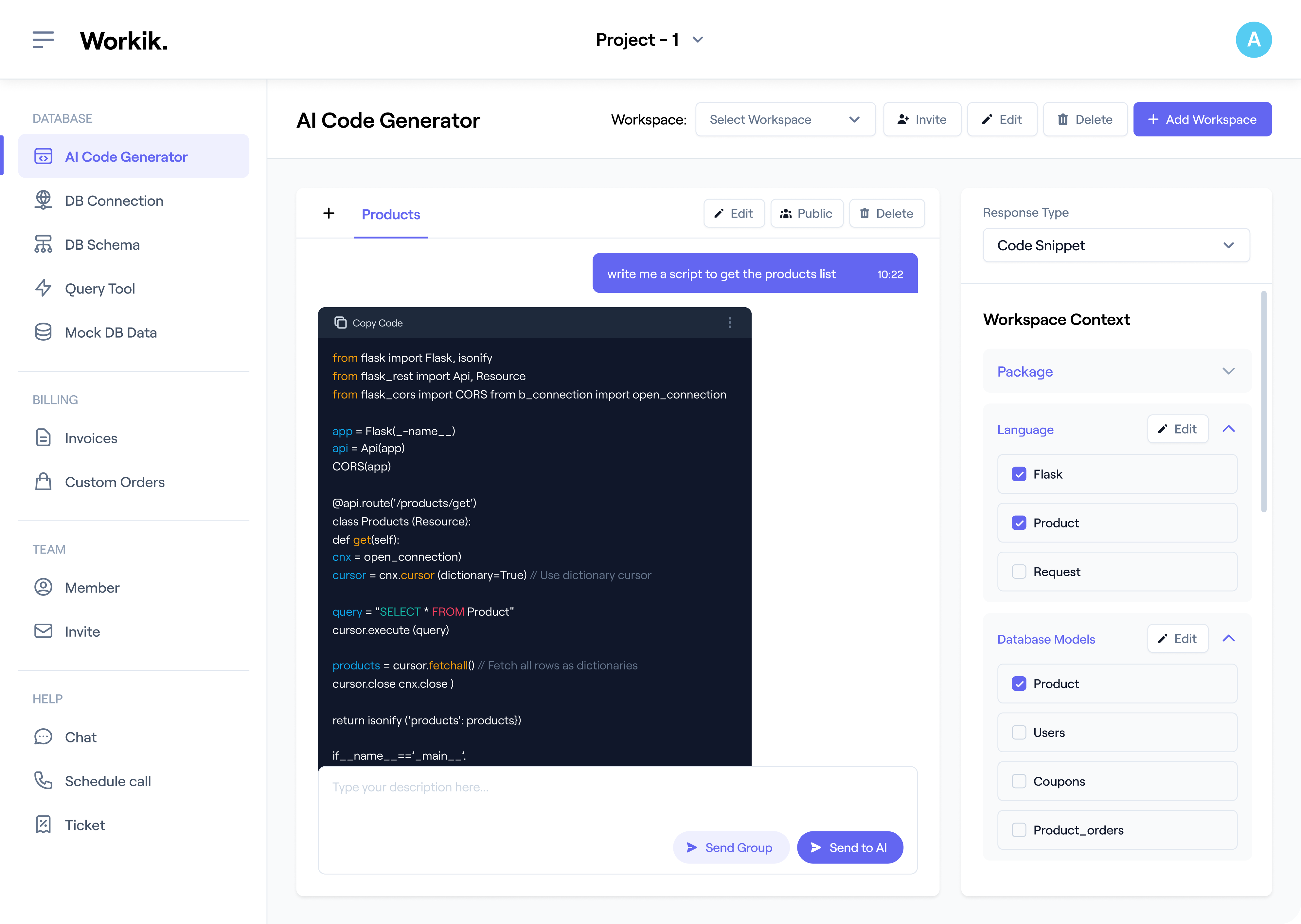

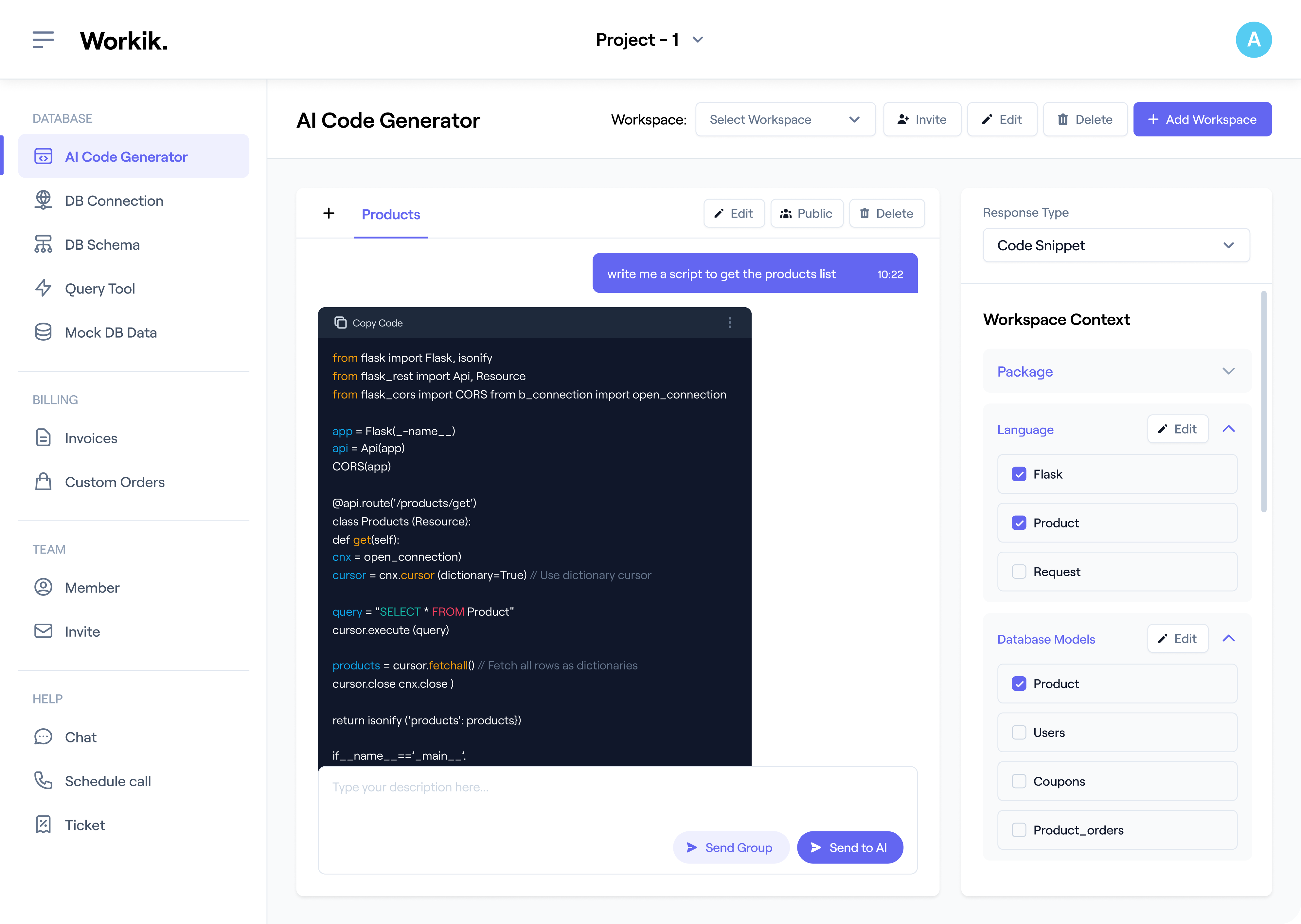

Create your Workik account in seconds and begin your Snowflake development journey with AI-powered assistance.

Connect your existing projects from GitHub, GitLab, or Bitbucket. Import datasets, queries, and schemas, and define tools like dbt, SnowSQL, and Python for precise AI-driven insights.

Utilize AI to automate query generation, optimize performance, manage data analytics, and facilitate debugging, testing, and documentation in Snowflake.

Work collaboratively with your team in real time, share insights, and integrate AI with your existing Snowflake data pipelines for seamless deployment and insight generation.

TESTIMONIALS

Real Stories, Real Results with Workik

Workik AI automates complex Snowflake ETL tasks, cutting our processing time by 50%. A total game-changer!

John Richards

Senior Data Engineer

Workik’s seamless integration optimizes Snowflake schema migrations and query tuning effortlessly. It’s a must-have!

Amanda Li

Cloud Solutions Architect

With Workik AI, I could generate real-time Snowflake queries in minutes, boosting our analytics speed and accuracy!

Rajesh Kumar

Data Analyst

What are popular use cases of Workik AI for Snowflake query generation?

Popular ways developers use Workik's AI for Snowflake query generation include, but are not limited to:

* Generate SQL queries for complex data retrieval based on user inputs.

* Utilize NLP to translate natural language queries into efficient SQL statements.

* Analyze execution plans and suggest optimizations like indexing and partitioning.

* Provide interactive query builders with AI-driven suggestions for exploring datasets.

* Create parameterized SQL queries for consistent reporting and data integrity.

* Automate data aggregation and filtering for faster insights.

* Optimize for cost efficiency by reducing data scans and adjusting compute resources.
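
Parameterized queries keep the report logic fixed and pass values such as a region or date range as bind variables, so every run uses the same statement and user input is never spliced into the SQL text. This sketch uses Python's built-in `sqlite3` module purely as a runnable stand-in for a Snowflake connection; the `sales` table and its columns are hypothetical. Snowflake's Python connector also supports bind variables, but it may use a different placeholder style, so check its documentation before porting.

```python
import sqlite3
from typing import List, Tuple

# One fixed report query; only the bound values change between runs.
REPORT_SQL = """
    SELECT region, SUM(amount) AS total
    FROM sales
    WHERE region = ? AND sale_date BETWEEN ? AND ?
    GROUP BY region
"""


def regional_total(conn: sqlite3.Connection, region: str, start: str, end: str) -> List[Tuple[str, float]]:
    # Values travel separately from the SQL text, so they are never parsed as SQL.
    return conn.execute(REPORT_SQL, (region, start, end)).fetchall()


def demo() -> sqlite3.Connection:
    # Hypothetical sample data in an in-memory database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, sale_date TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [("EMEA", "2024-01-05", 100.0), ("EMEA", "2024-02-10", 50.0), ("APAC", "2024-01-07", 70.0)],
    )
    return conn


if __name__ == "__main__":
    conn = demo()
    print(regional_total(conn, "EMEA", "2024-01-01", "2024-01-31"))  # [('EMEA', 100.0)]
```

A value such as `"EMEA' OR '1'='1"` is simply compared as a literal string and matches no rows.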

How does context-setting work in Workik for Snowflake projects?

Setting context in Workik is optional but enhances AI responses for your Snowflake projects. Here are the types of context you can add for Snowflake:

* Existing SQL scripts and stored procedures (sync your Snowflake project from GitHub, Bitbucket, or GitLab)

* Snowflake features (e.g., Snowpipe, Streams, or Tasks for data ingestion and processing)

* Database schemas (e.g., ER diagrams for accurate table relationships)

* Data types and structures (e.g., defining tables, views, and columns for optimal query generation)

* API specifications (e.g., OpenAPI or Swagger for generating integration-ready SQL queries)

How does Workik optimize Snowflake query performance?

Workik analyzes your Snowflake schema and data distribution, generating optimized queries that take advantage of Snowflake's MPP (Massively Parallel Processing) system. It can also suggest refactoring for faster execution and resource efficiency.
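
The core idea behind MPP aggregation can be shown in miniature: each worker aggregates only its own slice of the data, and a final step merges the partial results. The sketch below uses Python's `concurrent.futures` thread pool as a stand-in for Snowflake's compute nodes; the partition layout and the `(region, amount)` rows are hypothetical, and Snowflake's actual scheduling is far more involved.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, List, Tuple

Row = Tuple[str, float]  # hypothetical (region, amount) rows


def partial_sum(partition: Iterable[Row]) -> Counter:
    # Phase 1: each worker computes SUM(amount) GROUP BY region on its own partition.
    acc: Counter = Counter()
    for region, amount in partition:
        acc[region] += amount
    return acc


def parallel_group_sum(partitions: List[List[Row]], workers: int = 4) -> Dict[str, float]:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum, partitions))
    # Phase 2: merge the per-partition aggregates into the final result.
    total: Counter = Counter()
    for p in partials:
        total.update(p)  # Counter.update adds values rather than replacing them
    return dict(total)


if __name__ == "__main__":
    parts = [[("EMEA", 10.0), ("APAC", 5.0)], [("EMEA", 2.5)], [("APAC", 1.0), ("AMER", 4.0)]]
    print(parallel_group_sum(parts))
```

Threads keep the sketch self-contained; for CPU-heavy work in CPython, a process pool would parallelize better.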

Can Workik AI handle Snowflake’s advanced features?

Absolutely. Workik AI generates queries that leverage Snowflake’s Time Travel feature and efficiently handle semi-structured data stored in VARIANT columns, so you can work with JSON, Avro, or Parquet data.
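
Querying a VARIANT column usually means extracting fields with path notation and exploding nested arrays into rows, which Snowflake does with `LATERAL FLATTEN`. The Python sketch below mimics that row explosion on a JSON document so the shape of the result is clear. The `orders` table and the `order_id`, `items`, `sku`, and `qty` fields are hypothetical, and the SQL in the comment is an illustrative query, not Workik output.

```python
import json
from typing import Dict, List

# Illustrative Snowflake query this sketch mirrors (names are hypothetical):
#   SELECT o.v:order_id::INT   AS order_id,
#          i.value:sku::STRING AS sku,
#          i.value:qty::INT    AS qty
#   FROM orders o, LATERAL FLATTEN(input => o.v:items) i;


def flatten_items(raw_json: str) -> List[Dict[str, object]]:
    """Emit one row per element of the document's `items` array."""
    doc = json.loads(raw_json)
    rows = []
    # As with FLATTEN's default OUTER => FALSE, an empty array yields no rows.
    for item in doc.get("items", []):
        rows.append({"order_id": doc["order_id"], "sku": item["sku"], "qty": item["qty"]})
    return rows


if __name__ == "__main__":
    raw = '{"order_id": 7, "items": [{"sku": "A1", "qty": 2}, {"sku": "B2", "qty": 1}]}'
    for row in flatten_items(raw):
        print(row)
```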

How does Workik AI handle Snowflake-specific features in queries?

Workik AI supports Snowflake features like clustering keys and materialized views. For clustering keys, it generates queries that optimize data pruning, reducing scan times and improving performance. With materialized views, Workik leverages precomputed query results to speed up execution and lower compute costs.
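
Pruning works because Snowflake keeps min/max metadata for each micro-partition, so a range filter can skip any partition whose range cannot contain matching rows; a clustering key helps by keeping similar values in the same partitions. This toy model, with a hypothetical partition size and data, counts how many partitions a filter must scan when the same values are stored clustered versus scattered.

```python
from typing import List, Tuple


def build_partitions(values: List[int], size: int) -> List[Tuple[int, int]]:
    """Split values into fixed-size partitions and keep only (min, max) per partition."""
    meta = []
    for i in range(0, len(values), size):
        chunk = values[i:i + size]
        meta.append((min(chunk), max(chunk)))
    return meta


def partitions_scanned(meta: List[Tuple[int, int]], lo: int, hi: int) -> int:
    """Count partitions whose [min, max] overlaps the filter lo <= x <= hi; the rest are pruned."""
    return sum(1 for mn, mx in meta if mx >= lo and mn <= hi)


if __name__ == "__main__":
    clustered = list(range(100))
    scattered = [(i % 10) * 10 + i // 10 for i in range(100)]  # same values, interleaved
    print(partitions_scanned(build_partitions(clustered, 10), 20, 29))  # 1
    print(partitions_scanned(build_partitions(scattered, 10), 20, 29))  # 10
```

With clustered data the filter touches one partition out of ten; with scattered data every partition's range overlaps the filter, so nothing can be pruned.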

Can Workik AI generate Snowflake queries for tasks involving large-scale data transformations?

Yes, Workik AI can generate complex queries involving pivots, aggregations, and nested subqueries. It also supports creating efficient ETL (Extract, Transform, Load) queries that transform large datasets, using Snowflake's bulk data loading and parallel processing capabilities to keep them fast.
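
A pivot turns row values into columns. In Snowflake this is written with the `PIVOT` clause, for example `SELECT * FROM monthly_sales PIVOT(SUM(amount) FOR month IN ('JAN', 'FEB'))`. The Python sketch below reproduces that reshaping on a list of tuples so the input and output shapes are explicit; the `monthly_sales` table and its `empid`, `month`, and `amount` columns are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def pivot_sum(rows: List[Tuple[int, str, float]], months: List[str]) -> Dict[int, Dict[str, Optional[float]]]:
    """Group by empid, spread `months` into columns, and SUM(amount) per cell."""
    sums: Dict[int, Dict[str, float]] = defaultdict(dict)
    for empid, month, amount in rows:
        if month in months:  # this sketch ignores months outside the IN (...) list
            sums[empid][month] = sums[empid].get(month, 0.0) + amount
    # Cells with no input rows come back as None, like NULL in SQL.
    return {emp: {m: cells.get(m) for m in months} for emp, cells in sums.items()}


if __name__ == "__main__":
    rows = [(1, "JAN", 100.0), (1, "JAN", 50.0), (1, "FEB", 20.0), (2, "FEB", 30.0)]
    print(pivot_sum(rows, ["JAN", "FEB"]))
```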

Snowflake: Questions & Answers

Snowflake is a cloud-based data platform that enables secure and scalable data warehousing, analytics, and data sharing. It supports multi-cloud deployment on AWS, Azure, and GCP, allowing businesses to store and analyze structured and semi-structured data. Snowflake’s separation of compute and storage architecture provides flexible scaling, high performance, and optimized query execution across massive datasets.

Popular frameworks and libraries used for generating and executing queries in Snowflake include:

* Data Ingestion: Snowpipe, Apache Kafka

* ETL: dbt (Data Build Tool), Apache Spark

* Query Optimization: Snowflake's Query Profile tool

* Data Transformation: SQL, Python UDFs, Java UDFs

* Orchestration: Apache Airflow, Prefect

Popular use cases of the Snowflake Query Generator include:

* Data Retrieval: Automatically generate SQL queries to retrieve complex data sets using joins, subqueries, and filtering.

* ETL Query Generation: Build queries for efficient data transformation and loading into Snowflake using SQL and Python UDFs.

* Real-Time Query Execution: Generate queries for data ingestion and analysis using Snowpipe and Streams.

* Data Reporting: Create parameterized queries for consistent reporting across departments or clients.

* Optimized Query Generation: Generate queries for large datasets, leveraging Snowflake’s multi-cluster architecture.

Career opportunities and technical roles available for Snowflake professionals include Data Engineer, Data Architect, Cloud Data Analyst, ETL Developer, Snowflake Developer, BI Engineer, Data Operations Engineer, SQL Developer, Database Administrator (DBA), and Cloud Data Architect.

Workik AI provides comprehensive assistance for Snowflake development, including:

* SQL Code Generation: Generates complex SQL queries for Snowflake, handling joins, aggregations, and nested subqueries.

* Query Optimization: Analyzes query execution plans and suggests improvements like clustering keys, partitioning, and indexing.

* Performance Tuning: Refactors queries to leverage Snowflake’s MPP (Massively Parallel Processing).

* ETL Query Generation: Generates SQL queries to automate Extract, Transform, and Load (ETL) processes.

* Handling Semi-Structured Data: Assists in creating queries for JSON, Avro, and Parquet data in Snowflake’s VARIANT columns.

* Time-Travel Queries: Generates queries using Snowflake’s Time Travel feature for historical data retrieval.
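
Time Travel queries add an `AT` or `BEFORE` clause to a table reference, for example `AT(OFFSET => -3600)` to read a table as it was an hour ago, or `AT(TIMESTAMP => ...)` for a specific point in time. The helper below is a hypothetical sketch that only assembles the SQL text for the offset form; the table names are made up, and such a query succeeds only within the table's configured data retention period.

```python
import re

# Conservative check for unquoted identifiers, optionally qualified as db.schema.table.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}$")


def time_travel_select(table: str, seconds_ago: int) -> str:
    """Build a SELECT that reads `table` as of `seconds_ago` seconds in the past."""
    if not _IDENT.match(table):
        raise ValueError(f"unsafe table identifier: {table!r}")
    if seconds_ago <= 0:
        raise ValueError("seconds_ago must be positive")
    # OFFSET is a negative number of seconds relative to the current time.
    return f"SELECT * FROM {table} AT(OFFSET => -{int(seconds_ago)})"


if __name__ == "__main__":
    print(time_travel_select("analytics.public.orders", 3600))
```

Validating the identifier up front matters because table names cannot be passed as bind variables in the same way as values.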

© Workik Inc. 2026 All rights reserved.