Join our community to see how developers are using Workik AI every day.

Features

Optimize MapReduce Jobs

AI helps to generate efficient MapReduce code and adjust YARN settings to enhance scalability.

Simplify HDFS Management

AI can create HDFS scripts for file transfers, directory management, and replication, and suggest storage optimizations.
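As a rough illustration of what such a script might look like, here is a minimal Python sketch that assembles standard `hdfs dfs` shell commands for creating a directory, uploading a file, and setting replication. The function name, file name, and paths are hypothetical, and the commands are only built and printed, not executed.

```python
import shlex


def hdfs_commands(local_path, hdfs_dir, replication=3):
    """Return HDFS shell commands as argument lists (nothing is executed)."""
    return [
        # Create the target directory, including missing parents.
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],
        # Upload the local file, overwriting an existing copy.
        ["hdfs", "dfs", "-put", "-f", local_path, hdfs_dir],
        # Set the replication factor and wait until it is reached.
        ["hdfs", "dfs", "-setrep", "-w", str(replication), hdfs_dir],
    ]


if __name__ == "__main__":
    # Hypothetical file and directory names, for illustration only.
    for cmd in hdfs_commands("events.csv", "/data/raw/events", replication=2):
        print(shlex.join(cmd))
```

Each list could be passed to `subprocess.run` on a machine with a configured Hadoop client.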

Craft Hive Queries

Use AI to formulate HiveQL with optimized partitioning and indexing for faster query performance.
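To make the partitioning idea concrete, the following sketch builds a HiveQL `CREATE TABLE` statement with a `PARTITIONED BY` clause. The helper, table name, and column names are hypothetical, and Parquet is an assumed storage format chosen only for the example.

```python
def partitioned_table_ddl(table, columns, partition_columns):
    """Build a HiveQL CREATE TABLE statement for a partitioned Parquet table.

    `columns` and `partition_columns` are lists of (name, hive_type) pairs.
    """
    cols = ",\n  ".join(f"{name} {hive_type}" for name, hive_type in columns)
    parts = ", ".join(f"{name} {hive_type}" for name, hive_type in partition_columns)
    return (
        f"CREATE TABLE IF NOT EXISTS {table} (\n  {cols}\n)\n"
        f"PARTITIONED BY ({parts})\n"
        "STORED AS PARQUET"
    )


if __name__ == "__main__":
    # Hypothetical table: page views partitioned by day.
    print(partitioned_table_ddl(
        "page_views",
        [("user_id", "BIGINT"), ("url", "STRING")],
        [("view_date", "STRING")],
    ))
```

Queries that filter on the partition column (here `view_date`) let Hive skip partitions that cannot match, which is where most of the speedup typically comes from.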

Streamline Sqoop Transfers

Create Sqoop scripts for smooth data migration, reducing errors and optimizing bandwidth with AI.
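A generated Sqoop script usually comes down to a single `sqoop import` invocation. The sketch below assembles one from standard Sqoop options; the function name, JDBC URL, table, and paths are placeholders, not values from any real system.

```python
import shlex


def sqoop_import_command(jdbc_url, table, target_dir, username,
                         password_file, num_mappers=4, split_by=None):
    """Build a `sqoop import` command as an argument list (not executed)."""
    cmd = [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--username", username,
        # Read the password from a protected file instead of the command line.
        "--password-file", password_file,
        "--table", table,
        "--target-dir", target_dir,
        # Number of parallel map tasks used for the transfer.
        "--num-mappers", str(num_mappers),
    ]
    if split_by:
        # Column used to divide the table among the mappers.
        cmd += ["--split-by", split_by]
    return cmd


if __name__ == "__main__":
    # All values below are hypothetical.
    print(shlex.join(sqoop_import_command(
        "jdbc:mysql://db.example.com/sales", "orders", "/data/raw/orders",
        "etl_user", "/user/etl/.db_password", num_mappers=8, split_by="order_id",
    )))
```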

How it works

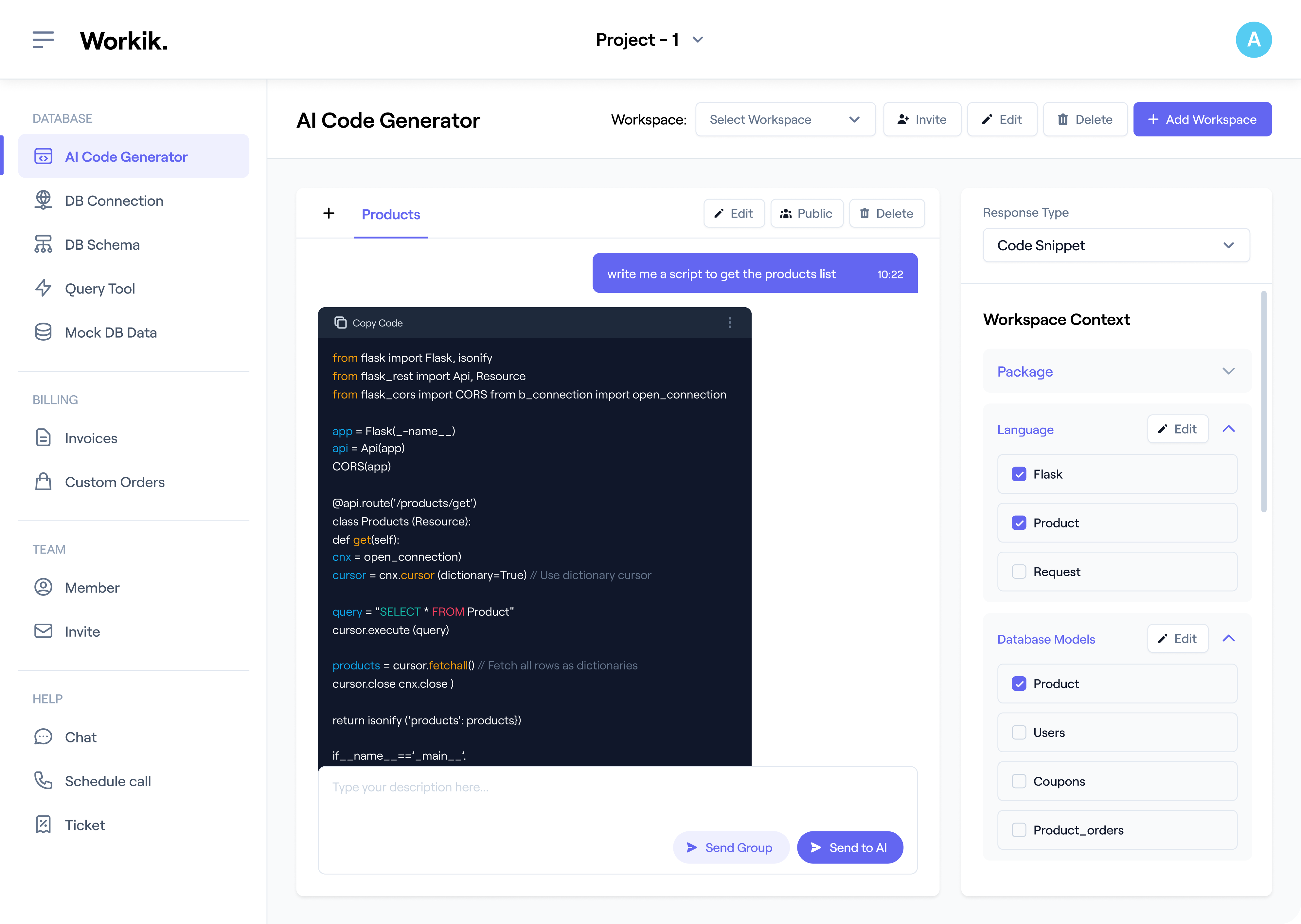

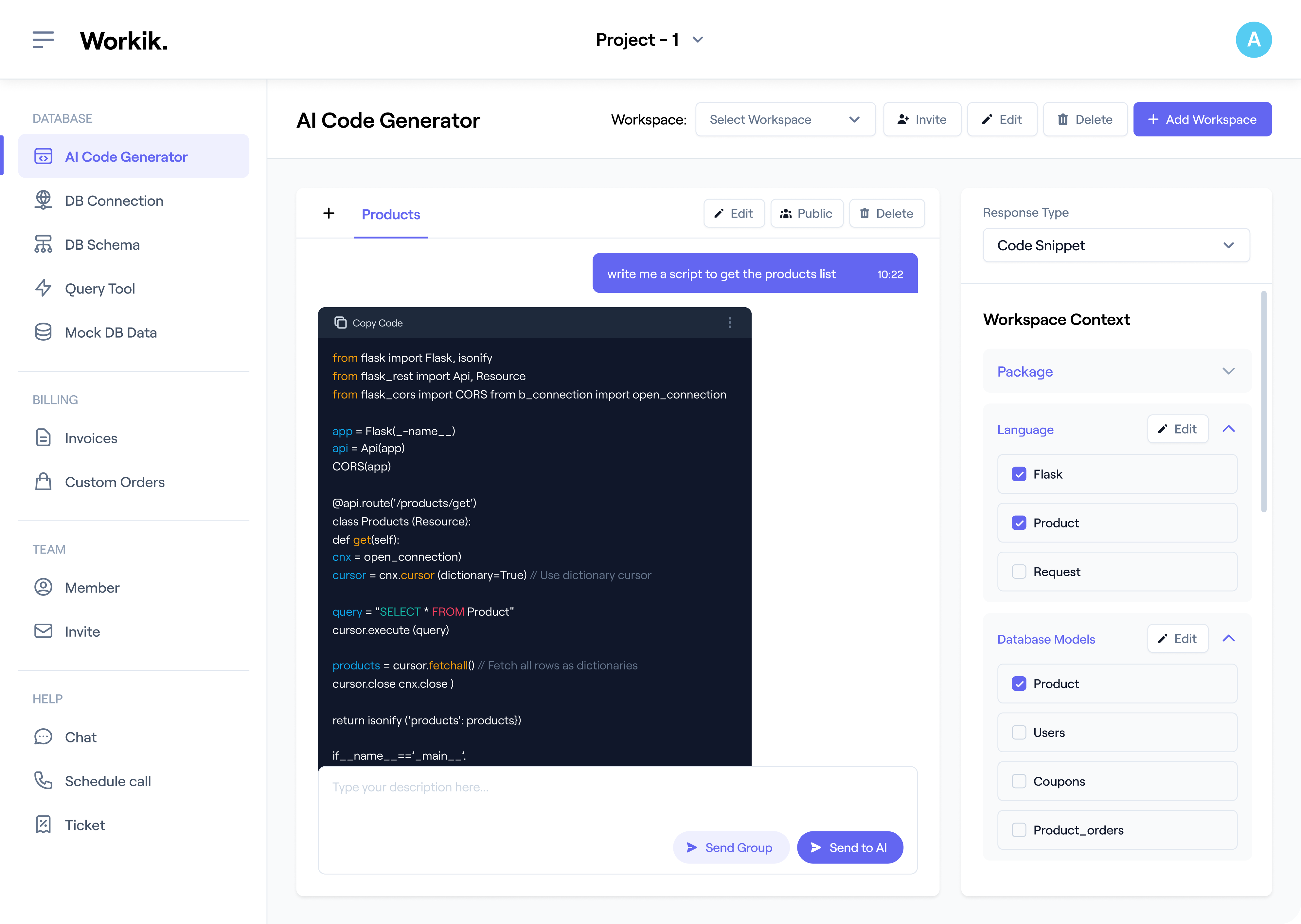

Sign up in seconds using Google or enter your details manually to access Workik’s AI-driven Hadoop coding tools.

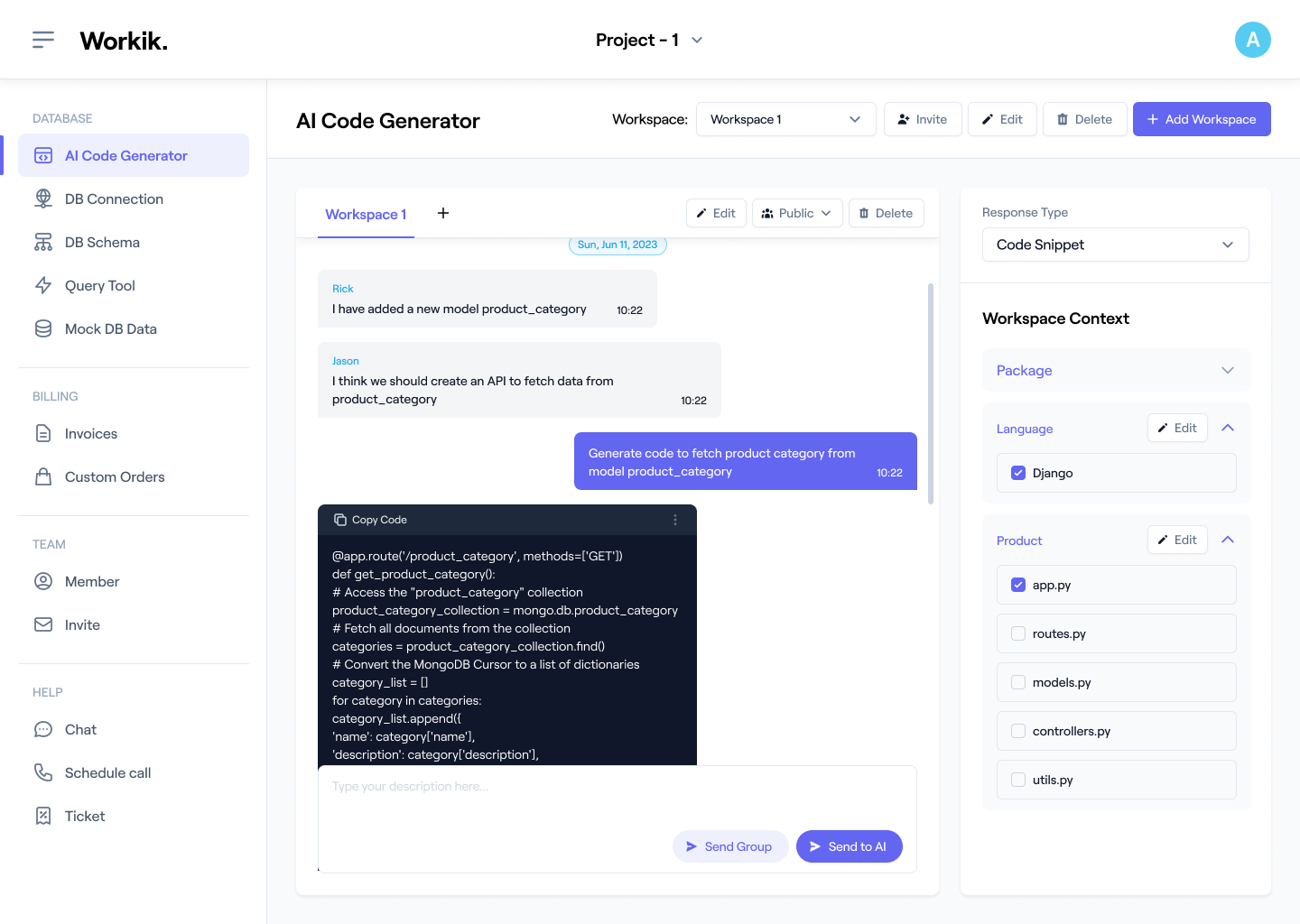

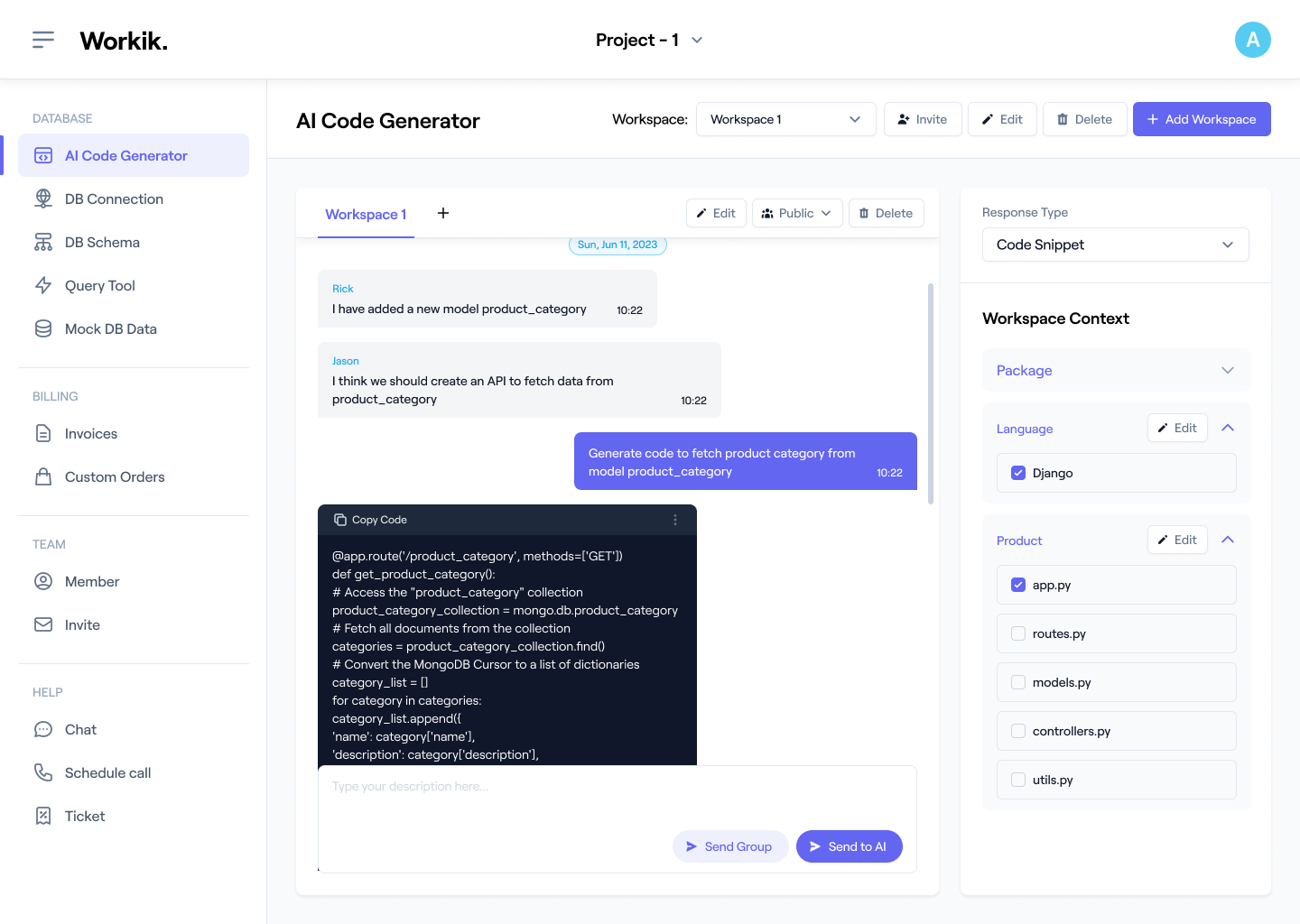

Link your GitHub, GitLab, or Bitbucket repositories. Specify your cluster configuration, data sources, and preferred libraries like Apache Hive or Spark for more precise AI assistance.

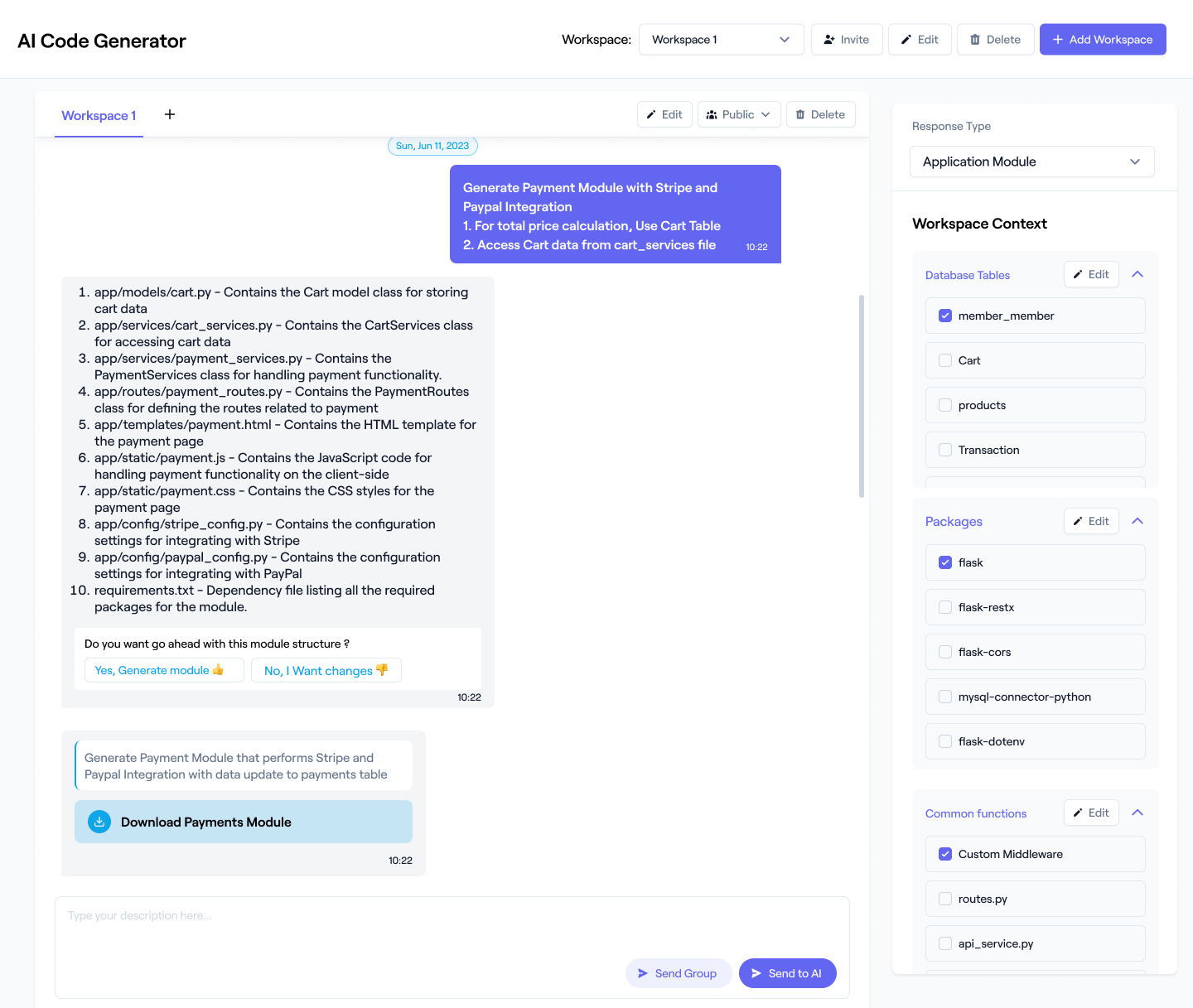

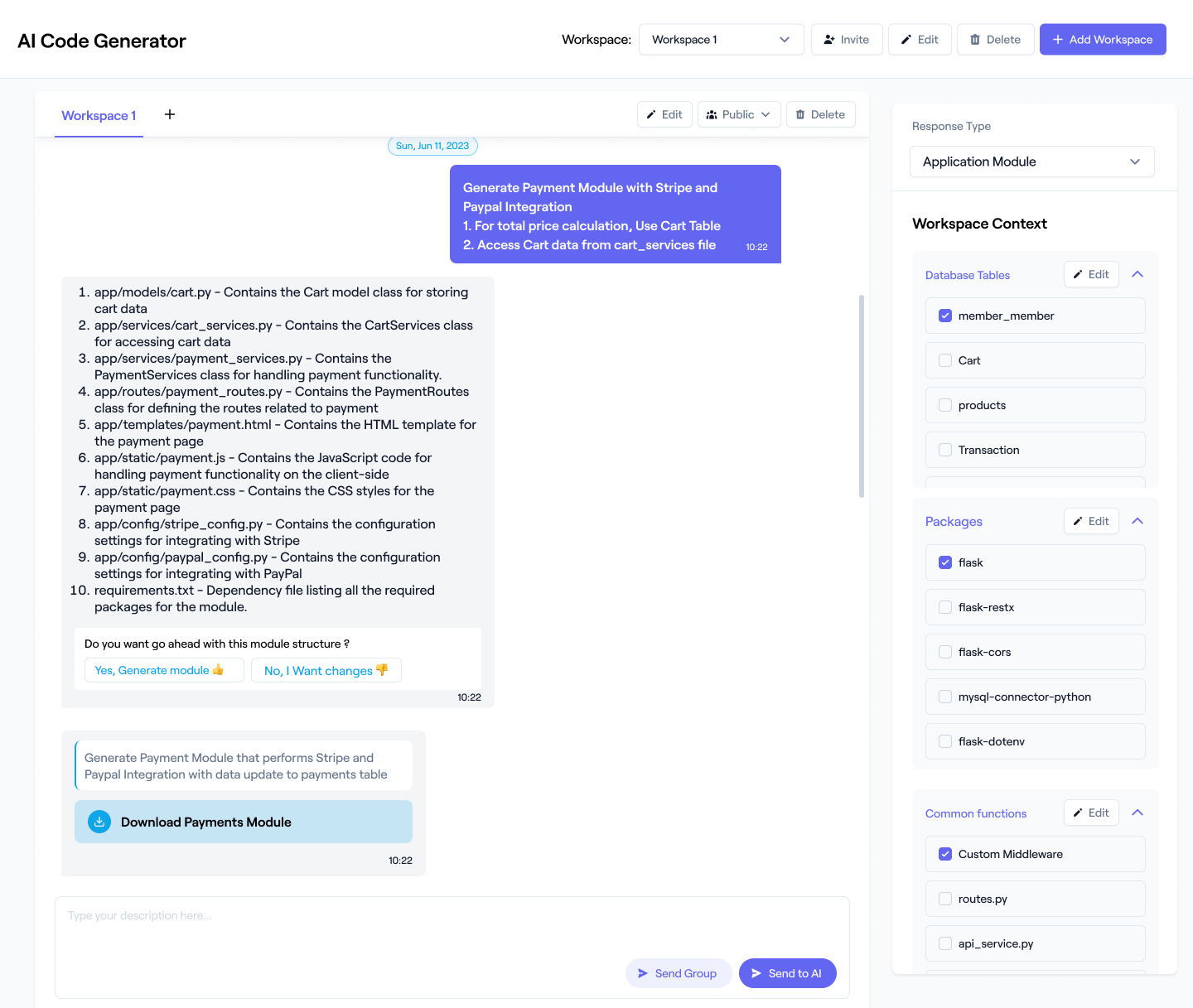

You can receive AI-driven suggestions, debug code snippets, or generate complete MapReduce jobs, Spark applications, or Hive queries tailored to your data processing needs.

Invite your team to collaborate on Hadoop projects within Workik. Share workspaces, assign tasks, and leverage AI-driven insights to optimize performance and scalability.

TESTIMONIALS

Real Stories, Real Results with Workik

Workik’s AI supercharged my Hadoop ETL processes! Fast code generation: a huge time-saver!

Emily Chen

Data Engineer

Building data pipelines with Workik is a breeze! Efficient MapReduce jobs in minutes: game-changing!

David Patel

Software Developer

Crafting Hive queries was never easier! Workik’s AI optimization transformed my data analysis workflow!

Sarah Thompson

Data Scientist

What are the popular use cases of Workik's AI for Hadoop code generation?

Some popular use cases of Workik's Hadoop code generator include but are not limited to:

* Generate MapReduce jobs for processing large datasets efficiently in big data applications.

* Build ETL pipelines to extract, transform, and load data from various sources into Hadoop.

* Create HiveQL queries for data analysis and reporting in a Hadoop ecosystem.

* Simplify data ingestion from relational databases using Sqoop for seamless data integration.

* Generate Spark scripts for real-time data processing and analytics.

* Optimize HDFS file management and replication strategies for effective data storage.
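For readers new to the first use case, the MapReduce model can be sketched in plain Python without a cluster. This is an in-memory illustration of the map, shuffle, and reduce phases with a word count, not Hadoop's API; all function names are hypothetical.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single count."""
    return key, sum(values)


def word_count(lines):
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data", "Big jobs"]))
```

On a real cluster, the map and reduce functions run in parallel across nodes and the shuffle moves data over the network, but the division of work is the same.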

What kind of context can I add in Workik AI related to my Hadoop project?

Setting context in Workik is optional but helps personalize the AI responses for your Hadoop projects. Here are the types of context you can add for Hadoop:

* Programming languages (e.g., Java, Scala, Python for Hadoop applications)

* Existing data sources and formats (import from databases or data lakes to sync your Hadoop project)

* Frameworks (e.g., Apache Spark for data processing, Apache Hive for data warehousing)

* Libraries (e.g., Hadoop Common, HDFS API, Apache Mahout for machine learning)

* Data schemas (e.g., defining table structures in Hive or HBase)

* Workflow tools (e.g., Apache Oozie or Apache Airflow for job scheduling and management)

* API specifications (e.g., for integrating with RESTful services that use Hadoop data)

How can Workik's AI enhance data transformation and machine learning workflows in Hadoop?

Workik's AI can generate optimized Hive scripts for complex data transformations and automate the creation of ETL processes that aggregate data from multiple sources. Additionally, it facilitates the integration of machine learning libraries like Apache Mahout and Spark MLlib, enabling the setup of training pipelines for predictive models using historical data stored in HDFS.

What role does Workik's AI play in Hadoop cluster monitoring?

Workik AI can produce scripts to integrate monitoring tools like Apache Ambari or Grafana. This helps set up alerts and dashboards for tracking cluster health and performance metrics, ensuring proactive management of resources.

Can Workik assist with data archival strategies in Hadoop?

Yes, Workik's AI can create scripts for implementing data lifecycle management policies. This includes automating the archival of old data from HDFS to cheaper storage solutions like Amazon S3, optimizing costs while ensuring data availability.
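One way such a lifecycle script might be structured is sketched below: it picks the HDFS paths older than a cutoff and builds a `hadoop distcp` command for each, targeting an `s3a://` destination. The function name, bucket, paths, and the 90-day policy are assumptions made for the example, and the commands are only built, not run.

```python
from datetime import date, timedelta


def archive_commands(partitions, today, max_age_days, s3_prefix):
    """Build distcp commands for partitions older than `max_age_days`.

    `partitions` maps an HDFS path to the date of the data it holds.
    """
    cutoff = today - timedelta(days=max_age_days)
    commands = []
    for hdfs_path, partition_date in sorted(partitions.items()):
        if partition_date < cutoff:
            # Mirror the HDFS path under the S3 prefix.
            dest = s3_prefix.rstrip("/") + "/" + hdfs_path.strip("/")
            commands.append(["hadoop", "distcp", hdfs_path, dest])
    return commands


if __name__ == "__main__":
    # Hypothetical partitions and bucket name.
    partitions = {
        "/data/logs/dt=2024-01-01": date(2024, 1, 1),
        "/data/logs/dt=2024-05-15": date(2024, 5, 15),
    }
    for cmd in archive_commands(partitions, date(2024, 6, 1), 90,
                                "s3a://example-archive"):
        print(" ".join(cmd))
```

The sketch only copies data. Deleting the HDFS source would be a separate step, ideally after the copy has been verified.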

How does Workik support Hadoop ecosystem integration?

Workik's AI can generate code to connect Hadoop with various data sources and systems, such as NoSQL databases (like MongoDB) or data lakes. This enables seamless data flows for analytics across diverse platforms, enhancing overall data accessibility.

Generate Code For Free

Hadoop: Questions & Answers

Hadoop is an open-source framework designed for distributed storage and processing of large data sets across clusters of computers using simple programming models. Known for its scalability and fault tolerance, Hadoop is ideal for big data applications. It is widely used in data analytics, machine learning, and data warehousing.

Popular frameworks and libraries used with Hadoop include:

Data Processing:

Apache Spark, Apache Hive, Apache Pig, Apache Flink

Data Storage:

HDFS (Hadoop Distributed File System), Apache HBase, Apache Parquet

Data Ingestion:

Apache Sqoop, Apache Flume

Machine Learning:

Apache Mahout, MLlib (Spark’s machine learning library)

Workflow Management:

Apache Oozie, Apache Airflow

Monitoring and Management:

Apache Ambari, Cloudera Manager

Popular use cases of Hadoop encompass:

Big Data Analytics:

Processing and analyzing vast datasets for insights and trends.

Data Warehousing:

Storing and managing large volumes of structured and unstructured data.

Log Processing:

Collecting and analyzing log data from various sources for monitoring and troubleshooting.

Machine Learning:

Building and training models using large datasets in a distributed manner.

ETL Processes:

Extracting, transforming, and loading data from different sources into a centralized repository.

Real-time Data Processing:

Handling streaming data from sources like IoT devices and social media for immediate insights.

Career opportunities and technical roles for Hadoop developers include Big Data Developer, Data Engineer, Hadoop Administrator, Data Scientist, ETL Developer, Machine Learning Engineer, Cloud Engineer (Hadoop-focused), Business Intelligence Analyst, DevOps Engineer (with Hadoop skills), and Systems Architect.

Workik AI provides extensive Hadoop code generation support, including:

Code Generation:

Automatically creating optimized MapReduce, HiveQL, and Spark code snippets.

Debugging:

Detecting and resolving issues in Hadoop jobs with intelligent suggestions.

Testing:

Supporting Hadoop testing frameworks and generating test cases for reliable data processing.

Optimization:

Profiling and improving job performance for better resource utilization.

Automation:

Automating repetitive tasks like data ingestion and job scheduling with generated scripts.

Refactoring:

Suggesting best practices for efficient and maintainable Hadoop code.

Cluster Management:

Assisting in optimizing cluster configurations for effective data processing.

© Workik Inc. 2026 All rights reserved.