Join our community to see how developers are using Workik AI every day.

Features

Generate Diverse Inputs

AI creates tests from function definitions to maximize edge-case discovery with QuickCheck.

Expand Test Coverage

AI helps find boundary inputs so that corner cases are thoroughly validated, following property-based testing principles.

Simplify Debugging

AI identifies minimal failing test cases through intelligent shrinking with fast-check.

Integrate with Testing Pipelines

AI integrates with pytest to enable automated testing, which improves consistency in CI/CD environments.

How it works

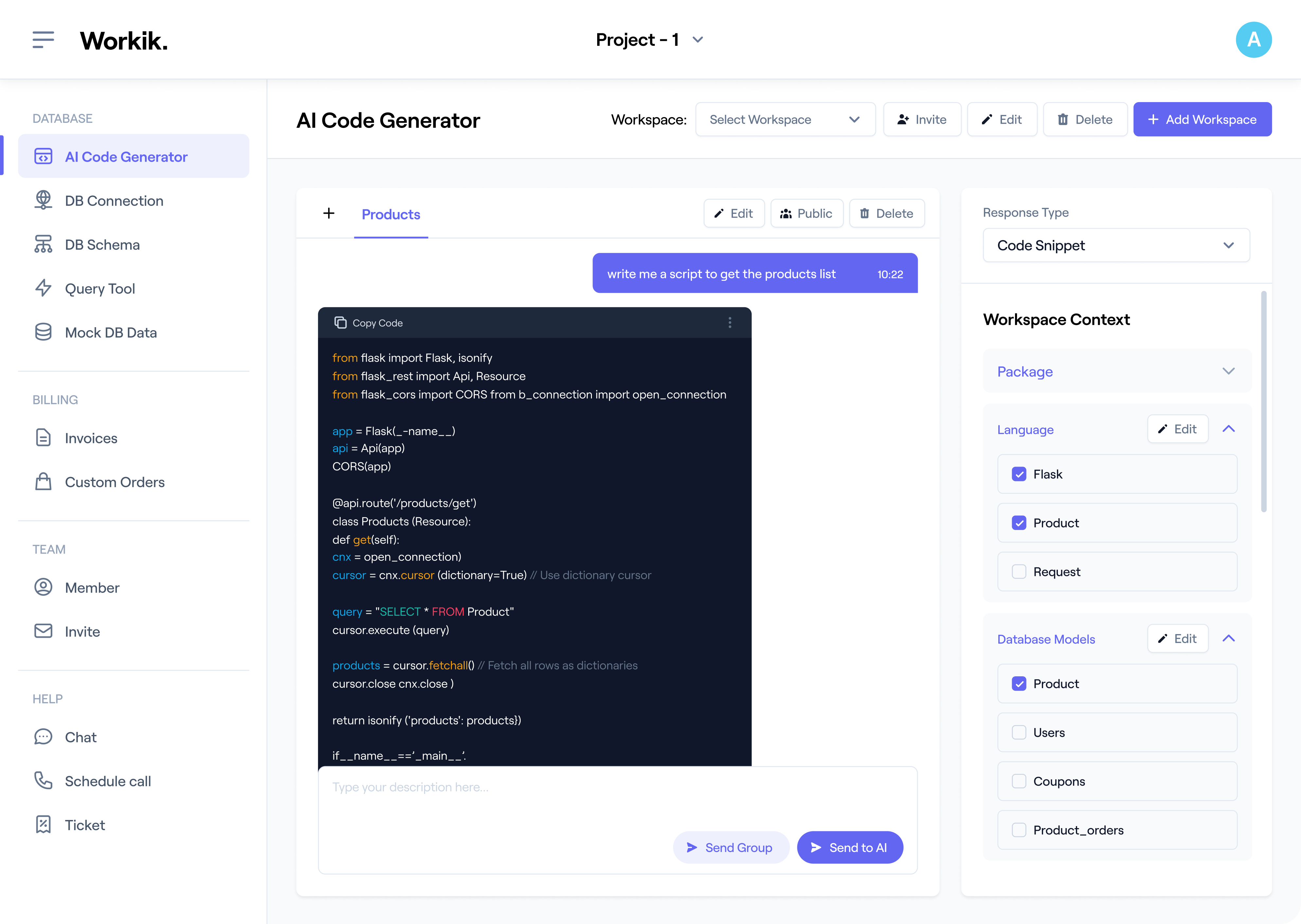

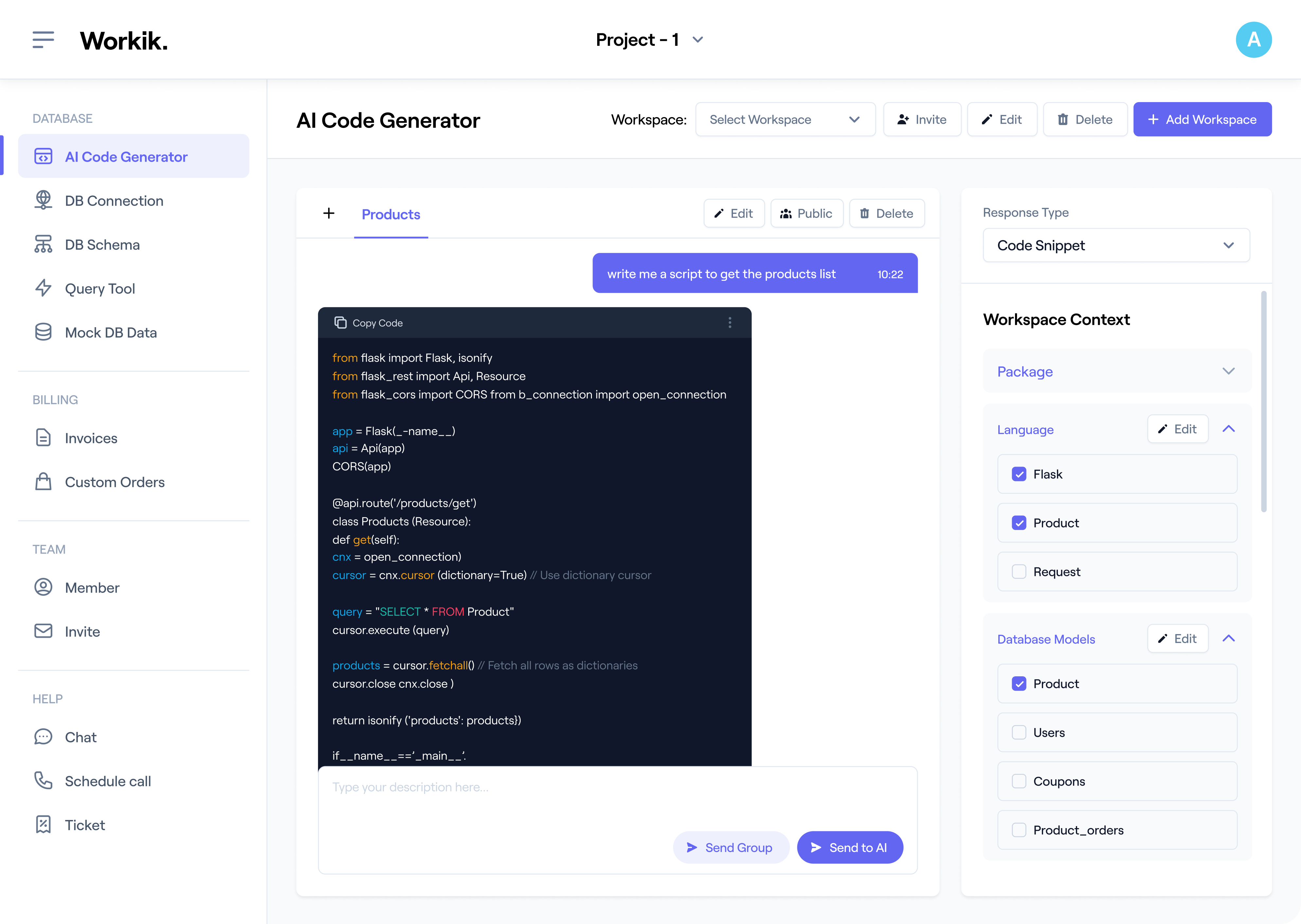

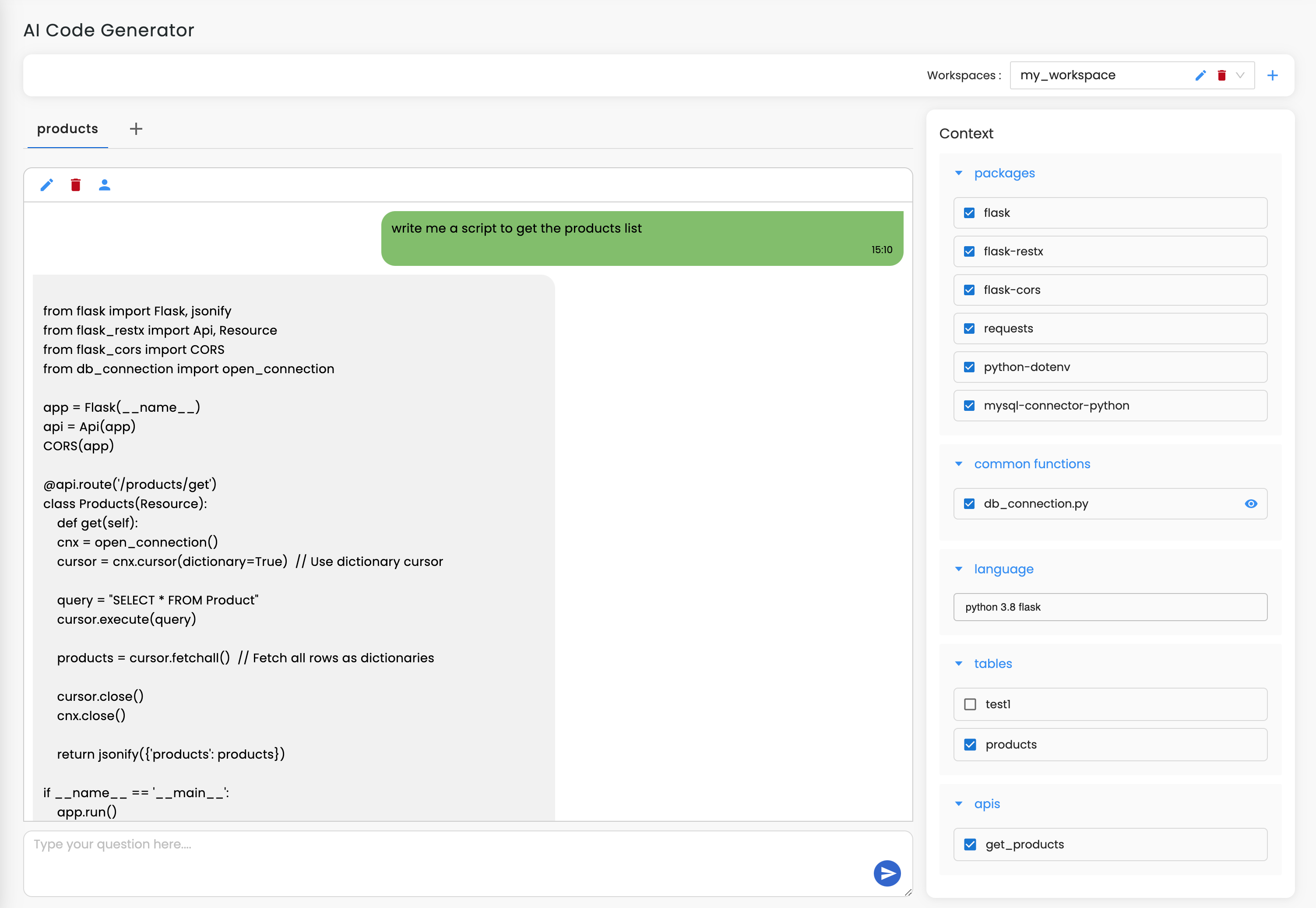

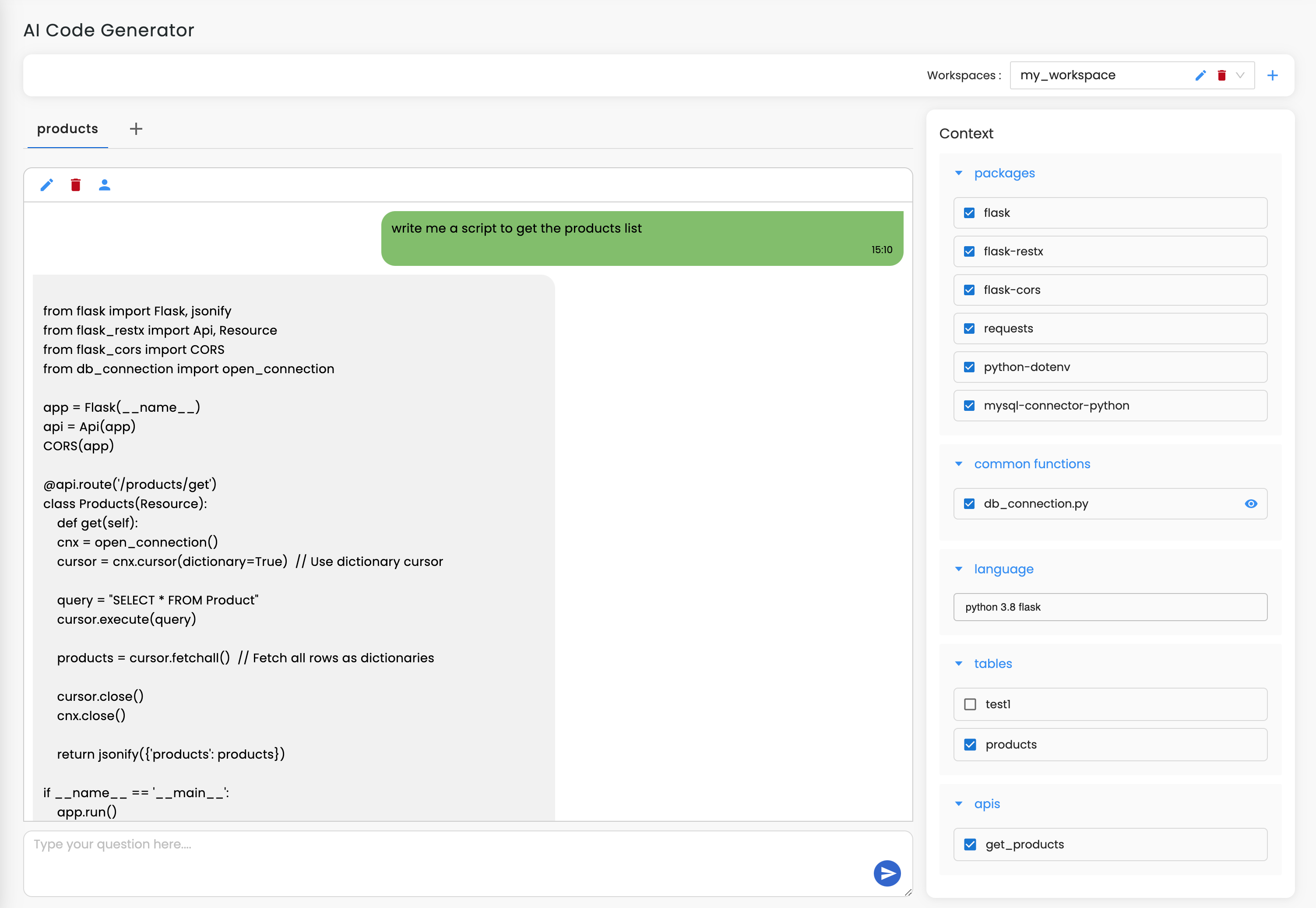

Sign up on Workik using Google or manually in seconds, then create your workspace for Hypothesis test case generation.

Define properties, constraints, and function specifications. Upload existing code or configurations to tailor property-based testing with Hypothesis.

Generate Hypothesis test cases with AI. It explores random inputs, discovers edge cases, shrinks failing examples, and optimizes coverage dynamically.

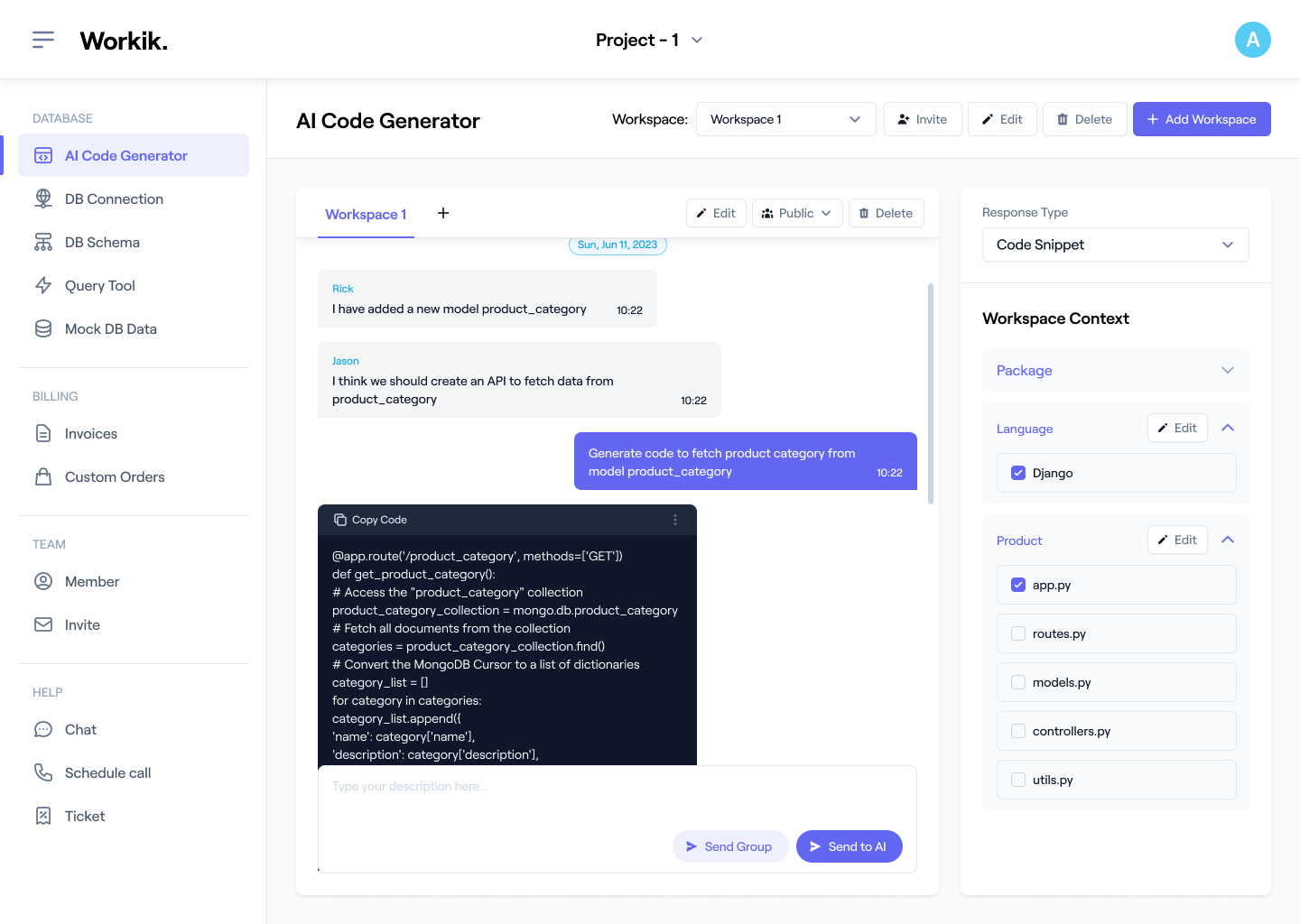

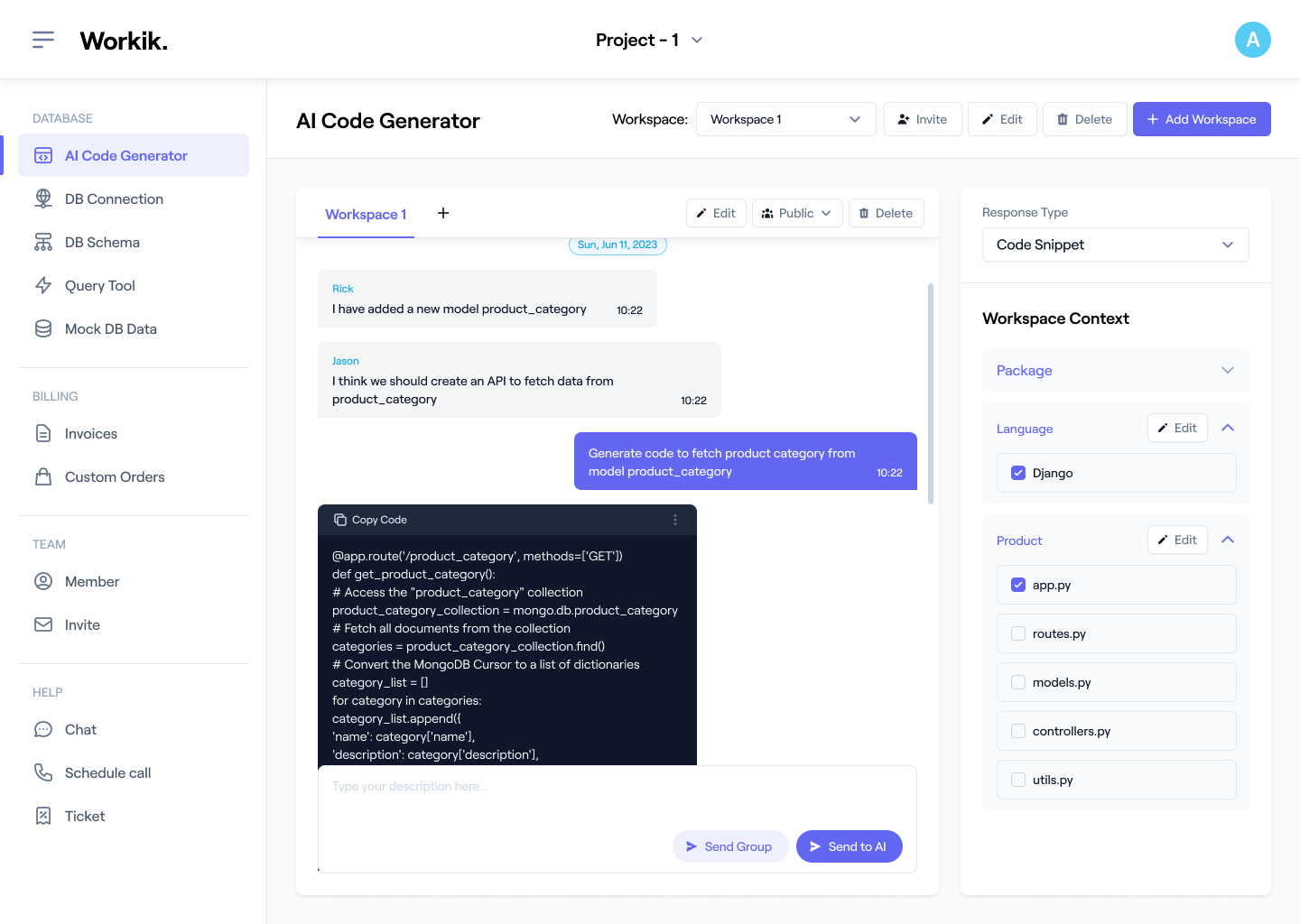

Share AI-generated test cases with your team for input. Refine collaboratively and execute tests seamlessly to validate properties and debug failures.

TESTIMONIALS

Real Stories, Real Results with Workik

Workik AI uncovered critical edge cases and streamlined debugging effortlessly.

Emma Clarke

Senior QA Engineer

Workik AI improved test coverage and enhanced code quality seamlessly.

Lara Martinez

DevOps Specialist

Workik AI made property-based testing easy, generating precise Hypothesis cases in no time.

Ayush More

Backend Developer

What are some popular use cases for Workik’s AI-powered Hypothesis Test Case Generator?

Workik’s AI-powered Hypothesis Test Case Generator supports a range of developer use cases, including but not limited to:

* Generate property-based test cases for validating complex functional behaviors.

* Automate exploration of edge cases and boundary conditions dynamically.

* Shrink failing inputs to identify minimal reproducible cases for debugging.

* Test robustness of algorithms against randomized data inputs.

* Validate invariants and properties across diverse input domains.

* Simulate performance scenarios by scaling input sizes and complexity.

* Perform regression testing to ensure consistent behavior across updates.
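To make the shrinking use case above concrete, here is a toy sketch in plain Python of how a failing input can be reduced to a minimal one. It is a hand-written illustration, not Workik output and not the algorithm Hypothesis itself uses (Hypothesis shrinks automatically when a test fails). The names `has_bug`, `smaller_variants`, and `shrink` are hypothetical.

```python
def has_bug(xs):
    """Hypothetical failure condition: any element of 100 or more triggers the bug."""
    return any(x >= 100 for x in xs)


def smaller_variants(xs):
    """Yield inputs simpler than xs: shorter lists first, then smaller values."""
    for i in range(len(xs)):
        yield xs[:i] + xs[i + 1:]  # drop one element
    for i, x in enumerate(xs):
        for smaller in (0, x // 2, x - 1):  # move one element toward zero
            if 0 <= smaller < x:
                yield xs[:i] + [smaller] + xs[i + 1:]


def shrink(xs, fails):
    """Greedily replace the input with a simpler one while it still fails."""
    current = list(xs)
    while True:
        for candidate in smaller_variants(current):
            if fails(candidate):
                current = candidate
                break  # restart the search from the simpler failing input
        else:
            return current  # no simpler variant fails, so this is minimal


print(shrink([5, 250, 3, 1000], has_bug))  # prints [100]
```

The noisy four-element input collapses to the single value at the failure boundary, which is usually much easier to debug.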

What context-setting options are available in Workik’s AI for Hypothesis Test Case Generation?

Workik’s AI allows users to customize Hypothesis test case generation with a range of context-setting options:

* Upload function definitions, constraints, or test specifications for property validation.

* Include code snippets or libraries to guide test input generation.

* Define input domains and expected invariants for targeted property-based testing.

* Sync repositories from GitHub, GitLab, or Bitbucket for continuous integration.

* Specify failing scenarios or edge cases to prioritize during test generation.

* Configure runtime parameters for efficient and adaptive test execution.

How does Workik AI handle random input generation for Hypothesis test cases?

Workik AI uses adaptive algorithms to generate random inputs aligned with your defined constraints and properties. For instance, if you're testing a sorting algorithm, it can produce randomized datasets with edge cases like empty lists, duplicate entries, or highly skewed data distributions.
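For a sense of what such a test can look like, here is a minimal sketch written directly against the Hypothesis library. It is a hand-written example, not Workik output, and `my_sort` is a hypothetical stand-in for the function under test. It assumes the `hypothesis` package is installed.

```python
from collections import Counter

from hypothesis import given, strategies as st


def my_sort(items):
    """Hypothetical function under test; replace with your own sort."""
    return sorted(items)


@given(st.lists(st.integers()))
def test_sort_properties(items):
    result = my_sort(items)
    # Property 1: the output is in non-decreasing order.
    assert all(a <= b for a, b in zip(result, result[1:]))
    # Property 2: the output is a permutation of the input, duplicates included.
    assert Counter(result) == Counter(items)
```

Hypothesis draws many lists for `items`, typically including empty lists and lists with repeated values. Run the test with pytest, or call `test_sort_properties()` directly.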

How does Workik AI assist with hypothesis-driven data validation?

Workik AI can validate data structures and formats against user-defined hypotheses. For example, it can test whether a JSON API always returns data conforming to specific schemas or if numerical data stays within valid ranges, ensuring data integrity across systems.
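As a rough sketch of this kind of check, the example below uses Hypothesis to generate request data and asserts that a response always has the expected shape and stays within range. `build_response` and `conforms` are hypothetical; the shape check is hand-rolled here, and a real project might use a JSON Schema validator instead.

```python
from hypothesis import given, strategies as st


def build_response(user_id, scores):
    """Hypothetical handler standing in for a JSON API endpoint."""
    average = sum(scores) / len(scores) if scores else 0.0
    return {"user_id": user_id, "count": len(scores), "average": average}


def conforms(response):
    """Hand-rolled shape check: exact keys and expected value types."""
    return (
        set(response) == {"user_id", "count", "average"}
        and isinstance(response["user_id"], int)
        and isinstance(response["count"], int)
        and isinstance(response["average"], float)
    )


@given(
    user_id=st.integers(min_value=1),
    scores=st.lists(st.integers(min_value=0, max_value=100)),
)
def test_response_shape_and_range(user_id, scores):
    response = build_response(user_id, scores)
    assert conforms(response)
    # The average of scores in [0, 100] must itself stay in [0, 100].
    assert 0.0 <= response["average"] <= 100.0
```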

How does Workik AI enhance property-based testing for data validation workflows?

Workik AI generates test cases to validate properties like consistency, correctness, and completeness across data pipelines. For instance, in a data transformation workflow, it can ensure that all rows satisfy invariants like non-null values or consistent date formats, catching issues before production.
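A minimal sketch of such row-level invariants, written by hand with Hypothesis, might look like the following. The transformation `normalize_row` and the row layout are assumptions made for illustration only.

```python
from datetime import date

from hypothesis import given, strategies as st


def normalize_row(row):
    """Hypothetical transformation: fill missing names, format dates as ISO 8601."""
    return {
        "name": row["name"] or "unknown",
        "signup": row["signup"].isoformat(),
    }


# Generated input rows: the name may be missing, the signup date is always present.
rows = st.fixed_dictionaries({
    "name": st.one_of(st.none(), st.text()),
    "signup": st.dates(),
})


@given(st.lists(rows))
def test_rows_keep_invariants(batch):
    for row in batch:
        out = normalize_row(row)
        # Invariant 1: the name is never null or empty after the transformation.
        assert out["name"] is not None and out["name"] != ""
        # Invariant 2: the date string parses back to the original date.
        assert date.fromisoformat(out["signup"]) == row["signup"]
```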

Can Workik AI discover hidden properties during property-based testing?

Yes. For example, while testing a string manipulation function, it might uncover properties like idempotence (applying the function twice produces the same result as applying it once) or length preservation, guiding you to validate these overlooked behaviors.
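Once a candidate property such as idempotence has been identified, it can be written down as a test. The sketch below is a hand-written Hypothesis example; `normalize_whitespace` is a hypothetical function under test. This particular function does not preserve length, so the second test checks the weaker property that the output is never longer than the input.

```python
from hypothesis import given, strategies as st


def normalize_whitespace(text):
    """Hypothetical function under test: collapse runs of whitespace to one space."""
    return " ".join(text.split())


@given(st.text())
def test_idempotent(text):
    once = normalize_whitespace(text)
    # Applying the function a second time must change nothing.
    assert normalize_whitespace(once) == once


@given(st.text())
def test_never_longer(text):
    # Collapsing whitespace can only remove characters, never add them.
    assert len(normalize_whitespace(text)) <= len(text)
```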

How does Workik AI enable property-based testing for financial applications?

Workik AI generates test cases tailored to financial algorithms, such as validating transaction properties like balance consistency, precision in tax calculations, or compliance with regulatory constraints. For example, it can test edge cases like negative balances, high-frequency transactions, or extreme currency conversions.
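As an illustration of a balance-consistency property, here is a small hand-written Hypothesis sketch. The ledger function `transfer`, the account names, and the choice to store amounts as integer cents are all assumptions for the example, not part of Workik or Hypothesis.

```python
from hypothesis import given, strategies as st


def transfer(balances, src, dst, amount_cents):
    """Hypothetical ledger operation on integer cents; rejects invalid transfers."""
    if amount_cents <= 0 or balances[src] < amount_cents:
        return balances  # rejected: ledger unchanged
    updated = dict(balances)
    updated[src] -= amount_cents
    updated[dst] += amount_cents
    return updated


accounts = st.fixed_dictionaries({
    "alice": st.integers(min_value=0, max_value=10**12),
    "bob": st.integers(min_value=0, max_value=10**12),
})


# The amount range deliberately includes negative values and overdrafts.
@given(accounts, st.integers(min_value=-10**6, max_value=10**13))
def test_transfer_conserves_total(balances, amount):
    after = transfer(balances, "alice", "bob", amount)
    # Money is neither created nor destroyed.
    assert sum(after.values()) == sum(balances.values())
    # No account ever goes negative.
    assert all(value >= 0 for value in after.values())
```

Using integer cents rather than floats sidesteps rounding error, so the conservation check can use exact equality.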

Hypothesis Test Case: Question and Answer

A Hypothesis test case validates properties of code or functions by automatically generating inputs to test expected behaviors. It involves exploring edge cases, ensuring invariants hold, and debugging with simplified failing examples. The goal is to ensure robust code quality and uncover hidden issues with minimal manual effort.

Popular frameworks and tools:

Property Testing Libraries: QuickCheck

Testing Frameworks: pytest, unittest

Shrinking Tools: fast-check, jqwik

Language Support: Python, Scala, Haskell

Integration Tools: GitHub, GitLab, Bitbucket

Data Validation Libraries: Pandas, JSON Schema

Popular use cases for Hypothesis test case generation include:

* E-Commerce Platforms: Validate cart calculations, discount rules, and inventory updates for correctness.

* Financial Applications: Test transaction workflows, compliance rules, and precision in calculations.

* Data Pipelines: Ensure schema consistency, non-null fields, and accurate transformations in ETL processes.

* IoT Systems: Validate sensor data processing, anomaly detection, and state transitions.

* Gaming Applications: Test game mechanics, user interactions, and random event outcomes for consistency.

Professionals skilled in Hypothesis test case generation can explore roles such as Test Automation Engineer, Backend Developer, QA Engineer, Data Scientist, Software Architect, and Performance Testing Specialist.

Workik AI offers advanced support for Hypothesis test case generation, including:

* Dynamic Input Generation: Create test cases with randomized inputs to explore edge cases and boundaries.

* Property Validation: Automate checks for function invariants and state consistency.

* Failing Example Shrinking: Simplify complex failing cases to identify root causes quickly.

* Integration Testing: Validate workflows involving APIs, databases, and business logic seamlessly.

* Cross-Platform Testing: Test Hypothesis cases across Python, Scala, and Haskell-based systems.

* Debugging Assistance: Leverage AI to refine test cases and suggest property enhancements.

* CI/CD Integration: Automate Hypothesis test cases in continuous integration pipelines for rapid feedback.

* Performance Testing: Generate and execute test cases for scalability and robustness under load.
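For the CI/CD point above, one common way to run Hypothesis differently in a pipeline than on a laptop is a settings profile. The sketch below would typically live in a `conftest.py`. The profile names `ci` and `dev`, the example counts, and the `HYPOTHESIS_PROFILE` environment variable are illustrative choices, not Workik defaults.

```python
import os

from hypothesis import settings

# Thorough profile for the pipeline: more examples, no per-example time limit.
settings.register_profile("ci", max_examples=500, deadline=None)

# Quick profile for local runs.
settings.register_profile("dev", max_examples=20)

# Pick the profile from an environment variable, defaulting to the quick one.
settings.load_profile(os.getenv("HYPOTHESIS_PROFILE", "dev"))
```

A pipeline step could then set `HYPOTHESIS_PROFILE=ci` before invoking pytest.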

© Workik Inc. 2026 All rights reserved.