Join our community to see how developers are using Workik AI every day.

Supported AI models on Workik

GPT 5.2 Codex, GPT 5.2, GPT 5.1 Codex, GPT 5.1, GPT 5 Mini, GPT 5

Gemini 3.1 Pro, Gemini 3 Flash, Gemini 3 Pro, Gemini 2.5 Pro

Claude 4.6 Sonnet, Claude 4.5 Sonnet, Claude 4.5 Haiku, Claude 4 Sonnet

DeepSeek Reasoner, DeepSeek Chat, DeepSeek R1 (High)

Grok 4.1 Fast, Grok 4, Grok Code Fast 1

Model availability may vary depending on your Workik plan.

Features

Clean Data Instantly

AI generates precise scripts to drop duplicates, fix types, and clean unstructured records across SQL, CSV, and Excel.
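To make "drop duplicates, fix types" concrete, here is a minimal standard-library Python sketch of that kind of script. It is an illustration, not Workik's actual output, and the column names and `TYPE_MAP` are hypothetical.

```python
import csv
import io

# Hypothetical target type per column; a real script would derive this from your schema.
TYPE_MAP = {"id": int, "amount": float, "name": str}


def coerce(value, target):
    """Convert a raw CSV string to the target type; blanks and unparseable values become None."""
    value = (value or "").strip()
    if value == "":
        return None
    try:
        return target(value)
    except ValueError:
        return None


def clean_rows(text):
    """Parse CSV text, fix column types, and drop exact duplicates (first occurrence wins)."""
    seen = set()
    cleaned = []
    for row in csv.DictReader(io.StringIO(text)):
        typed = {col: coerce(row.get(col), cast) for col, cast in TYPE_MAP.items()}
        key = tuple(typed.values())
        if key in seen:
            continue  # duplicate once types are fixed
        seen.add(key)
        cleaned.append(typed)
    return cleaned


raw = "id,amount,name\n1,10.50,Ann\n1,10.5,Ann\n2,oops,Bob\n"
print(clean_rows(raw))
# [{'id': 1, 'amount': 10.5, 'name': 'Ann'}, {'id': 2, 'amount': None, 'name': 'Bob'}]
```

Deduplicating after type coercion means `10.50` and `10.5` are treated as the same amount, which a plain string comparison would miss.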

Fix Formatting Accurately

Leverage AI to standardize dates, currency, and strings with intelligent transformations using Pandas, PySpark, or raw SQL.
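A sketch of date and currency standardization in plain Python, assuming the raw data only uses the formats listed in the hypothetical `DATE_FORMATS` and that `.` is the decimal separator. A generated script for your data might use Pandas, PySpark, or SQL instead.

```python
import re
from datetime import datetime
from decimal import Decimal, InvalidOperation

# Hypothetical input formats observed in the raw data, tried in order.
DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y", "%Y%m%d"]


def standardize_date(raw):
    """Return an ISO 8601 date string (YYYY-MM-DD), or None if no known format matches."""
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def standardize_currency(raw):
    """Strip symbols, codes, and thousands separators and return a Decimal, or None."""
    text = raw.strip()
    negative = text.startswith("(") and text.endswith(")")  # accounting-style negative
    digits = re.sub(r"[^0-9.\-]", "", text)  # assumes '.' is the decimal separator
    try:
        value = Decimal(digits)
    except InvalidOperation:
        return None
    return -value if negative else value


print(standardize_date("Mar 05, 2024"))  # 2024-03-05
print(standardize_currency("USD 1,299.00"))  # 1299.00
```

The order of `DATE_FORMATS` encodes an assumption: `05/03/2024` is read day-first. Data where day and month order is ambiguous needs an explicit rule per source.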

Prep for Analysis or ML

AI builds structured pipelines with normalization, encoding, and outlier fixes for model-ready datasets.
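For the model-prep step, here is a minimal sketch of three common transformations: IQR-based outlier capping, min-max normalization, and one-hot encoding. The function names and the 1.5 * IQR threshold are illustrative choices, not Workik defaults.

```python
from statistics import quantiles


def iqr_cap(values, k=1.5):
    """Clip values to the fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def min_max(values):
    """Rescale values to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


def one_hot(categories):
    """Turn each category into 0/1 indicator columns named is_<level>."""
    levels = sorted(set(categories))
    return [{f"is_{level}": int(c == level) for level in levels} for c in categories]


incomes = [10, 12, 11, 13, 12, 100]
print(iqr_cap(incomes))  # the 100 is pulled down to the upper fence
print(min_max([0, 5, 10]))  # [0.0, 0.5, 1.0]
print(one_hot(["red", "blue", "red"]))
```

In an ML pipeline, compute the min, max, and quartiles on the training split only and reuse them on validation and test data to avoid leakage.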

Merge Datasets Seamlessly

AI resolves overlaps, unifies headers, and deduplicates rows across sources like CRM exports or web-scraped files.
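Merging sources usually comes down to mapping headers onto one canonical schema and then deduplicating on a normalized key. The sketch below assumes rows arrive as Python dicts and uses a hypothetical `HEADER_ALIASES` table with `email` as the match key.

```python
# Hypothetical mapping from source-specific headers to one canonical schema.
HEADER_ALIASES = {
    "e-mail": "email",
    "email_address": "email",
    "full name": "full_name",
    "customer": "full_name",
}


def canonical_header(header):
    """Trim and lower-case a header, then map it to its canonical name."""
    key = header.strip().lower()
    return HEADER_ALIASES.get(key, key.replace(" ", "_"))


def merge_sources(*sources, key="email"):
    """Concatenate sources with unified headers, keeping the first row per normalized key."""
    merged, seen = [], set()
    for rows in sources:
        for row in rows:
            row = {canonical_header(k): v for k, v in row.items()}
            ident = str(row.get(key) or "").strip().lower()
            if ident and ident in seen:
                continue  # duplicate within or across sources
            seen.add(ident)
            merged.append(row)
    return merged


crm = [{"E-mail": "Ann@Example.com", "Full Name": "Ann Lee"}]
scraped = [
    {"email_address": "ann@example.com ", "customer": "Ann L."},
    {"email_address": "bob@example.com", "customer": "Bob Ray"},
]
print(merge_sources(crm, scraped))
```

Because the first row per key wins, source order sets precedence here; a real merge might prefer the most recently updated record instead.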

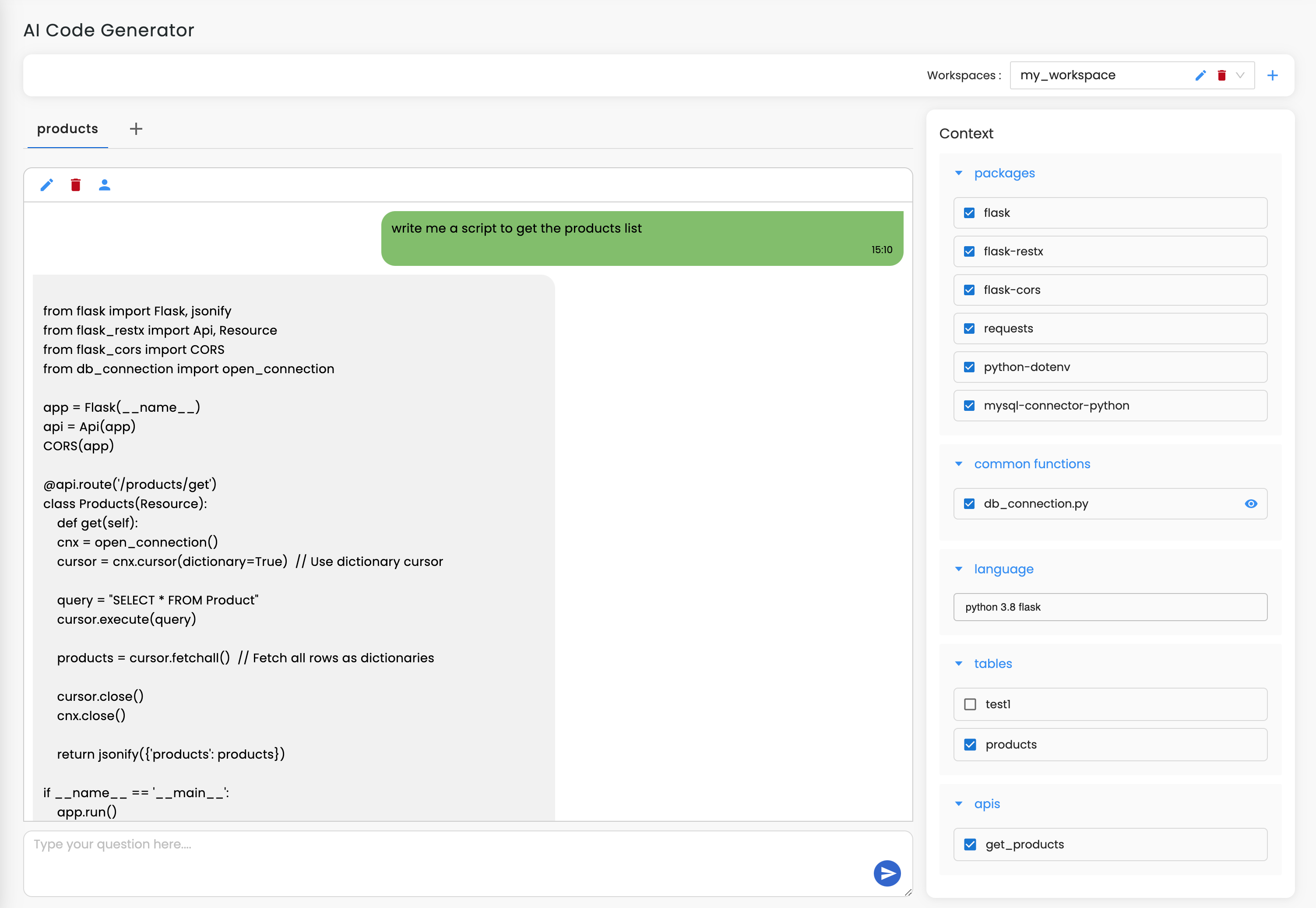

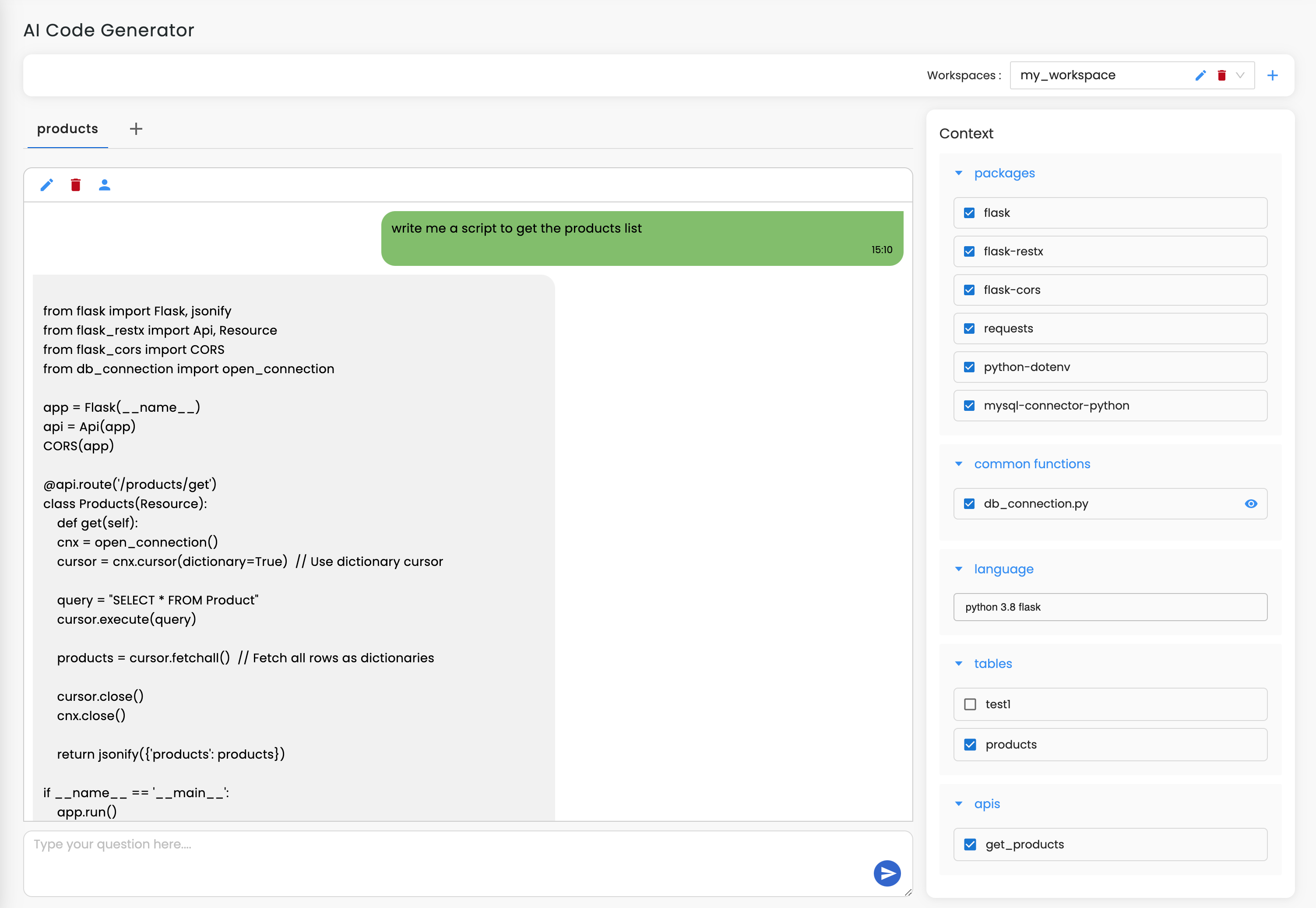

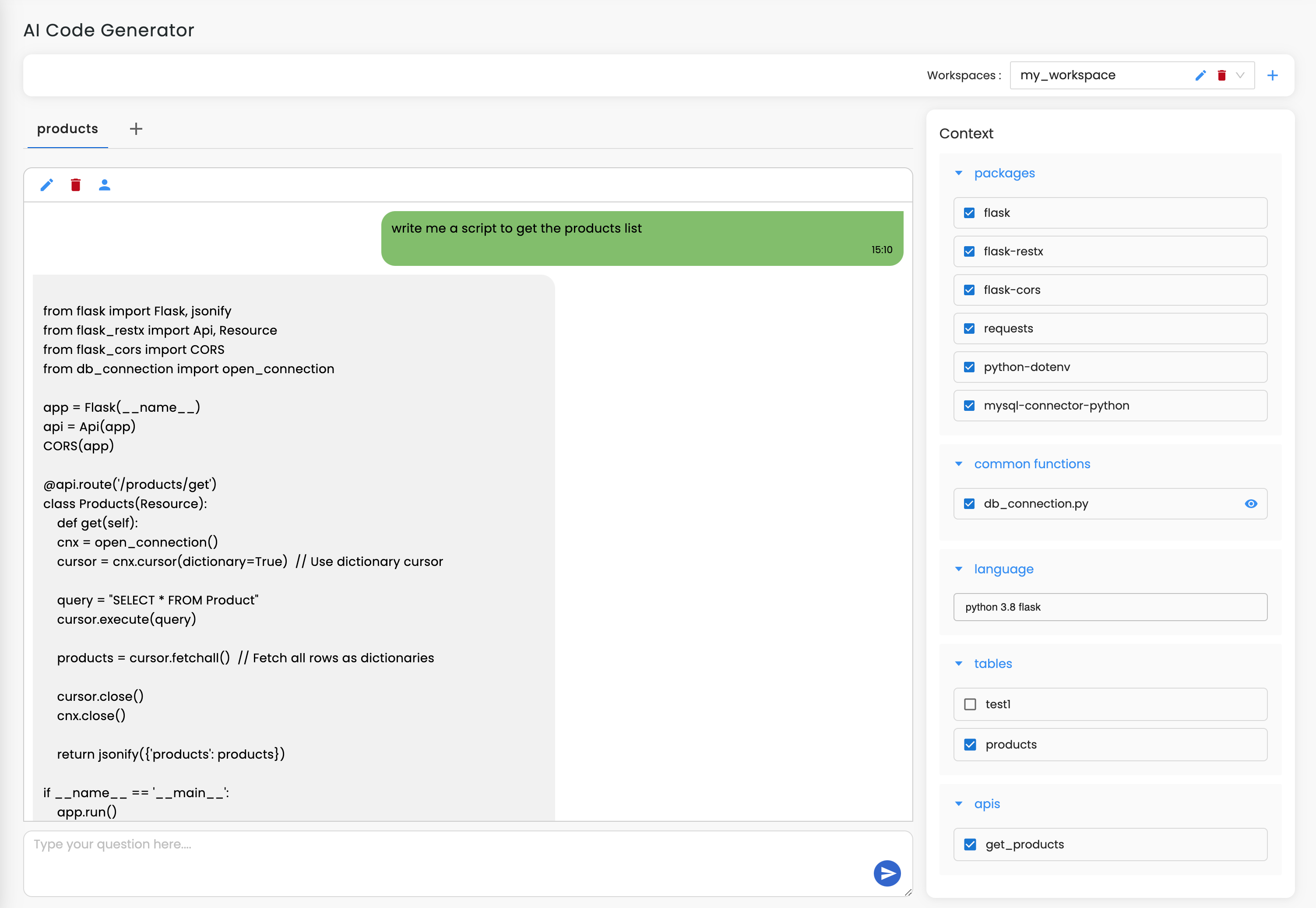

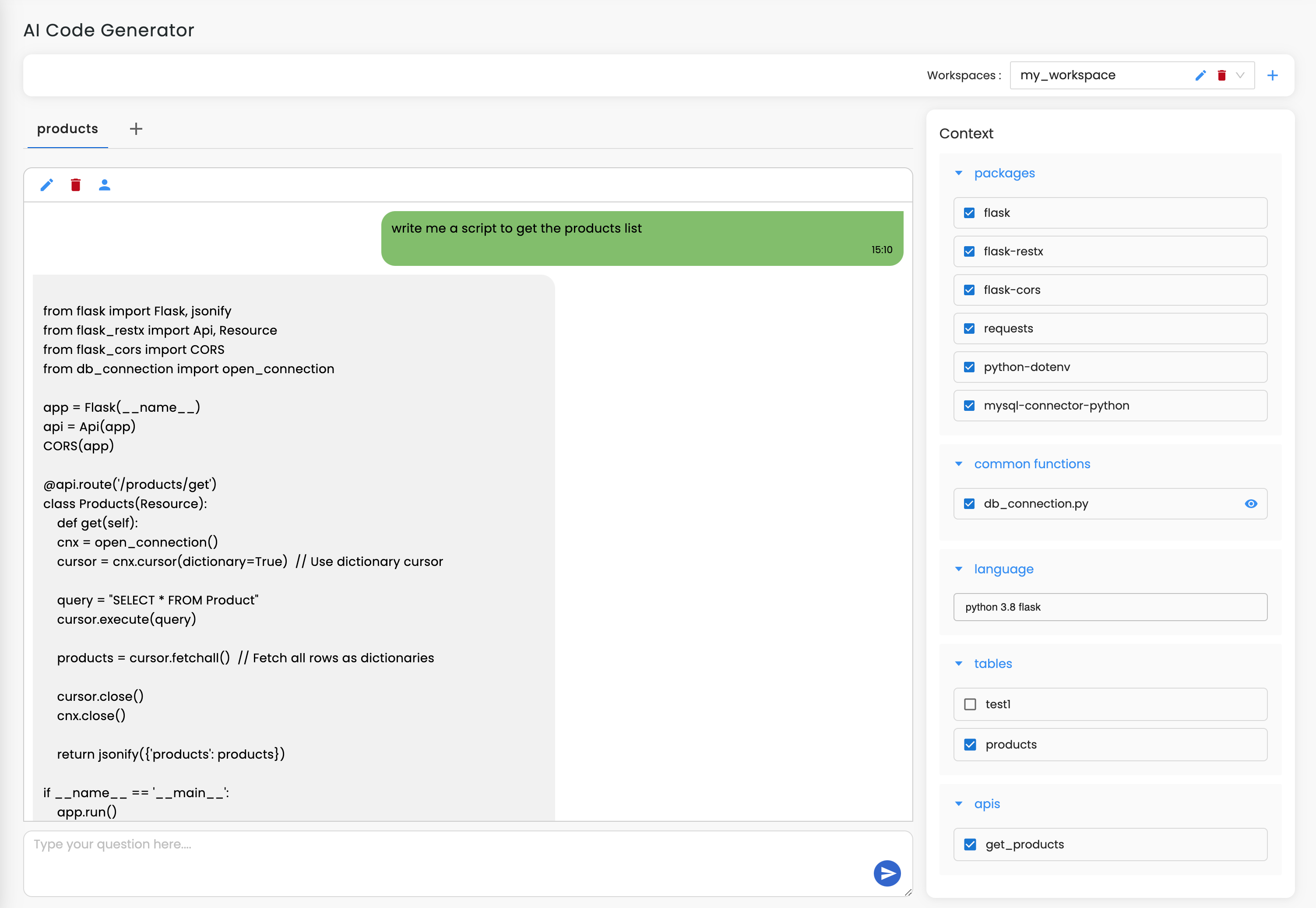

How it works

Create your Workik account using Google or email to access the AI-driven data cleaning environment.

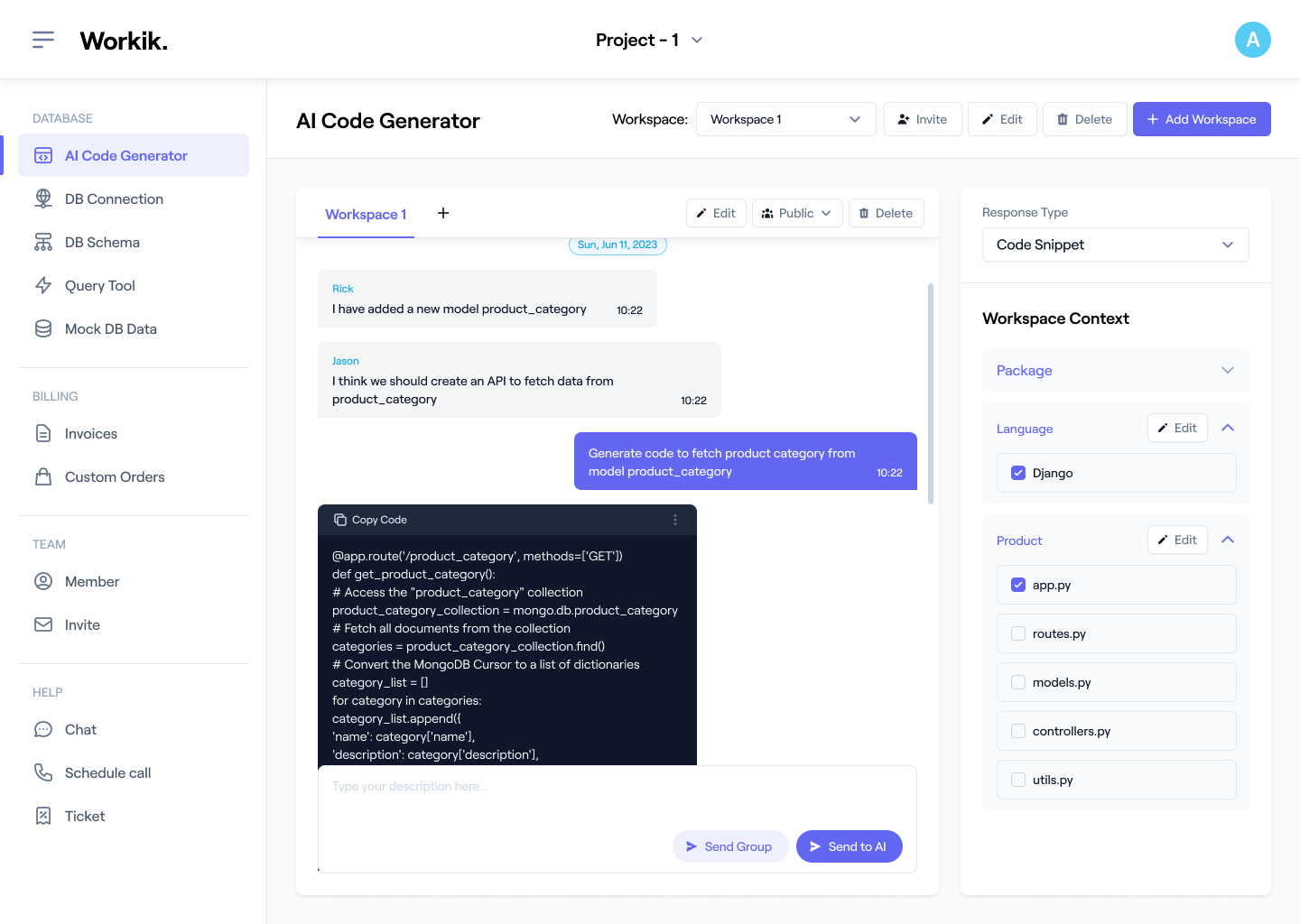

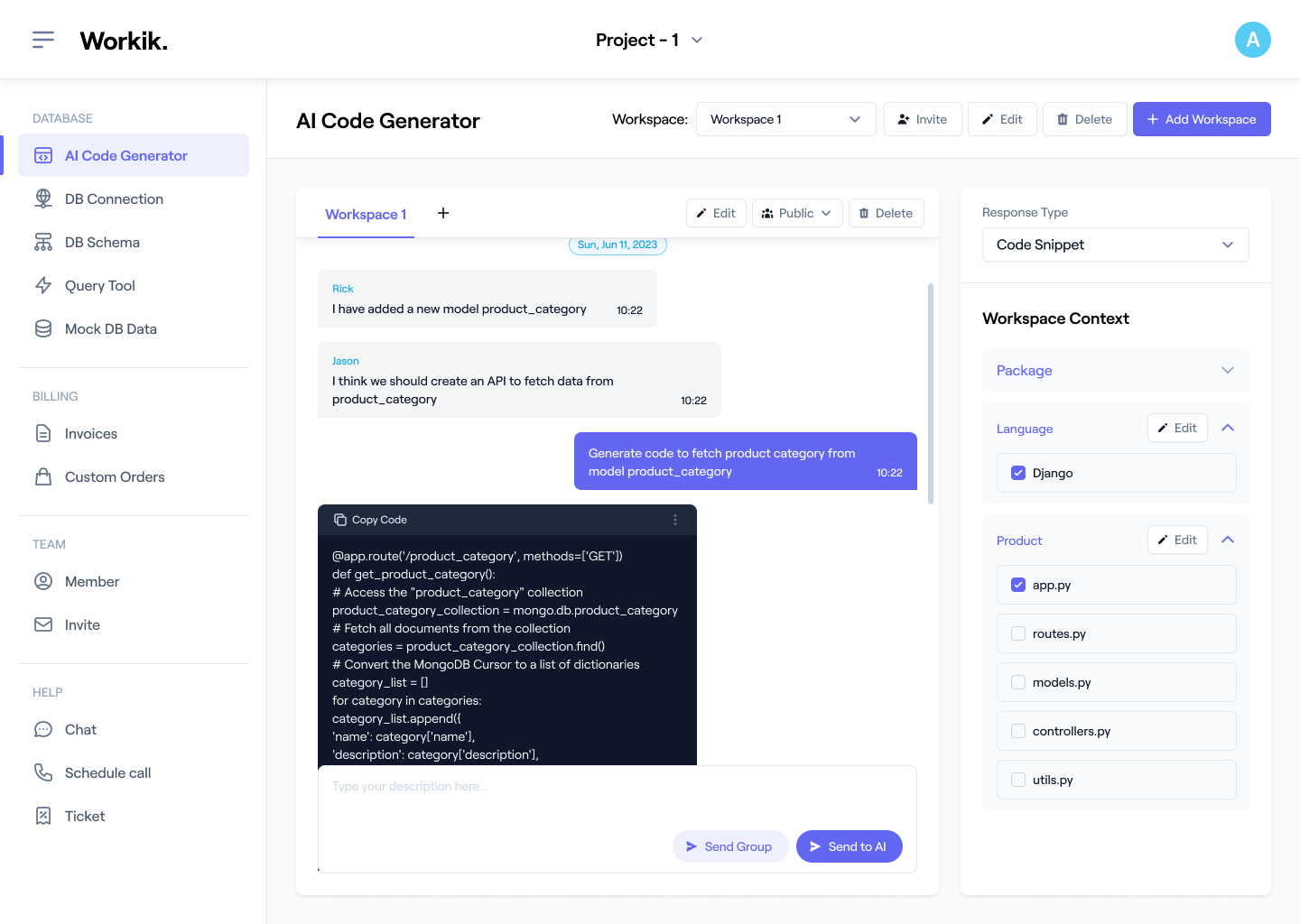

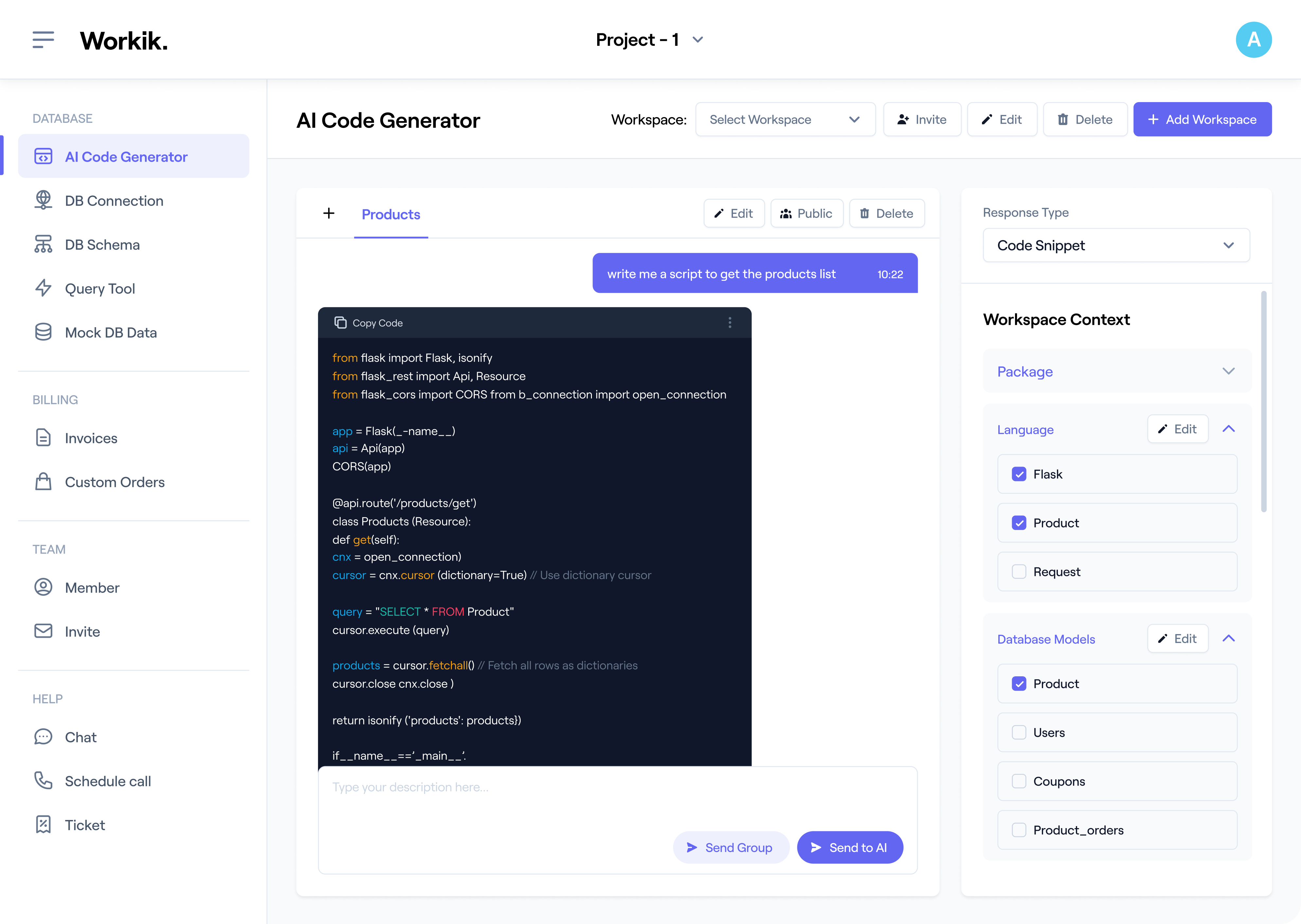

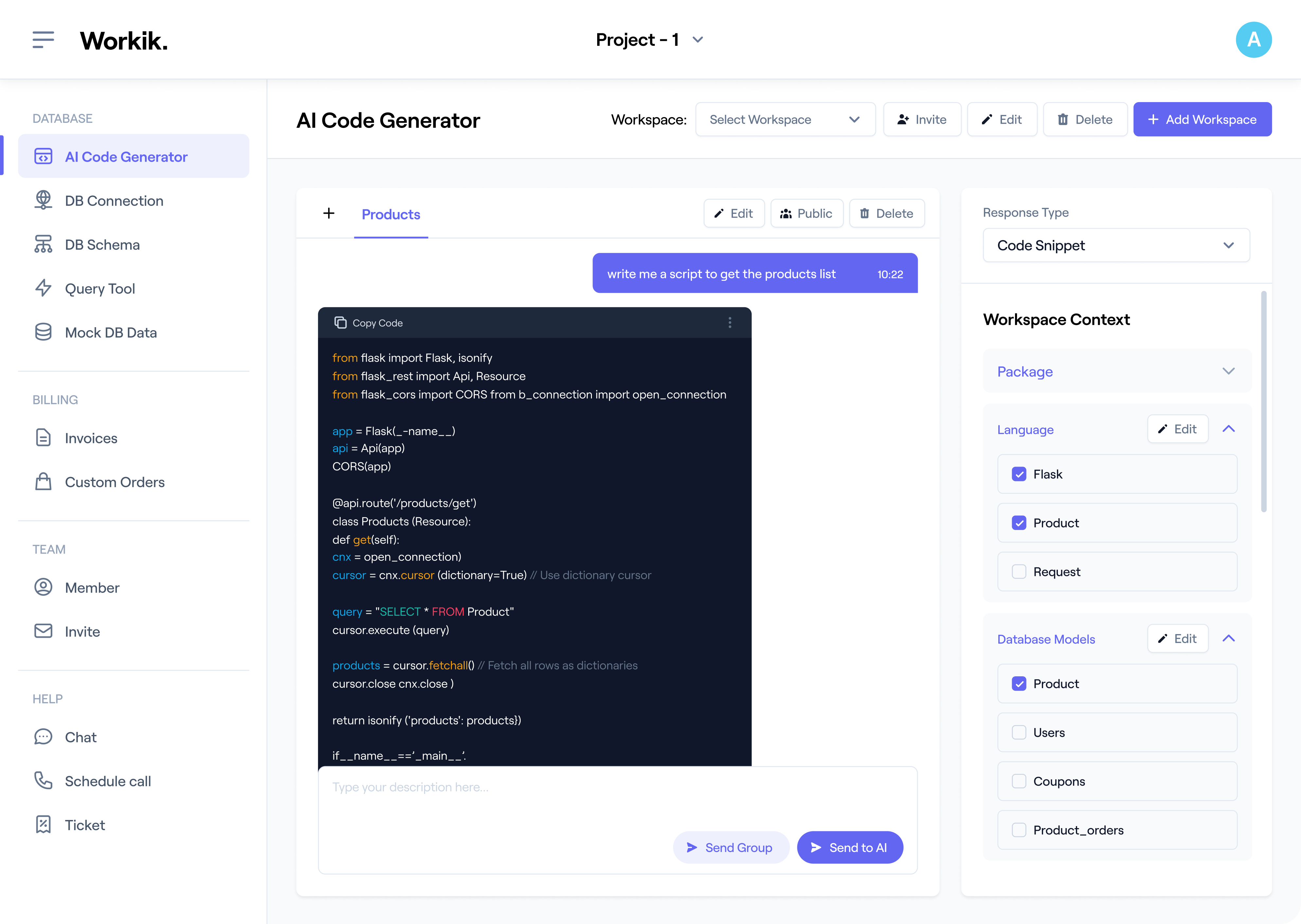

Connect your repositories from GitHub, GitLab, or Bitbucket, upload CSV or Excel files, or link databases such as PostgreSQL and MongoDB. Add schemas and define column types to guide the AI.

Generate scripts for null handling, type correction, and field normalization. You can also refactor legacy cleaning logic, optimize transformation steps, and validate data structure, types, and constraints for consistency.
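Validation of structure, types, and constraints can be expressed as a small rule table checked row by row. This is a sketch under assumed rules (the `RULES` dict and its order columns are hypothetical), not the exact code Workik generates.

```python
# Hypothetical constraints for an orders table; adapt names and rules to your schema.
RULES = {
    "order_id": {"type": int, "required": True, "unique": True},
    "quantity": {"type": int, "required": True, "min": 1},
    "status": {"type": str, "required": False, "allowed": {"new", "shipped", "cancelled"}},
}


def validate(rows, rules=RULES):
    """Return (row_index, column, problem) tuples; an empty list means every row passed."""
    problems = []
    seen = {col: set() for col, rule in rules.items() if rule.get("unique")}
    for i, row in enumerate(rows):
        for col in row.keys() - rules.keys():
            problems.append((i, col, "unexpected column"))
        for col, rule in rules.items():
            value = row.get(col)
            if value is None:
                if rule.get("required"):
                    problems.append((i, col, "missing required value"))
                continue
            if not isinstance(value, rule["type"]):
                problems.append((i, col, f"expected {rule['type'].__name__}"))
                continue  # skip value checks when the type is already wrong
            if "min" in rule and value < rule["min"]:
                problems.append((i, col, f"below minimum {rule['min']}"))
            if "allowed" in rule and value not in rule["allowed"]:
                problems.append((i, col, "value not allowed"))
            if col in seen:
                if value in seen[col]:
                    problems.append((i, col, "duplicate value"))
                seen[col].add(value)
    return problems


orders = [
    {"order_id": 1, "quantity": 2, "status": "new"},
    {"order_id": 1, "quantity": 0, "status": "lost"},
]
for problem in validate(orders):
    print(problem)
```

Returning a list of problems instead of raising on the first one makes it easier to report every issue in a batch at once.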

Generate structured documentation for your scripts and schema changes. Invite your team to validate preprocessed data through Workik workspaces.


TESTIMONIALS

Real Stories, Real Results with Workik

Workik cleaned inconsistent field formats across millions of CRM records and generated scripts to handle edge cases we missed.

Stefan Tuning

Backend Developer

I used Workik to normalize schemas from multiple PostgreSQL tables and clean legacy null-handling logic before migrating to Snowflake.

Jared Lunther

Data Engineer

Workik helped us deduplicate and standardize product data across vendor feeds, making our analytics reports 10x more reliable.

Ethan Morales

Full Stack Developer

What are some popular use cases of Workik's AI-powered Data Cleaning Code Generator?

Workik’s AI-powered Data Cleaning Code Generator supports a variety of real-world use cases, which include, but are not limited to:

* Generate Python, SQL, or PySpark scripts for handling missing values, standardizing formats, and fixing data types.

* Deduplicate records in customer, sales, or event logs across large datasets.

* Normalize date, currency, and categorical fields for reporting and analytics.

* Detect and correct schema drift across evolving data pipelines.

* Validate data integrity by checking type mismatches, constraint violations, and null patterns.

* Prepare clean, structured data for BI dashboards and machine learning models.

* Clean and merge multi-source datasets from APIs, CSVs, or databases with inconsistent structures.
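Schema drift detection, mentioned above, can be sketched as a comparison between an expected schema and the types actually observed in a new batch. This version treats Python type names as the schema, which is an assumption; a warehouse pipeline would compare database column types or a JSON Schema instead.

```python
def infer_schema(records):
    """Map each column to the set of Python type names observed in a batch of dict records."""
    schema = {}
    for record in records:
        for col, value in record.items():
            types = schema.setdefault(col, set())
            if value is not None:  # nulls say nothing about the column's type
                types.add(type(value).__name__)
    return schema


def detect_drift(expected, records):
    """Compare an expected {column: type_name} schema with what a new batch contains."""
    observed = infer_schema(records)
    report = {
        "missing_columns": sorted(expected.keys() - observed.keys()),
        "new_columns": sorted(observed.keys() - expected.keys()),
        "type_changes": {},
    }
    for col in expected.keys() & observed.keys():
        unexpected = observed[col] - {expected[col]}
        if unexpected:
            report["type_changes"][col] = sorted(unexpected)
    return report


expected = {"id": "int", "price": "float", "sku": "str"}
batch = [
    {"id": 1, "price": "9.99", "category": "toys"},
    {"id": 2, "price": 4.5, "category": None},
]
print(detect_drift(expected, batch))
```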

What context-setting options are available in Workik’s AI Data Cleaning Code Generator?

Workik offers powerful context-setting options for data cleaning tasks, enabling users to:

* Connect to databases like PostgreSQL, MySQL, MongoDB, or Snowflake.

* Link GitHub, GitLab, or Bitbucket to access and clean versioned datasets.

* Upload data schemas in JSON, CSV, or SQL formats to guide AI cleaning logic.

* Define column types, key fields, and null-handling rules for structured cleaning.

* Add examples of dirty data to refine the AI’s understanding of formatting issues.

* Include data profiling insights to improve validation and transformation steps.

* Import API definitions to clean and validate incoming API payloads or responses.
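Column types, key fields, and null-handling rules are easiest to reason about as a declarative spec. The sketch below assumes a hypothetical `SPEC` format with `type`, `key`, and `on_null` entries; the null rules shown (drop, median, mode, forward fill) are common imputation choices, not a fixed Workik schema.

```python
from statistics import median, mode

# Hypothetical column spec: target type, key flag, and how to fill nulls.
SPEC = {
    "customer_id": {"type": int, "key": True, "on_null": "drop"},
    "age": {"type": float, "on_null": "median"},
    "plan": {"type": str, "on_null": "mode"},
    "last_seen": {"type": str, "on_null": "ffill"},
}


def apply_spec(rows, spec=SPEC):
    """Cast columns and fill nulls per the spec; rows with a null key column are dropped."""
    key_cols = [c for c, s in spec.items() if s.get("key")]
    rows = [dict(r) for r in rows if all(r.get(c) is not None for c in key_cols)]
    for col, rule in spec.items():
        present = [rule["type"](r[col]) for r in rows if r.get(col) is not None]
        fill = None  # "drop" on a non-key column simply leaves nulls in place
        if rule["on_null"] == "median" and present:
            fill = median(present)
        elif rule["on_null"] == "mode" and present:
            fill = mode(present)
        previous = None
        for r in rows:
            value = r.get(col)
            if value is None:
                # Forward fill reuses the previous row's value; other rules use the column fill.
                r[col] = previous if rule["on_null"] == "ffill" else fill
            else:
                r[col] = rule["type"](value)
            previous = r[col]
    return rows


raw_rows = [
    {"customer_id": 1, "age": "30", "plan": "pro", "last_seen": "2024-01-01"},
    {"customer_id": None, "age": "99", "plan": "free", "last_seen": None},
    {"customer_id": 2, "age": None, "plan": None, "last_seen": None},
    {"customer_id": 3, "age": "40", "plan": "pro", "last_seen": "2024-02-01"},
]
for row in apply_spec(raw_rows):
    print(row)
```

Fill values are computed from the rows that remain after key-based drops, and the input rows are copied rather than modified.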

Can Workik help me standardize user-generated content like names, addresses, or feedback text?

Yes. Workik uses AI to intelligently clean and normalize free-text inputs. It can fix inconsistent casing in names, standardize address abbreviations (like “St.” to “Street”), and even detect junk or placeholder values in feedback fields. This is especially useful in survey analysis or onboarding forms.
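A minimal sketch of this kind of free-text cleanup in plain Python. The suffix table and placeholder list are hypothetical and deliberately small. `str.title()` has known limits (it turns "McDonald" into "Mcdonald"), and only a trailing street suffix is expanded so that "St." meaning "Saint" earlier in an address is left alone.

```python
import re

# Hypothetical lookup tables; production data usually needs larger, locale-aware lists.
STREET_SUFFIXES = {"st": "Street", "ave": "Avenue", "rd": "Road", "blvd": "Boulevard"}
PLACEHOLDERS = {"", "n/a", "na", "none", "null", "test", "asdf", "-", "..."}
SUFFIX_PATTERN = re.compile(r"\b(" + "|".join(STREET_SUFFIXES) + r")\.?$", re.IGNORECASE)


def clean_name(raw):
    """Collapse repeated whitespace and title-case each word of a person's name."""
    return " ".join(raw.split()).title()


def clean_address(raw):
    """Collapse whitespace and expand a trailing street abbreviation such as St. or Ave."""
    text = " ".join(raw.split())
    return SUFFIX_PATTERN.sub(lambda m: STREET_SUFFIXES[m.group(1).lower()], text)


def is_placeholder(raw):
    """Flag empty, null-like, or junk feedback such as a single character repeated."""
    text = raw.strip().lower()
    return text in PLACEHOLDERS or re.fullmatch(r"(.)\1{3,}", text) is not None


print(clean_name("  mary   o'neil "))
print(clean_address("12 St. Louis Ave"))
print(is_placeholder(" N/A "), is_placeholder("Great onboarding flow"))
```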

What if my data changes daily or has frequent updates?

Workik lets you convert your cleaning logic into reusable, trigger-based pipelines. Set up scheduled runs or connect them to Git events so that every time new data is pushed, Workik applies the cleaning logic automatically. This is ideal for teams working with time-sensitive or streaming data.

Is Workik useful for quick exploration before full data cleaning?

Yes. You can upload any dataset and get an instant AI-generated profile report showing null counts, outlier columns, inconsistent types, and other high-level diagnostics. This is a good way to decide where to start, especially during audits or early analysis.
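A basic profile of this kind can be sketched with the standard library: per-column null counts plus a check for columns that mix numeric and text values. The set of null tokens is an assumption, and this sketch does not cover outlier detection.

```python
import csv
import io
from collections import Counter

NULL_TOKENS = {"", "na", "n/a", "null", "none"}  # assumption: values treated as null


def infer_kind(value):
    """Classify a non-null raw string as 'int', 'float', or 'str'."""
    for kind, cast in (("int", int), ("float", float)):
        try:
            cast(value)
            return kind
        except ValueError:
            pass
    return "str"


def profile(text):
    """Return per-column null counts and the mix of value kinds found in CSV text."""
    report = {}
    for row in csv.DictReader(io.StringIO(text)):
        for col, raw in row.items():
            stats = report.setdefault(col, {"nulls": 0, "kinds": Counter()})
            value = (raw or "").strip()
            if value.lower() in NULL_TOKENS:
                stats["nulls"] += 1
            else:
                stats["kinds"][infer_kind(value)] += 1
    for stats in report.values():
        stats["mixed_types"] = len(stats["kinds"]) > 1
    return report


sample = "id,age,city\n1,34,Oslo\n2,N/A,Bergen\n3,thirty,\n"
for column, stats in profile(sample).items():
    print(column, stats)
```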

Can Workik clean external API responses or real-time JSON data?

Definitely. You can upload or pipe in JSON payloads from APIs and use Workik to validate schema, filter unwanted fields, normalize key names, or convert them into structured formats for storage or analysis.
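For JSON payloads, a minimal cleaning step might parse the object, normalize key names to snake_case, keep only expected fields, and reject payloads missing required keys. `ALLOWED_KEYS` and `REQUIRED_KEYS` are hypothetical; in practice they would come from your API definition.

```python
import json
import re

# Hypothetical payload contract: fields to keep and fields that must be present.
ALLOWED_KEYS = {"user_id", "event_type", "created_at", "amount"}
REQUIRED_KEYS = {"user_id", "event_type"}


def to_snake_case(key):
    """Normalize camelCase, PascalCase, kebab-case, or spaced keys to snake_case."""
    key = re.sub(r"[\s\-]+", "_", key.strip())
    key = re.sub(r"(?<=[a-z0-9])([A-Z])", r"_\1", key)
    return key.lower()


def clean_payload(raw_json):
    """Parse a JSON object, normalize keys, drop unknown fields, and check required keys."""
    data = json.loads(raw_json)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    normalized = {to_snake_case(k): v for k, v in data.items()}
    cleaned = {k: v for k, v in normalized.items() if k in ALLOWED_KEYS}
    missing = REQUIRED_KEYS - cleaned.keys()
    if missing:
        raise ValueError(f"missing required keys: {sorted(missing)}")
    return cleaned


payload = '{"userId": 7, "EventType": "click", "created-at": "2024-05-01", "trackingPixel": "x"}'
print(clean_payload(payload))
```

The key normalizer is regex-based, so acronyms such as `HTTPStatus` collapse to `httpstatus`; a stricter contract might map known keys explicitly.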


DATA CLEANING: Question & Answer

Data cleaning is the process of detecting, correcting, or removing corrupt, inaccurate, or inconsistent records from a dataset to ensure its quality and usability. Tasks include handling missing values, standardizing column formats, correcting data types, resolving schema mismatches, and removing duplicates. Clean data is critical for downstream tasks like analytics, machine learning, and database operations.

Popular technologies used in data cleaning workflows include:

* Languages: Python, SQL, R, Scala

* Libraries/Frameworks: Pandas, NumPy, PySpark, dplyr (R), OpenRefine

* Databases: PostgreSQL, MySQL, MongoDB, Snowflake, BigQuery

* Data Platforms: Apache Spark, Databricks, AWS Glue, Google Cloud Dataprep

* ETL Tools: Apache NiFi, Talend, Airbyte

* Data Profiling and Validation Tools: Great Expectations, Deequ, DataCleaner

* Workflow Orchestration: Apache Airflow, Prefect

Data cleaning is foundational to a wide range of workflows, including, but not limited to:

* ML Preprocessing: Prepare clean, labeled, and consistent datasets for model training and evaluation.

* BI and Analytics: Ensure accurate dashboards and insights by resolving formatting, type, and null inconsistencies.

* ETL/ELT Pipelines: Normalize and validate data during ingestion from diverse sources like APIs, logs, and flat files.

* CRM & E-commerce: Standardize customer records, deduplicate transactions, and fix inconsistent product fields.

* Survey and Feedback Processing: Clean open-text responses, validate schema, and unify categorical answers.

* Data Warehousing: Harmonize column formats and resolve schema drift for consistent querying in Redshift or Snowflake.

* Third-Party Integrations: Clean vendor or partner-supplied data before merging it into production systems.

* Real-Time Cleaning: Prepare incoming JSON or API payloads for validation and storage in stream processing platforms.

Data cleaning expertise opens up roles like Data Engineer, Machine Learning Engineer, Data Analyst, ETL Developer, Data Quality Analyst, Business Intelligence Developer, and Analytics Engineer.

Workik AI simplifies and accelerates data cleaning with:

* Script Generation: Create Python, SQL, or PySpark scripts for cleaning tasks instantly.

* Null Handling: Apply smart imputation methods like median, mode, or forward fill.

* Data Type Correction: Detect and fix column type mismatches automatically.

* Field Normalization: Standardize date, currency, and categorical formats.

* Duplicate Resolution: Identify and remove exact or fuzzy duplicates.

* Schema Validation: Catch schema drift and generate correction scripts.

* Multi-Source Merge: Align headers and unify formats across datasets.

* Refactoring: Simplify and improve existing cleaning logic.

* Profiling Reports: Get null counts, outlier detection, and quality metrics.

* Reusable Pipelines: Save and reuse workflows across data projects.

* Documentation: Generate structured docs for cleaning logic and schema updates.
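Exact duplicates can be dropped with a set, but fuzzy duplicates need a similarity measure. This sketch uses Python's built-in `difflib.SequenceMatcher` ratio with an assumed 0.9 threshold; dedicated tools often use other measures, such as Levenshtein or Jaro-Winkler distance.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(text):
    """Lower-case and collapse whitespace so trivial differences don't affect the score."""
    return " ".join(text.lower().split())


def fuzzy_duplicates(values, threshold=0.9):
    """Return (i, j, score) for index pairs whose normalized similarity ratio >= threshold."""
    norm = [normalize(v) for v in values]
    pairs = []
    for i, j in combinations(range(len(values)), 2):
        score = SequenceMatcher(None, norm[i], norm[j]).ratio()
        if score >= threshold:
            pairs.append((i, j, round(score, 3)))
    return pairs


companies = ["Acme Corp", "ACME  Corp.", "Globex Inc", "Acme Corporation"]
print(fuzzy_duplicates(companies))  # [(0, 1, 0.947)]
```

Comparing every pair is O(n²), so larger datasets usually apply blocking first, comparing only records that share a cheap key such as a postal code or the first letters of a name.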


© Workik Inc. 2026 All rights reserved.