Join our community to see how developers are using Workik AI every day.

Features

Generate Code Instantly

AI generates optimized Python code for web scraping with BeautifulSoup, allowing you to extract text, images, links & more.

Optimize HTML Parsing

Enhance BeautifulSoup scripts using lxml and html5lib with AI, ensuring faster and cleaner HTML parsing with minimal manual effort.
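
As a rough illustration of parser selection, the sketch below tries lxml, then html5lib, and falls back to Python's built-in `html.parser` when neither is installed. The helper name `make_soup` and the sample markup are assumptions for this example, not output from Workik.

```python
from bs4 import BeautifulSoup, FeatureNotFound


def make_soup(html, preferred=("lxml", "html5lib")):
    """Parse `html` with the first installed parser from `preferred`.

    Hypothetical helper: falls back to the standard-library parser
    when none of the preferred parsers is available.
    """
    for parser in preferred:
        try:
            return BeautifulSoup(html, parser)
        except FeatureNotFound:
            # This parser is not installed; try the next one.
            continue
    return BeautifulSoup(html, "html.parser")


soup = make_soup("<ul><li>one</li><li>two</li></ul>")
items = [li.get_text(strip=True) for li in soup.find_all("li")]
print(items)
```

Parsers can build different trees from malformed markup, so it is worth pinning one parser per project rather than relying on whatever happens to be installed.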

Automate Pagination Handling

AI detects pagination and generates dynamic scripts to scrape multiple pages, handling requests & data extraction seamlessly.
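
A pagination loop of this kind might look like the sketch below. It assumes each page marks its results with a `.item` class and links to the following page with an `a.next` anchor; both selectors, the function name, and the injected `fetch` callable are hypothetical and would need to match the real site.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def scrape_all_pages(start_url, fetch, max_pages=50):
    """Collect item text from every page reachable through "next" links.

    `fetch` is any callable that maps a URL to HTML text, for example
    lambda u: requests.get(u, timeout=10).text
    """
    results, seen, url = [], set(), start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)  # guards against pagination loops
        soup = BeautifulSoup(fetch(url), "html.parser")
        results.extend(el.get_text(strip=True) for el in soup.select(".item"))
        next_link = soup.select_one("a.next")  # assumed "next page" selector
        href = next_link.get("href") if next_link else None
        url = urljoin(url, href) if href else None
    return results
```

Passing `fetch` in as a parameter keeps the loop easy to test against canned HTML before it is pointed at a live site.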

Export Data in Any Format

Use AI to easily export scraped data into CSV, JSON, or integrate directly into databases using pandas and SQLAlchemy.

How it works

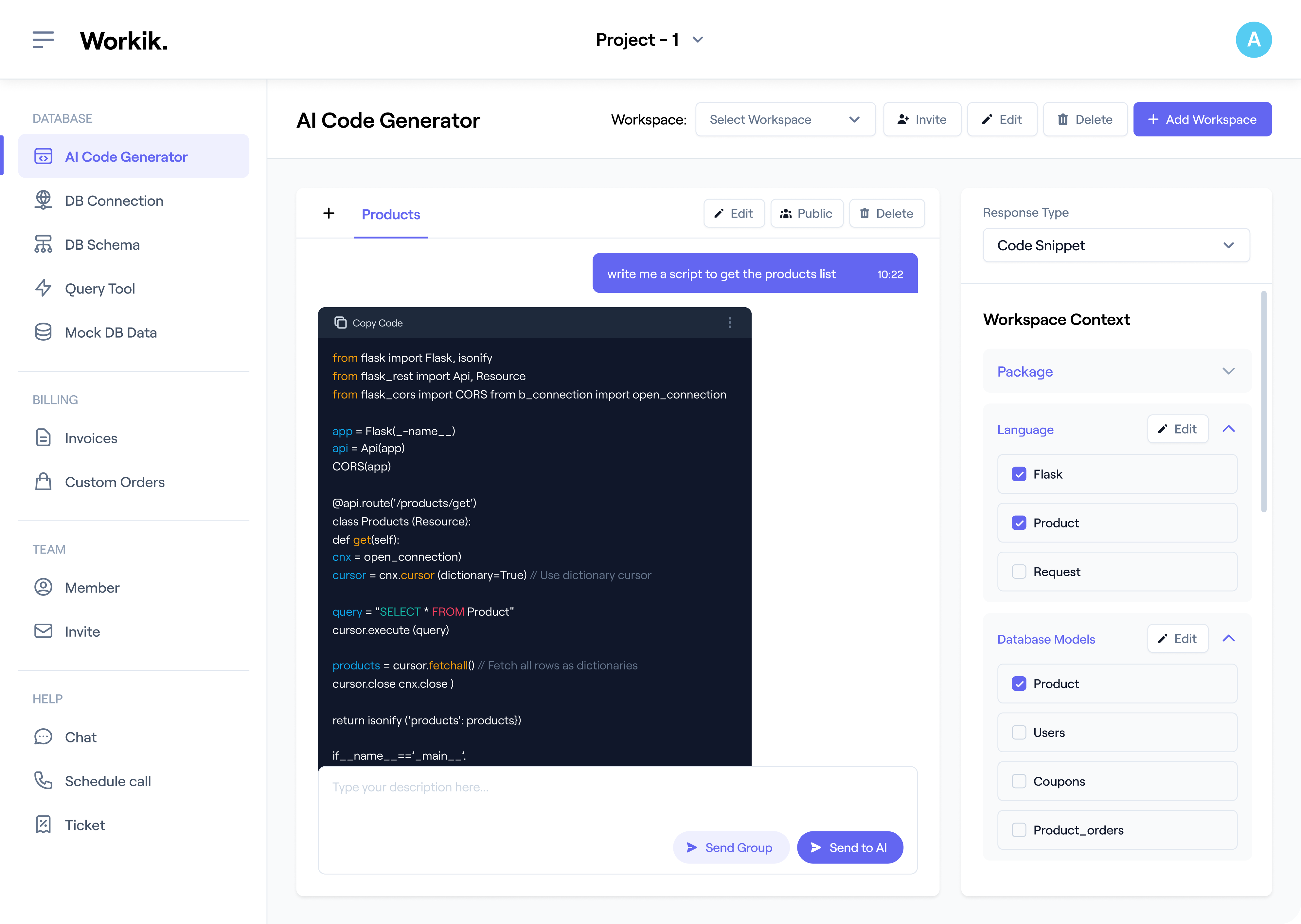

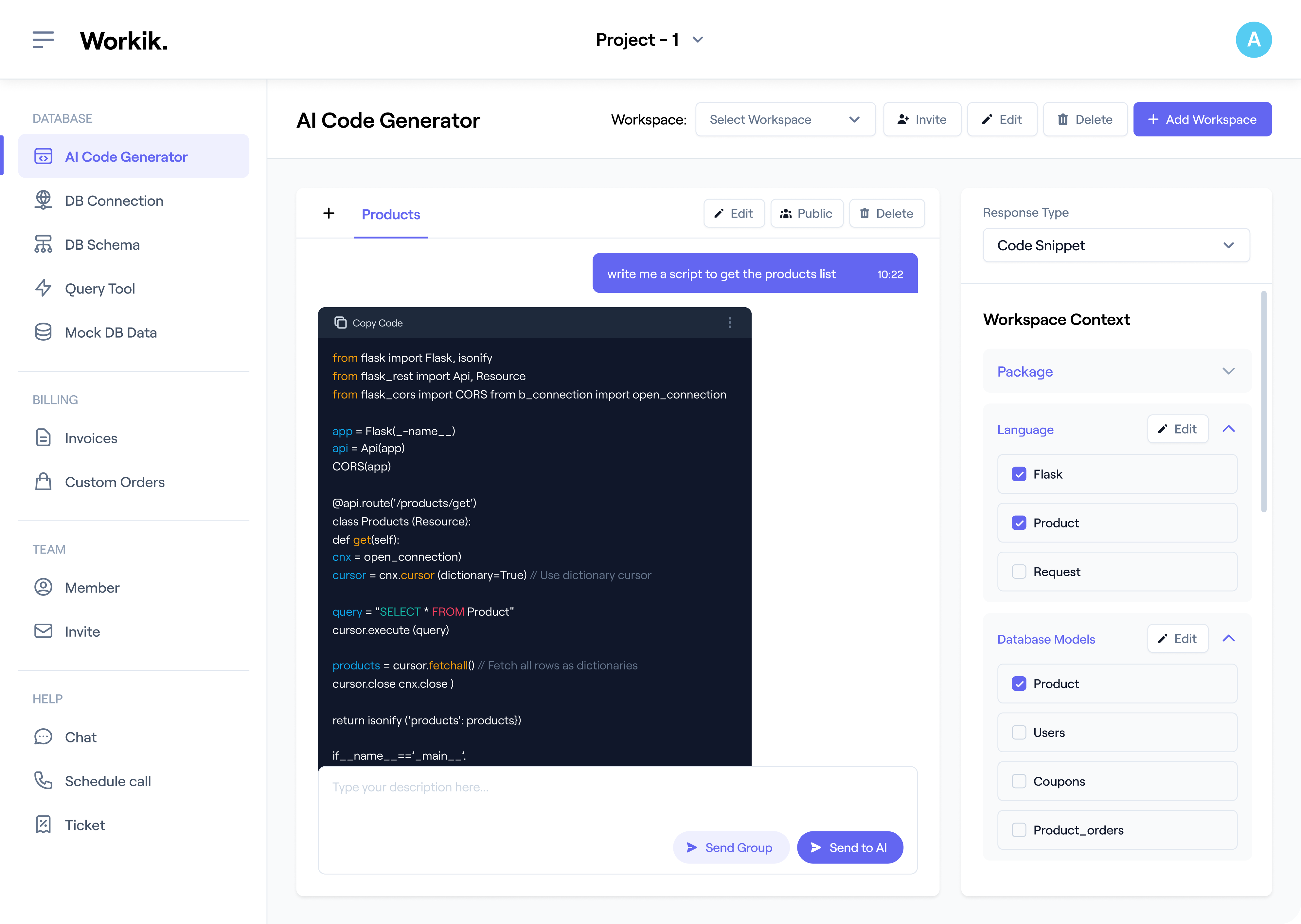

Sign up quickly with Google or manually to access AI-powered BeautifulSoup code generation.

Enter the website URL and select elements to scrape, such as images or links. Specify parsing tools like lxml or html5lib for precision, and easily import existing data/code from GitHub, GitLab, or Bitbucket.

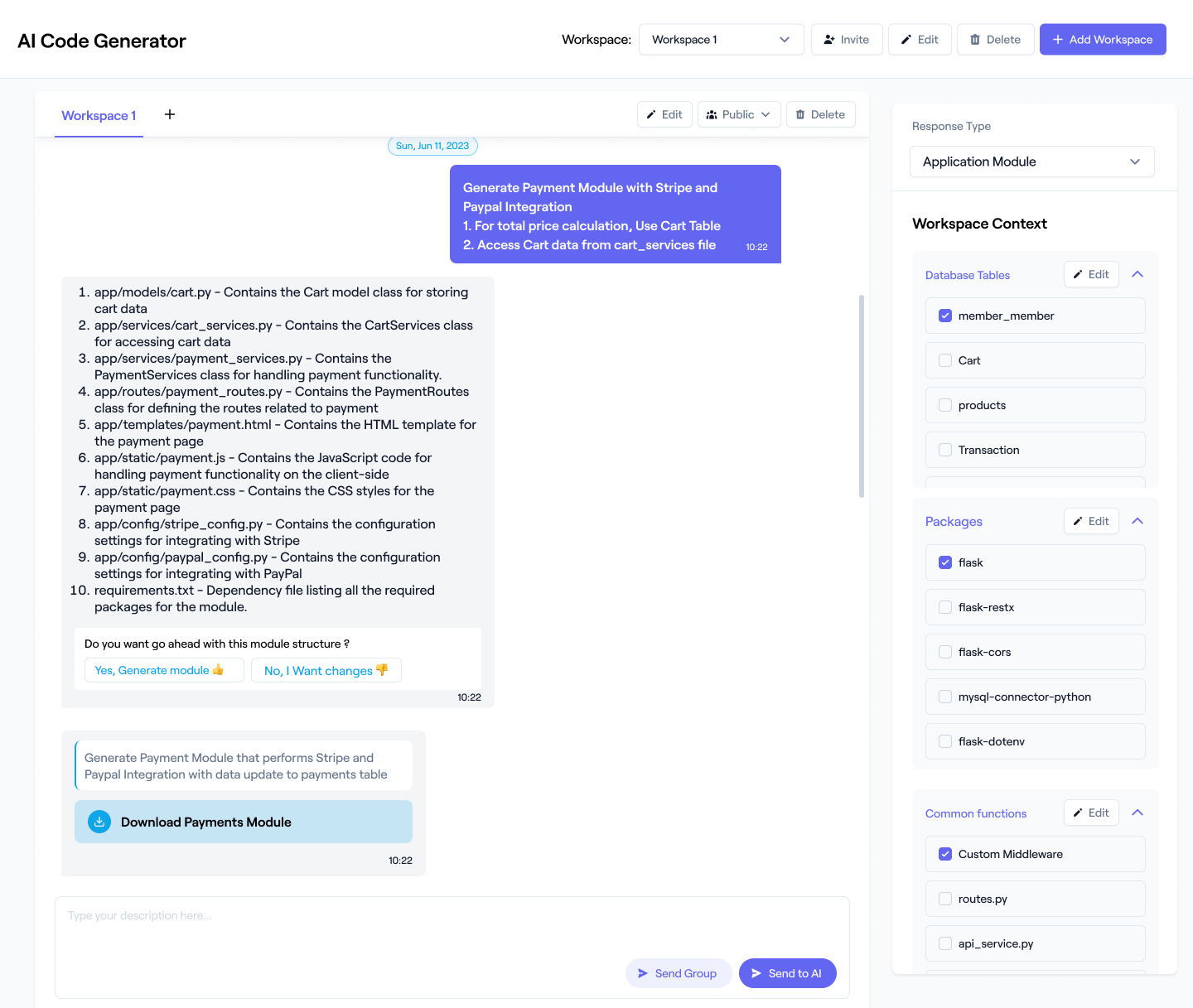

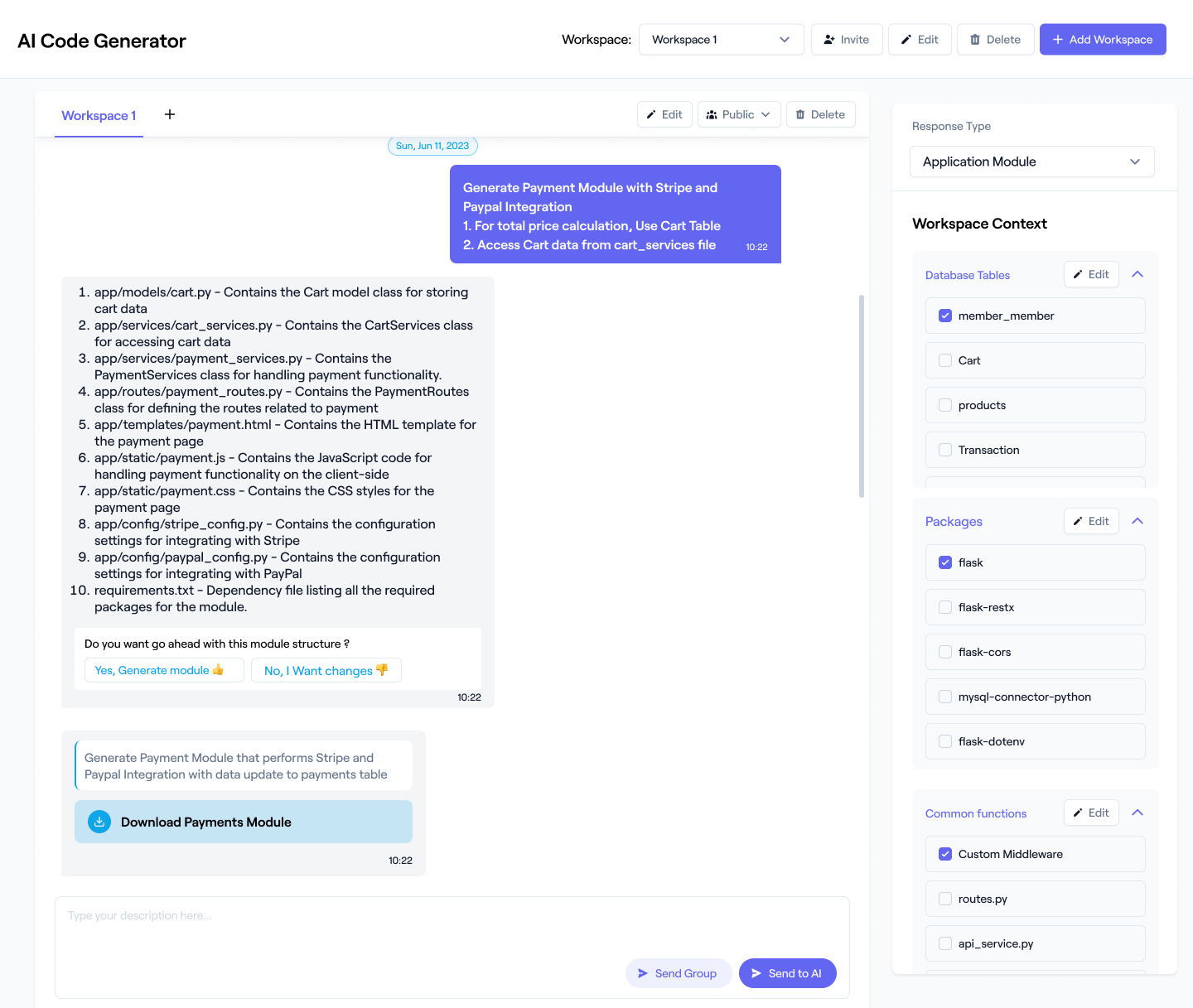

AI generates optimized Python code with BeautifulSoup, handling requests, pagination, and form submissions. Easily customize the script to refine scraping logic and adjust output formats as needed.

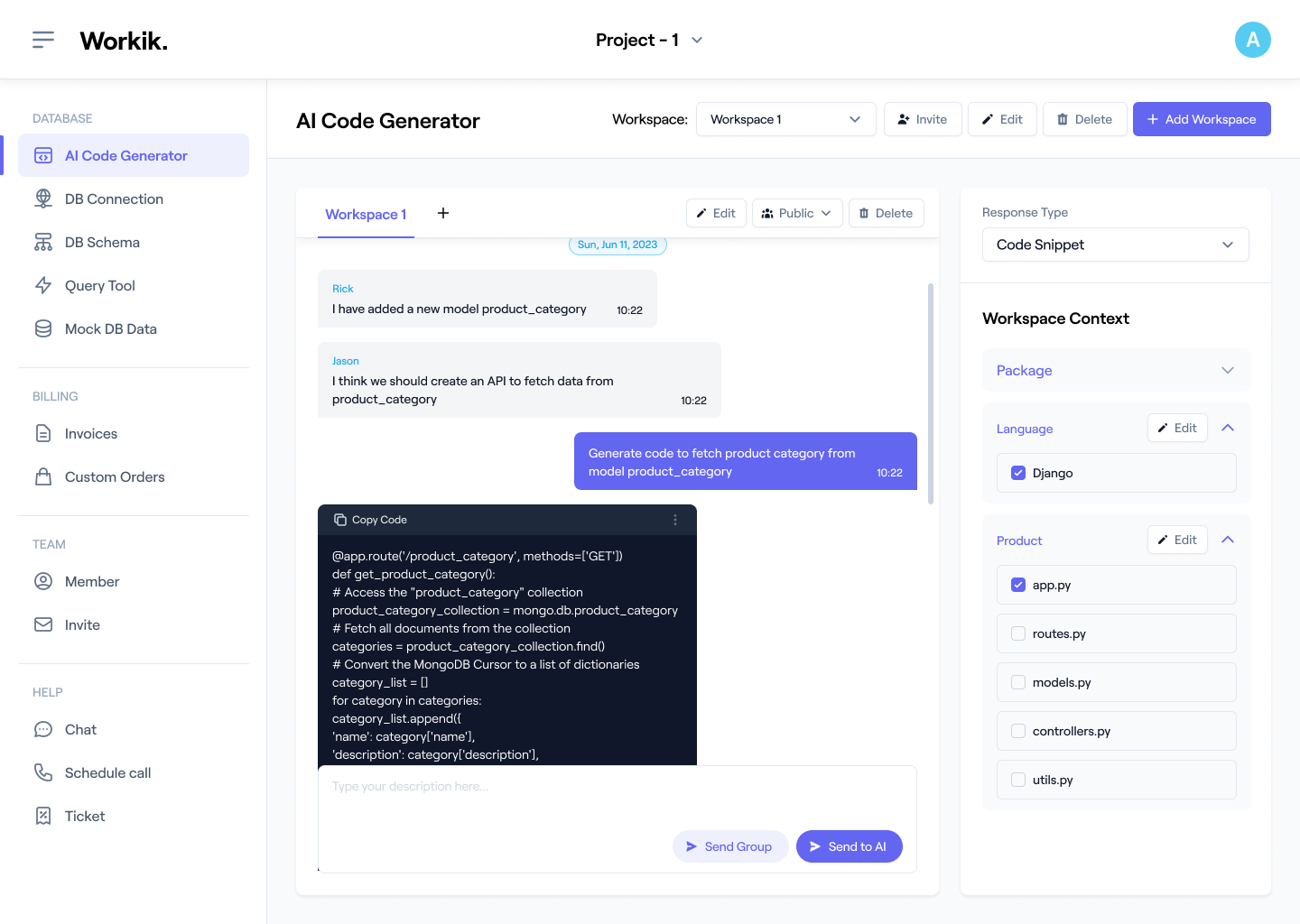

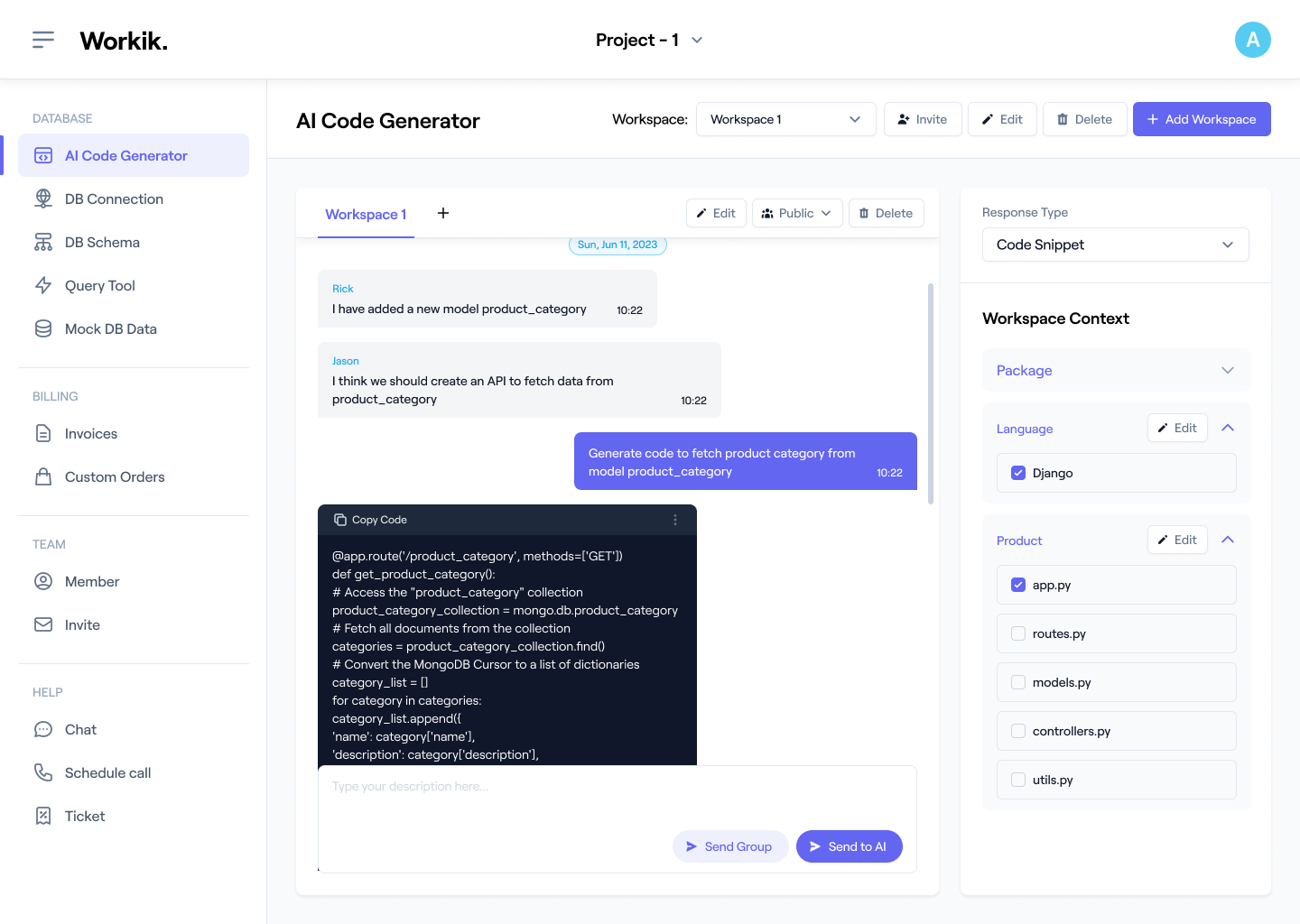

Collaborate in real-time on BeautifulSoup projects by inviting your team into Workik workspaces. Share roles, leverage AI insights, and streamline your web scraping workflow together.

TESTIMONIALS

Real Stories, Real Results with Workik

Workik's AI made BeautifulSoup scraping fast and efficient. It handled requests and pagination perfectly!

Diana Bloom

Senior Python Developer

BeautifulSoup code generation was seamless. I exported data to JSON in seconds!

Kevin Brew

Data Analyst

Workik’s AI made BeautifulSoup easy to use. Perfect for learning and scraping data effortlessly!

Millie Ortega

Junior Python Developer

What are some popular use cases of Workik's AI-powered BeautifulSoup Code Generator?

Popular use cases for developers include, but are not limited to:

* Extract data from any site, targeting specific elements like text, images, or links.

* Scrape metadata such as titles, descriptions, and tags across multiple pages.

* Automate extraction of HTML tables and lists for structured data capture at scale.

* Handle multi-page scraping efficiently with automated pagination.

* Integrate scraped data directly with APIs or databases for seamless data storage.

* Generate scripts for dynamic content handling, including AJAX-loaded pages.

* Schedule recurring scraping jobs to keep datasets updated automatically.
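
To make the table-extraction use case above concrete, here is a minimal sketch that turns the first `<table>` on a page into a list of dictionaries. The function name is hypothetical, and the sketch assumes a simple table with one header row and no merged cells.

```python
from bs4 import BeautifulSoup


def table_to_records(html):
    """Return the first HTML table as a list of {header: cell} dicts."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table")
    if table is None:
        return []
    headers = [th.get_text(strip=True) for th in table.find_all("th")]
    records = []
    for tr in table.find_all("tr"):
        cells = [td.get_text(strip=True) for td in tr.find_all("td")]
        if cells:  # skip the header row, which has no <td> cells
            records.append(dict(zip(headers, cells)))
    return records
```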

What context-setting options are available in Workik’s AI for BeautifulSoup Code Generator?

Workik offers diverse context-setting options for BeautifulSoup code assistance, allowing users to:

* Specify the target website URL for scraping.

* Define HTML elements to extract (e.g., images, links, tables).

* Select parsing libraries like lxml or html5lib for accurate HTML processing.

* Import existing code or data from GitHub, GitLab, or Bitbucket.

* Add dynamic contexts like database schemas or API endpoints for enhanced scraping workflows.

Can Workik AI automate advanced web scraping tasks like handling forms or AJAX-loaded content?

Yes, Workik AI can generate code that automates form submissions or handles AJAX-based content. For instance, if you need to log into a site to access restricted data or scrape dynamically loaded sections, Workik’s AI can generate Python code integrated with requests and Selenium to execute these actions, making even complex tasks manageable.
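
For the form-submission case, one common pattern is to parse the login form with BeautifulSoup, carry over its hidden fields (such as a CSRF token), and post the result with a `requests.Session`. The sketch below covers only the parsing step. The function name, field names, and URLs are assumptions for illustration.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def build_form_payload(page_url, html, credentials):
    """Return (action_url, payload) for the first form in `html`.

    Named inputs, including hidden ones, are copied into the payload,
    then overridden by the values in `credentials`.
    """
    soup = BeautifulSoup(html, "html.parser")
    form = soup.find("form")
    if form is None:
        raise ValueError("no <form> found on the page")
    payload = {
        field["name"]: field.get("value", "")
        for field in form.find_all("input")
        if field.get("name")
    }
    payload.update(credentials)
    action = urljoin(page_url, form.get("action") or page_url)
    return action, payload


# Typical use with requests (not executed here):
#   session = requests.Session()
#   page = session.get(login_url, timeout=10)
#   action, payload = build_form_payload(login_url, page.text, creds)
#   session.post(action, data=payload, timeout=10)
```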

Can I use Workik to scrape dynamic websites with BeautifulSoup?

Yes. Workik generates scripts that use Selenium to load JavaScript-rendered content, then pass the fully loaded page to BeautifulSoup to extract the necessary data.
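
The hand-off from Selenium to BeautifulSoup can be sketched as below. The function accepts any object that exposes Selenium's `get()` method and `page_source` attribute. The function name and the selector are assumptions, and a real script would usually add an explicit wait (for example Selenium's `WebDriverWait`) before reading the page.

```python
from bs4 import BeautifulSoup


def scrape_rendered(driver, url, selector):
    """Load `url` in the browser, then parse the rendered DOM."""
    driver.get(url)
    # page_source holds the DOM as the browser currently sees it,
    # including content inserted by JavaScript so far.
    soup = BeautifulSoup(driver.page_source, "html.parser")
    return [el.get_text(strip=True) for el in soup.select(selector)]


# Typical use (requires Selenium and a browser driver; not executed here):
#   from selenium import webdriver
#   driver = webdriver.Chrome()
#   try:
#       prices = scrape_rendered(driver, "https://example.com", ".price")
#   finally:
#       driver.quit()
```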

Can Workik help me handle large-scale scraping with BeautifulSoup?

Yes, Workik generates scalable BeautifulSoup scripts, automating pagination, error handling, and rate limiting. It enables smooth handling of large datasets across multiple pages while ensuring efficient data extraction.
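
Error handling and rate limiting in such a script often reduce to a retry loop with a pause between requests. The following is a minimal sketch under that assumption. `polite_fetch`, its parameters, and the backoff schedule are illustrative choices, not Workik's generated code.

```python
import time


def polite_fetch(urls, fetch, delay=1.0, retries=3, sleep=time.sleep):
    """Fetch each URL with retries and a fixed pause between URLs.

    `fetch` maps a URL to HTML text and raises on failure.
    Returns (pages, failed): a dict of url -> html and a list of failed URLs.
    """
    pages, failed = {}, []
    for url in urls:
        for attempt in range(1, retries + 1):
            try:
                pages[url] = fetch(url)
                break
            except Exception:  # narrow to requests.RequestException in practice
                if attempt == retries:
                    failed.append(url)
                else:
                    sleep(delay * 2 ** (attempt - 1))  # exponential backoff
        sleep(delay)  # rate limit: pause before the next URL
    return pages, failed
```

Recording failed URLs instead of raising lets a long run finish and be retried later for only the pages that were missed.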

How does Workik help with data exporting after scraping?

Workik simplifies exporting scraped data to CSV, JSON, or databases using tools like pandas and SQLAlchemy. It streamlines the post-processing and storage of web-scraped data for further analysis or integration.
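
A minimal sketch of that export step, assuming the scraped rows are already a list of dictionaries. The sample rows, the `products` table name, and the in-memory SQLite URL are placeholders for this example.

```python
import pandas as pd
from sqlalchemy import create_engine, text

# Hypothetical scraped rows.
rows = [
    {"title": "Widget", "price": 9.99},
    {"title": "Gadget", "price": 24.50},
]
df = pd.DataFrame(rows)

# With no path argument, to_csv and to_json return strings.
csv_text = df.to_csv(index=False)
json_text = df.to_json(orient="records")

# Write the same rows to a database through SQLAlchemy.
engine = create_engine("sqlite:///:memory:")
with engine.begin() as conn:
    df.to_sql("products", conn, if_exists="replace", index=False)
    row_count = conn.execute(text("SELECT COUNT(*) FROM products")).scalar_one()
```

Swapping the connection URL for a PostgreSQL one would target a different database without changing the `to_sql` call, provided the matching driver is installed.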

BeautifulSoup: Question and Answer

BeautifulSoup is a Python library used for web scraping, allowing developers to extract and parse data from HTML and XML files. It simplifies navigating through web pages' HTML structure and helps retrieve specific information like images, text, and links with ease.
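
As a short illustration of what that looks like in practice, the sketch below pulls the title, a heading, links, and image URLs out of an inline HTML string. The markup and the `https://example.com/` base URL are made up for the example.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

html = """
<html><head><title>Sample Page</title></head>
<body>
  <h1>Hello</h1>
  <a href="/about">About</a>
  <a href="https://example.org/docs">Docs</a>
  <img src="img/logo.png" alt="Logo">
</body></html>
"""

base = "https://example.com/"  # assumed URL the page was fetched from
soup = BeautifulSoup(html, "html.parser")

title = soup.title.get_text(strip=True)
heading = soup.h1.get_text(strip=True)
# urljoin resolves relative links against the page URL.
links = [urljoin(base, a["href"]) for a in soup.find_all("a", href=True)]
images = [urljoin(base, img["src"]) for img in soup.find_all("img", src=True)]
```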

Popular languages, frameworks, and tools used with BeautifulSoup include:

Languages:

Python

HTTP Requests:

requests, urllib

Parsing Tools:

lxml, html5lib

Automation:

Selenium (for JavaScript-heavy pages)

Data Handling:

pandas (for data manipulation), CSV, JSON

Database Integration:

SQLAlchemy, SQLite, PostgreSQL

Development Tools:

Jupyter Notebook, Google Colab, VS Code

Popular use cases for BeautifulSoup include, but are not limited to:

Data Extraction:

Scrape structured data from web pages.

Price Monitoring:

Track product prices from e-commerce sites.

SEO Data Collection:

Gather metadata like titles, descriptions, and headers for SEO analysis.

Content Aggregation:

Pull articles, blog posts, or news from multiple sites.

Job Listings Scraping:

Scrape job boards for vacancy data.

Research & Analysis:

Collect web data for academic or business research.

Career roles that use BeautifulSoup include Web Scraping Specialist, Data Analyst, Python Developer, SEO Analyst, Data Scientist, and Web Researcher. These roles focus on automating web scraping, processing web data for analysis, and integrating BeautifulSoup into Python projects for research, SEO, and machine learning tasks.

Workik AI enhances BeautifulSoup workflows by:

Code Generation:

Generates Python code for scraping tasks, handling requests, HTML parsing, and data extraction.

Pagination Handling:

Automates multi-page scraping with built-in pagination logic.

Data Export:

Exports scraped data to CSV or JSON, or writes it to databases through SQLAlchemy.

Debugging:

Provides insights to fix issues like incorrect selectors or missing HTML elements.

Optimization:

Refactors BeautifulSoup code for efficiency and performance in large-scale scraping.

Refactoring:

Improves existing code for readability and maintainability.

Integration:

Integrates BeautifulSoup with Selenium for dynamic content scraping.

Documentation:

Creates clear documentation for BeautifulSoup scripts for easier maintenance.
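
On the debugging point above, a frequent failure is a selector that matches nothing: `select_one` then returns `None`, and calling a method on it raises `AttributeError`. A small guard like the hypothetical helper below makes missing elements explicit.

```python
from bs4 import BeautifulSoup


def text_or_none(soup, selector):
    """Return the stripped text of the first match, or None if absent."""
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element is not None else None


soup = BeautifulSoup('<p class="price">$5</p>', "html.parser")
found = text_or_none(soup, ".price")
missing = text_or_none(soup, ".discount")  # selector matches nothing
```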

© Workik Inc. 2026 All rights reserved.